Topic: technical documentation


Googlebot Crawling Limits: What You Need to Know

Googlebot's default crawl limits are 15MB for HTML files, 2MB for CSS/JavaScript/images, and 64MB for PDFs, with content beyond these thresholds ignored for indexing. These generous limits are rarely a concern for most websites but are critical for sites with exceptionally large pages or files to...


Wikipedia Group's AI Detection Guide Now Powers a 'Humanizing' Chatbot Plug-In

A new open-source tool called Humanizer uses a Wikipedia community's guide to help AI models such as Claude write more naturally by avoiding 24 identified chatbot language patterns. The tool is based on a list from Wikipedia's AI Cleanup project and works as a "skill file" for Claude, though it may not imp...


Google's New Protocol Secures AI Agent Deals, Backed by 60 Firms

Google has launched the Agent Payments Protocol (AP2), an open standard backed by over 60 companies to ensure secure and reliable AI-driven transactions. AP2 uses cryptographically signed Mandates to authorize purchases with user consent, supporting various payment methods and ensuring accountabi...


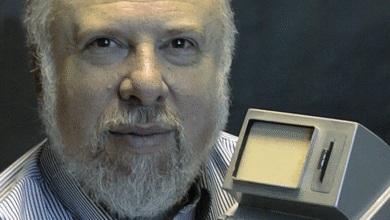

Jef Raskin's Vision: The Quest for a Humane Computer

Jef Raskin, a pioneer in human-computer interaction, believed interfaces should align with human cognitive patterns to reduce mental effort rather than focus only on visual design. Raskin was the original force behind the Macintosh project at Apple, blending computer science and visual arts to bring a u...
