visionOS 26.4 Unlocks Foveated Streaming for Apps & Games

Summary

– visionOS 26.4 beta 1 brings foveated streaming to Apple Vision Pro, with support for NVIDIA CloudXR.

– This technology allows apps and games to stream high-resolution, low-latency immersive content from a remote computer or server.

– Foveated streaming improves performance by transmitting high-quality visuals only to the approximate region where the user is looking.

– It lets developers combine streamed, processor-intensive environments with native spatial content rendered on the headset, such as a car’s dashboard gauges.

– The feature aims to make it easier to port existing VR applications to the Vision Pro and to enhance native visionOS apps.

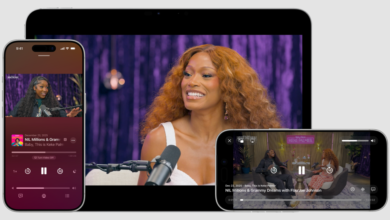

The first visionOS 26.4 beta adds foveated streaming support for apps and games on Apple Vision Pro. The update, which also supports new video features in Apple Podcasts, changes how demanding content can reach the headset: using solutions such as NVIDIA CloudXR, apps can stream immersive, high-resolution visuals to the device with minimal latency.

According to Apple’s documentation, the new framework lets developers take existing virtual reality apps, games, or experiences built for powerful desktop computers or cloud servers and stream them to Apple Vision Pro. The core idea is foveated streaming, which spends bandwidth and processing where they matter most: it streams high-quality detail only to the approximate area where the user is looking and sends the periphery at lower resolution. Because the eye resolves fine detail only near the point of gaze, this saves a large share of bandwidth with little perceptible loss, which helps keep performance smooth and latency low. Both are critical for comfortable, immersive extended reality.
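The allocation described above can be illustrated with a small model. The sketch below is not Apple’s or NVIDIA CloudXR’s API, and the band radii and scales are made-up assumptions: it treats the frame as a unit square split into tiles, gives each tile a resolution scale based on its distance from an approximate gaze point (full resolution near the gaze, half and quarter resolution further out), and estimates what fraction of full-resolution pixels would actually be sent. `FoveationConfig`, `foveation_map`, and `pixel_fraction` are hypothetical names.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class FoveationConfig:
    """Hypothetical foveation bands; radii are in normalized frame units (frame = unit square)."""
    fovea_radius: float = 0.15
    mid_radius: float = 0.35
    # Linear resolution scale per band: 1.0 means full resolution.
    fovea_scale: float = 1.0
    mid_scale: float = 0.5
    periphery_scale: float = 0.25


def tile_scale(center: Tuple[float, float], gaze: Tuple[float, float],
               cfg: FoveationConfig) -> float:
    """Pick a resolution scale for one tile from its distance to the approximate gaze point."""
    d = math.dist(center, gaze)
    if d <= cfg.fovea_radius:
        return cfg.fovea_scale
    if d <= cfg.mid_radius:
        return cfg.mid_scale
    return cfg.periphery_scale


def foveation_map(cols: int, rows: int, gaze: Tuple[float, float],
                  cfg: FoveationConfig = FoveationConfig()) -> List[List[float]]:
    """Build a rows x cols grid of per-tile resolution scales, using each tile's center."""
    return [
        [tile_scale(((c + 0.5) / cols, (r + 0.5) / rows), gaze, cfg) for c in range(cols)]
        for r in range(rows)
    ]


def pixel_fraction(grid: List[List[float]]) -> float:
    """Share of full-resolution pixels sent; a tile at linear scale s costs s**2 of a full tile."""
    scales = [s for row in grid for s in row]
    return sum(s * s for s in scales) / len(scales)


if __name__ == "__main__":
    grid = foveation_map(10, 10, gaze=(0.5, 0.5))
    print(f"{pixel_fraction(grid):.4f}")  # 0.1525 with these made-up thresholds
```

With the gaze centered on a 10 × 10 grid, 4 tiles land in the full-resolution band, 28 in the half-resolution band, and 68 in the periphery, so only about 15% of the full-resolution pixel count is transmitted. Concentric bands are the simplest choice; a real system would tune the falloff to the display, the eye tracker’s accuracy, and the network.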

A major benefit of this approach is the ability to blend streamed content with native spatial elements. Developers can overlay interactive, locally rendered components, built with Apple’s RealityKit framework, on top of the streamed environment. In a racing game, for instance, the detailed dashboard gauges inside the car could be rendered natively on the Vision Pro while the demanding outdoor track scenery is streamed from a remote computer. This hybrid model balances visual fidelity against on-device resources: the headset renders the small, interactive elements, and the remote machine carries the heavy scenery.
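To make the layering concrete, here is a deliberately simplified 2D model of placing a local overlay on a streamed frame. It is an assumption-laden illustration, not how visionOS or RealityKit actually combine the two (real spatial compositing also has to account for depth and head pose), and it uses no Apple API. It applies the standard Porter-Duff “over” operator with premultiplied alpha: where the local layer is opaque, such as the gauges, it wins; where it is transparent, the streamed scenery shows through. The `over` and `composite` names are hypothetical.

```python
from typing import List, Tuple

# An RGBA pixel with premultiplied alpha; every channel is in [0.0, 1.0].
Pixel = Tuple[float, float, float, float]


def over(local: Pixel, streamed: Pixel) -> Pixel:
    """Porter-Duff 'over': draw a locally rendered pixel on top of a streamed pixel.

    With premultiplied alpha: out = local + (1 - local_alpha) * streamed.
    """
    k = 1.0 - local[3]
    r, g, b, a = (l + k * s for l, s in zip(local, streamed))
    return (r, g, b, a)


def composite(local_layer: List[List[Pixel]],
              streamed_frame: List[List[Pixel]]) -> List[List[Pixel]]:
    """Composite a local overlay (e.g. gauges) over a streamed frame (e.g. track scenery)."""
    if len(local_layer) != len(streamed_frame) or any(
        len(a) != len(b) for a, b in zip(local_layer, streamed_frame)
    ):
        raise ValueError("layers must have the same dimensions")
    return [
        [over(lp, sp) for lp, sp in zip(local_row, streamed_row)]
        for local_row, streamed_row in zip(local_layer, streamed_frame)
    ]


if __name__ == "__main__":
    sky = (0.2, 0.4, 0.8, 1.0)    # opaque streamed scenery pixel
    gauge = (1.0, 0.0, 0.0, 1.0)  # opaque locally rendered gauge pixel
    clear = (0.0, 0.0, 0.0, 0.0)  # no local content at this pixel
    glass = (0.5, 0.5, 0.5, 0.5)  # half-transparent local pixel (premultiplied)
    print(composite([[gauge, clear, glass]], [[sky, sky, sky]]))
```

Premultiplied alpha keeps the operator to a single multiply-add per channel, which is why compositors commonly prefer it.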

This integration with established third-party solutions like NVIDIA CloudXR suggests a strategic move to broaden the Vision Pro’s software ecosystem. It lowers the barrier for developers who have already created content for other VR and computing platforms, making it more straightforward to port those experiences to Apple’s spatial computer. The potential extends beyond ports, however, unlocking new possibilities within native visionOS applications as well.

Consider a flight simulator app. With this technology, it could render a detailed, interactive cockpit natively while streaming a vast, processor-intensive landscape from the cloud. That would make possible virtual worlds far richer and more complex than the headset could render on its own. It remains to be seen whether Apple’s own first-party apps will adopt the technology, but the framework gives third-party developers a clear, supported path to follow.

The introduction of foveated streaming in visionOS 26.4 marks a pivotal step in turning the Vision Pro from a powerful standalone device into a versatile hub that can also tap remote computing power. If developers embrace it, the result should be more sophisticated, visually impressive, and complex applications, and a considerably richer content library and user experience for the platform.

(Source: 9to5Mac)