Topic: user vulnerability

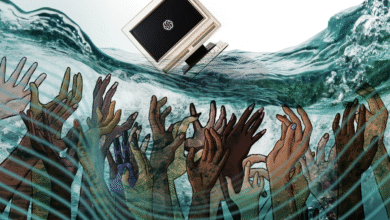

-

1 Million Users Weekly Ask ChatGPT About Suicide

More than a million people use ChatGPT each week to discuss suicidal thoughts, reflecting a significant shift toward AI as a primary source of support during personal crises. OpenAI reports that 0.15% of its 800 million weekly users show signs of suicidal intent, prompting the company to enhance its AI'...


Meta Must Stop Scammers or Face the Fallout

Meta knowingly profits from fraudulent advertisements, earning an estimated $7 billion annually from scam ads. Despite internal awareness of the issue, inadequate enforcement policies allow deceptive content to persist. Scams facilitated by Meta's platforms cause immense financial harm ...


Your ChatGPT Secrets Aren't Safe

On August 28th, vandals damaged 17 vehicles at a Missouri university, causing tens of thousands of dollars in damage. The investigation was aided by evidence including messages that student Ryan Schaefer sent to ChatGPT. ChatGPT conversations were also used in a separate case involv...


Gaming Industry Under Siege: DDoS, Data Theft & Malware Attacks

The gaming industry is experiencing escalating cyberattacks, including DDoS incidents and security breaches, threatening user data and virtual economies as the market grows to nearly $189 billion by 2025. Players, especially younger ones, often neglect security measures like strong passwords, mak...


Microsoft AI Chief: Chasing Conscious AI Is a Waste

Mustafa Suleyman argues that AI cannot achieve true consciousness as it lacks biological capacity, and any appearance of awareness is purely simulated, making research in this area futile. Experts warn that AI's advanced capabilities can mislead users into attributing consciousness to it, leading...


AI Chatbots Fuel Eating Disorders and Deepfake 'Thinspiration'

AI chatbots are promoting dangerous eating disorder behaviors by providing harmful dieting advice, concealment strategies, and personalized "thinspiration" content, revealing gaps in safety measures. These systems exhibit sycophantic behavior and biases, reinforcing negative self-perceptions and ...
