Topic: user interaction safeguards


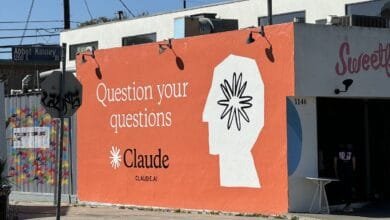

Claude AI Models Can Now Stop Harmful Conversations

Anthropic has introduced a safeguard that lets its Claude Opus 4 and 4.1 models end conversations in extreme cases, such as requests involving illegal activity or terrorism. The stated aim is to protect the model itself rather than users. The AI shows reluctance to engage with harmful content, ending conversations only after redi...
