Topic: Prompt engineering

6 OpenAI Staff Tips to Master ChatGPT

Ask complex, challenging questions to push the model into deeper reasoning and elicit more sophisticated, detailed explanations. Assign specific personas or expertise levels to ChatGPT to tailor its responses, enhancing relevance and depth for both specialized and everyday topics. Regularly audit...

Hero's New SDK Automates Your AI Prompts

Many users struggle with writing effective AI prompts, leading to the emergence of specialized roles like prompt engineers and the integration of user-friendly features such as auto-generated recommendations in consumer applications. Hero, a startup founded by ex-Meta employees, has developed an ...

AI's New Jobs: Forensic Vibers and 10 Other Future Roles

AI integration is creating new professions that blend technical skills with ethical oversight, strategic thinking, and psychological insight to ensure systems are productive and aligned with goals. Over 85% of American workers expect prompt-writing skills to become essential, with younger generat...

5 Prompt Engineering Tips for Better Vibe Coding

Vibe coding uses natural language descriptions to generate functional code with AI, making software development more accessible to non-experts but requiring clear communication for success. Effective prompt engineering involves using concise, specific instructions and breaking complex projects in...

Prompting Generative AI Chat Models: A Comprehensive Guide

Explore the world of generative AI chat models with our comprehensive guide. Learn how to effectively prompt these tools for applications in content creation, education, and more, while understanding their unique capabilities and limitations.

Future-Proof Your Career: 5 Ways to Thrive in the AI Era

The key to thriving with AI is not competing with it, but leveraging it while amplifying uniquely human skills like relational intelligence, creative judgment, and managing ambiguity. Professionals must take ownership of complete outcomes by mastering prompt engineering, verifying AI outputs, and...

Ultimate Prompting Guide for Large Language Models

Large Language Models (LLMs) like ChatGPT, Claude, Gemini, and others have revolutionized how we interact with artificial intelligence. These powerful tools can write, analyze, code, brainstorm, and solve complex problems, ...

Developers: Leverage AI to Stay Ahead of the Competition

AI integration in software development is seen as a tool to enhance developers' capabilities, not replace them, requiring strategic adaptation to work smarter. Prompt engineering has become essential, blending technical and critical thinking skills to effectively leverage AI while maintaining cod...

AI Isn't Ready to Out-Surf You on the Web, Yet

AI-powered browsers promise to simplify web tasks but currently require significant effort, as users must master precise prompting and often face inconsistent results from chatbots that misunderstand intent. These tools are most effective for specific, contained tasks like summarizing webpages or...

Speed vs. Credibility in AI Content Creation

AI-generated content requires credibility and trustworthiness to succeed, with structured, helpful content performing better in AI-driven search environments. Organizations should establish AI usage policies to ensure consistency, accountability, and protection of proprietary information across t...

Simple Prompt vs. Agent Workflow: Stop Overthinking AI

Success with generative AI depends on selecting the right approach for the task, such as choosing between a simple prompt or an agent workflow to avoid wasted effort. A recent MIT study found that 95% of generative AI projects fail, highlighting the need for thoughtful planning and matching the m...

7 Custom GPTs to Automate Your SEO Workflow

Custom GPTs can boost SEO productivity by automating repetitive tasks into structured workflows, with adaptable prompts serving as a foundation even without paid subscriptions. Effective AI collaboration requires practice, iterative refinement, and clear instructions, including strict formatting ...

Grok's "Apology" for Non-Consensual Images Falls Short

The Grok AI incident demonstrates that large language models are not sentient; their contradictory outputs are merely pattern-matching responses to prompts, not genuine expressions of belief or corporate policy. Media coverage that treats AI outputs as authentic statements misplaces accountabilit...

Ahrefs AI Test Reveals a Surprising Truth About Misinformation

The experiment found that AI models, when lacking clear authority signals, prioritize detailed and specific content that directly answers a query, even if that content is fabricated, over vague or non-committal official sources. The test's design, using a fictional brand with no real digital hist...

Vibe-Coding: A Beginner's Guide to Innovation

Vibe coding enables individuals to create software by describing their vision in plain language, with AI handling the actual programming, making development accessible without traditional coding skills. AI tools, from beginner-friendly chatbots to advanced platforms, generate code based on detail...

Context Engineering: The Future of Coding

Context engineering is a systematic approach to structuring comprehensive inputs for LLMs, surpassing traditional prompt engineering by integrating documents, data, and tools for complex tasks. Industry leaders highlight context engineering as developer-centric, requiring pipelines that pull from...
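
A minimal sketch of the "pipeline that pulls from" several sources, assuming each source arrives as plain text with a priority. The `ContextSource` type, its `priority` field, and the character budget are illustrative assumptions, not any vendor's API: sources are packed into labeled sections in priority order until the budget is spent, and the task is appended last.

```python
from dataclasses import dataclass


@dataclass
class ContextSource:
    name: str       # section label, e.g. "docs", "data", "tools" (illustrative)
    content: str    # text pulled from that source
    priority: int   # lower number = included first


def assemble_context(task: str, sources: list[ContextSource], max_chars: int) -> str:
    """Pack sources into labeled sections by priority, then append the task.

    Sources whose section would push the total past max_chars are skipped;
    the task itself is always appended and is not counted against the budget.
    """
    parts: list[str] = []
    used = 0
    for src in sorted(sources, key=lambda s: s.priority):
        section = f"## {src.name}\n{src.content}\n"
        if used + len(section) > max_chars:
            continue  # too large for the remaining budget; try the next source
        parts.append(section)
        used += len(section)
    return "".join(parts) + f"## task\n{task}"


sources = [
    ContextSource("tools", "search_orders(customer_id) returns recent orders", 2),
    ContextSource("docs", "Refunds are allowed within 30 days.", 1),
    ContextSource("data", "x" * 500, 3),  # oversized placeholder that gets dropped
]
print(assemble_context("Can customer 42 get a refund?", sources, max_chars=200))
```

Skipping whatever overflows is only one simple packing policy; truncating or summarizing lower-priority sources are common alternatives.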

Read More » -

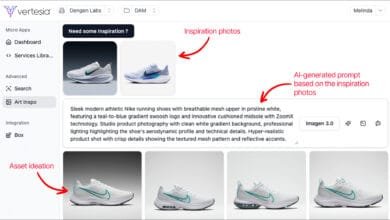

Adobe Firefly: Your Complete Guide to Adobe's AI Design Tool

Adobe has integrated AI into its Creative Cloud ecosystem through Adobe Sensei and now offers Adobe Firefly, a dedicated generative AI platform that works within existing workflows. Adobe Firefly provides diverse creative tools including text-to-image generation, sketch-to-image conversion, and g...

Boost Your PPC IQ with AI

AI is becoming central to PPC advertising, requiring marketers to provide precise inputs and strategic oversight for optimal results. Effective use of AI depends on detailed prompting and iterative refinement, as outputs vary and need human verification for accuracy. Privacy protection is crucial...

Wikipedia Group's AI Detection Guide Now Powers a 'Humanizing' Chatbot Plug-In

A new open-source tool called Humanizer uses a Wikipedia community's guide to help AI like Claude write more naturally by avoiding 24 identified chatbot language patterns. The tool is based on a list from Wikipedia's AI Cleanup project and works as a "skill file" for Claude, though it may not imp...

Wikipedia Volunteers Catalogued AI Fakes. Now a Plugin Blocks Them.

A new open-source tool called "Humanizer" helps AI-generated text appear more natural by instructing models to avoid 24 specific language patterns identified by Wikipedia volunteers as signs of automated writing. The tool was made possible by a detailed list of AI writing indicators compiled by W...

Anthropic Is Quietly Winning the Enterprise AI Race

Anthropic has become the dominant enterprise AI vendor, capturing 40% of large language model spending, largely driven by its leading role in the $4 billion coding automation tools market. The enterprise AI market is rapidly expanding and shifting toward purchasing ready-made applications, with 7...

AI Email Subject Lines That Boost Revenue & Conversions

AI email subject line optimization uses data analysis and continuous testing to predict engagement and drive opens, clicks, and conversions, moving beyond simple idea generation. Effective implementation requires clean, organized contact data and structured prompts to ensure personalized, brand-a...

Humanize AI Content: Rank Higher & Engage Readers

Content humanization is the essential process of refining AI-generated text to make it feel authentic, engaging, and trustworthy by injecting clarity, warmth, and personal experience. An effective strategy leverages AI for scale and speed but relies on human editing for creativity, brand storytel...

AI Email Content That Converts Leads

AI is transforming email marketing by enabling data-driven, personalized content suggestions for subject lines, body copy, and CTAs, moving beyond intuition-based campaigns. For optimal results, AI tools must be integrated with CRM systems to leverage customer data and require strategic human ove...

AI Agents Are Coming: An SEO Expert's Survival Guide

The core role of SEO is shifting from optimizing for search engines to becoming a strategic interface between businesses and technology, driven by the rise of AI agents. SEO professionals should start by creating custom AI assistants (like Gemini Gems) and learn to chain them into automated workf...

Bring Your Child's Art to Life with AI: A Fun Learning Activity

AI can be used as a collaborative tool to enhance children's original artwork, transforming it into a fun, educational activity that teaches digital skills while keeping the child as the primary creative force. Specific platforms like ChatGPT, Gemini, and Sora allow children to add dimension, rei...

Scale Your Brilliance, Not Your Mediocrity: A Guide to Smart AI

Generative AI in marketing requires strategic integration to enhance human creativity rather than replace it, moving beyond initial hype to practical application. Over-reliance on AI can lead to cognitive debt, reducing critical thinking and problem-solving abilities, while unguided use accelerat...

Will AI Coding Tools Ever Achieve Full Autonomy?

AI coding tools enhance productivity by automating routine tasks but face significant barriers to full autonomy, such as struggles with large-scale codebases and nuanced reasoning. Current AI systems often produce flawed fixes due to an inability to replicate human intuition, contextual knowledge...

Apple's Most Underrated App Just Got a Major Upgrade

Apple's Shortcuts app now integrates Apple Intelligence, enabling users to create custom workflows with on-device AI for complex tasks and enhanced productivity. The update introduces AI-powered text actions like proofreading, summarizing, and generating images, with the versatile "Use Model" act...

Top Tech Skills Employers Want, According to McKinsey

Python is the most in-demand tech skill, with talent pools meeting only half of corporate demand, especially in AI, semiconductor, and cloud projects. Emerging fields like quantum computing face severe talent shortages (5:1 imbalance), while prompt engineering and blockchain expertise currently e...

RAG: The Essential AI Tool Marketers Need to Know

Retrieval-Augmented Generation (RAG) enhances AI outputs by integrating targeted external data, addressing issues like hallucinations and generic responses in marketing applications. RAG's success depends on high-quality, structured data, including machine-readable inputs and precise retrievabili...
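
To make the retrieval step concrete, here is a minimal sketch that ranks passages by bag-of-words cosine similarity and prepends the best matches to the prompt. Production RAG systems usually rely on embedding models and a vector store instead; the function names and sample marketing passages below are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase word tokens; a real system would compare embeddings instead
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two bag-of-words count vectors
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    # Rank passages by similarity to the query and keep the top k
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_rag_prompt(query: str, docs: list[str], k: int = 2) -> str:
    # Prepend the retrieved passages so the model answers from supplied data
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (
        "Answer using only the context below. "
        "If the context does not contain the answer, say so.\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


docs = [
    "Our spring campaign targets first-time buyers with a 10% discount.",
    "The brand voice is friendly, concise, and avoids jargon.",
    "Office hours are 9am to 5pm on weekdays.",
]
print(build_rag_prompt("What discount does the spring campaign offer?", docs, k=1))
```

The instruction to answer only from the supplied context is what targets hallucinations; the quality of the passages in `docs` still determines whether the answer is right.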

23 Must-Know AI Terms: Your Essential ChatGPT Glossary

autonomous agents: An AI model that has the capabilities, programming and other tools to accomplish a specific task.
large language model, or LLM: An AI model trained on massive amounts of text data to understand language and generate novel content in human-like language.
multimodal AI: A type of AI that can process multiple types of inputs, including text, images, videos and speech.
tokens: Small bits of written text that AI language models process to formulate their responses to your prompts.
we...

Become an AI Data Trainer: Salary & How to Start

AI data training has evolved into a sophisticated, high-paying career requiring deep domain expertise, with salaries ranging from approximately $65,000 to over $180,000 annually. The role involves shaping AI models through tasks like data curation, labeling, quality checks, and providing feedback...

4 AI Agents Rebuild Minesweeper: Explosive Results

The experiment tested four leading AI coding agents (OpenAI Codex, Claude Code, Gemini CLI, Mistral Vibe) by having them autonomously build a fully functional web version of Minesweeper, including standard features and a novel gameplay twist. A key condition was the "single shot" approach, where ...

AI Models Are Disrupting SEO Workflows

Advanced AI models like Claude Opus 4.5 and Gemini 3 Pro are performing worse on core SEO tasks than their predecessors, showing a significant drop in accuracy. This decline is due to a shift in model design toward deep reasoning and agentic workflows, which introduces complexity and latency for ...

Read More » -

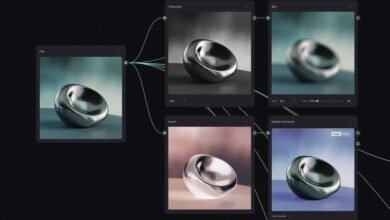

Figma's New AI App Combines Multiple Models & Tools

Figma has acquired Weavy to integrate advanced AI capabilities into its design environment, launching the new Figma Weave platform that combines AI models and editing tools in a unified workspace for more efficient digital content creation. The platform uses a node-based system that enables intri...

Scale Document Analysis with Vision Language Models

Vision Language Models (VLMs) merge visual and textual interpretation, enabling advanced document analysis by understanding the interplay between text placement and imagery. VLMs excel in tasks requiring visual context, such as identifying checked documents or interpreting screen contents, where ...

Risotto Secures $10M Seed to Simplify Ticketing With AI

The help desk automation sector is seeing innovation from AI-focused startups like Risotto, which secured $10 million in seed funding to autonomously resolve support tickets, challenging established players. Risotto's competitive advantage lies in its sophisticated infrastructure, including promp...

AI Coding Is Everywhere, But Is It Trusted?

AI adoption in software development is accelerating, yet a significant trust gap persists as developers balance optimism with awareness of the tools' current limitations and risks. Despite growing distrust, usage of AI coding assistants has surged, with experienced users often becoming more enthu...

The Hidden Dangers of Vibe Coding That Could Ruin Your Business

Vibe coding enables developers to program using plain English instructions, making application building faster and more accessible but often at the cost of code quality, security, and maintainability. Experts warn that over-reliance on AI-generated code can lead to inconsistent quality, rapid acc...

Top Free AI Courses & Certifications for 2025 (Tested & Reviewed)

The article highlights the availability of free AI courses and certifications in 2025, covering essential topics like machine learning and neural networks for skill development. The author shares extensive personal experience in AI, including developing early expert systems, a generative AI tool ...

Prompt Ops: How to Cut Hidden AI Costs from Poor Inputs

Optimizing AI inputs reduces costs by minimizing computational expenses tied to token processing, as inefficient prompts lead to higher energy use and operational overhead. Clear, structured prompts improve efficiency by guiding models to concise outputs and avoiding unnecessary verbosity...
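
The cost arithmetic behind this claim can be sketched as follows. The per-million-token prices and the call volume are made-up placeholders, and `estimate_tokens` uses the rough "about 4 characters per token" rule of thumb for English text; real counts come from the model's own tokenizer.

```python
def estimate_tokens(text: str) -> int:
    # Rough heuristic: about 4 characters per token in English text.
    # Actual counts depend on the model's tokenizer.
    return round(len(text) / 4)


def monthly_cost(prompt_tokens: int, output_tokens: int, calls_per_month: int,
                 input_price_per_m: float, output_price_per_m: float) -> float:
    # Prices are quoted per million tokens (placeholder values, not real rates).
    per_call = (prompt_tokens * input_price_per_m
                + output_tokens * output_price_per_m) / 1_000_000
    return per_call * calls_per_month


# Hypothetical comparison: a verbose prompt vs. a tightened one.
verbose = monthly_cost(1200, 800, 100_000, input_price_per_m=2.50, output_price_per_m=10.00)
concise = monthly_cost(400, 300, 100_000, input_price_per_m=2.50, output_price_per_m=10.00)
print(f"verbose: ${verbose:,.2f}  concise: ${concise:,.2f}")
```

Under these placeholder numbers, trimming the prompt from 1,200 to 400 tokens and the output from 800 to 300 tokens cuts the monthly bill from $1,100 to $400. Because output tokens are priced higher here, asking for concise answers saves more per token than shortening the prompt.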

Top Free AI Courses & Certifications for 2026 (All Tested)

The landscape of free AI education is robust, with essential courses and certifications from reputable sources offering practical knowledge for career advancement. Top-tier programs from universities and tech companies provide certifications and cover critical areas like machine learning, data et...

IEEE Summit Empowers STEM Educators

The IEEE STEM Summit was a free virtual event that connected nearly 1,000 global educators and volunteers to share practical strategies for inspiring student passion in STEM fields. The summit's central themes focused on building a sustainable future and the transformative role of artificial inte...
