Runway Unveils Its First World Model and Adds Native Audio to Its Latest Video Model

Summary

– Runway has launched its first world model, GWM-1, which uses frame-by-frame prediction to create simulations that understand physics and real-world behavior.

– The company positions GWM-1 as more general than competitors like Google’s Genie-3, aiming to create simulations for training agents in domains like robotics and life sciences.

– Runway released three specialized versions of the model: GWM-Worlds for interactive scene generation, GWM-Robotics for synthetic training data, and GWM-Avatars for simulating human behavior.

– The company also updated its Gen 4.5 video model, adding features like native audio and long-form, multi-shot generation for more complex and consistent videos.

– Runway plans to eventually merge its specialized world models into one and will make GWM-Robotics available via an SDK, with active talks underway for enterprise use.

Competition in artificial intelligence is intensifying with the introduction of Runway’s first world model, GWM-1. Runway presents the system as a step beyond simple video generation: a model that simulates real-world physics and behavior over time. Rather than being trained on every conceivable real-life scenario, a world model learns an internal simulation of how the world functions. That foundation is meant to let it reason, plan, and act with a grasp of physical principles, which opens doors for applications in complex fields like robotics and scientific research.

Runway positions GWM-1 as a more general-purpose simulation tool than alternatives such as Google’s Genie-3. The company’s approach rests on the belief that teaching models to predict pixels directly is the most effective path to a broad understanding of the world. According to Runway’s CTO, building a powerful video model was a necessary precursor to this work. The company's view is that, at sufficient scale and with the right data, this method yields a system with a robust comprehension of real-world dynamics.

The launch is structured around three specialized applications of the core technology: GWM-Worlds, GWM-Robotics, and GWM-Avatars. Each serves a distinct purpose, though the long-term vision is to integrate them into a single, unified model.

GWM-Worlds functions as an interactive application. Users can establish a scene using a text prompt or an image, and the model dynamically generates the environment as they explore it. Generation is informed by an understanding of geometry, physics, and lighting, and runs at 24 frames per second at 720p resolution. While it has clear potential for gaming, Runway emphasizes its value in training AI agents to navigate and interact within simulated physical spaces.
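To make the idea of frame-by-frame generation concrete, here is a toy Python sketch of an autoregressive rollout, in which each new frame is predicted from the previous frame and the user's latest action. It is only an illustration: Runway has not published GWM-1's internals here, and `Frame`, `predict_next`, and `rollout` are hypothetical names. The only figure taken from the article is the 24 frames per second rate.

```python
from dataclasses import dataclass

FPS = 24  # frame rate quoted in the article


@dataclass(frozen=True)
class Frame:
    """Toy stand-in for a generated frame: a camera position on a 1-D track."""
    x: float


def predict_next(prev: Frame, action: float) -> Frame:
    """Hypothetical one-step predictor (not Runway's model).

    The next frame depends only on the previous frame and the user's
    action, here interpreted as a velocity in units per second.
    """
    return Frame(x=prev.x + action / FPS)


def rollout(first: Frame, actions: list[float]) -> list[Frame]:
    """Generate frames one at a time, feeding each prediction back in."""
    frames = [first]
    for action in actions:
        frames.append(predict_next(frames[-1], action))
    return frames


# One second of "moving forward" at 1 unit per second.
frames = rollout(Frame(x=0.0), [1.0] * FPS)
```

A real world model would replace `predict_next` with a learned network operating on pixels, but the loop structure, where each output becomes the next input, is what "frame-by-frame prediction" refers to.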

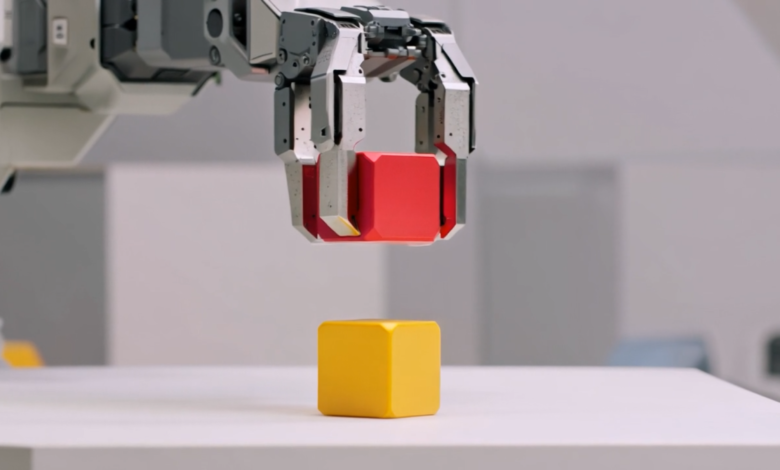

GWM-Robotics focuses on creating synthetic data to train robotic systems. This data can be enriched with variables like changing weather conditions or unexpected obstacles. This approach not only provides vast training datasets but can also help identify potential points where a robot might fail or violate its operational policies in diverse scenarios. Runway plans to make this technology available through a Software Development Kit (SDK) and is currently in discussions with multiple robotics companies and enterprises.
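The idea of varying conditions to surface failure cases can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the GWM-Robotics SDK, whose interface is not described in the article: `Scenario`, `sample_scenario`, `toy_policy_stops_in_time`, and `find_failures` are hypothetical names, and the braking distances are invented for the example.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    """One synthetic test case: a weather condition and an obstacle distance."""
    weather: str
    obstacle_distance_m: float


def sample_scenario(rng: random.Random) -> Scenario:
    """Draw a random scenario (hypothetical variable ranges)."""
    return Scenario(
        weather=rng.choice(["clear", "rain", "fog"]),
        obstacle_distance_m=rng.uniform(0.5, 10.0),
    )


def toy_policy_stops_in_time(scenario: Scenario) -> bool:
    """Stand-in for evaluating a robot policy: made-up braking distances per weather."""
    braking_distance_m = {"clear": 2.0, "rain": 3.5, "fog": 3.0}[scenario.weather]
    return scenario.obstacle_distance_m >= braking_distance_m


def find_failures(n: int, seed: int = 0) -> list[Scenario]:
    """Sample n scenarios and return those in which the toy policy fails."""
    rng = random.Random(seed)
    scenarios = [sample_scenario(rng) for _ in range(n)]
    return [s for s in scenarios if not toy_policy_stops_in_time(s)]


failures = find_failures(200)
```

In practice the scenarios would be rendered by the world model and the policy would be a real controller; the point of the sketch is only that randomized conditions turn failure discovery into a search over generated cases.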

The third pillar, GWM-Avatars, is dedicated to crafting realistic human avatars to simulate behavior. This area is already populated by companies like D-ID and Synthesia, with applications in communication and training. Runway’s entry aims to contribute highly realistic simulations of human interaction.

In a parallel development, Runway has rolled out a substantial update to its foundational Gen 4.5 video generation model. This enhancement introduces native audio and long-form, multi-shot generation capabilities. Users can now produce videos up to one minute in length featuring consistent characters, integrated dialogue, background sound, and complex, multi-angle shots. The update also allows for editing existing audio and modifying multi-shot videos of any duration.

This advancement brings Runway’s offerings closer to the all-in-one functionality seen in competitors like Kling, particularly in the areas of integrated audio and narrative sequencing. More broadly, it signals a maturation in the field, as video generation models evolve from experimental prototypes into production-ready creative and professional tools. The updated Gen 4.5 model is now accessible to all of Runway’s paid users.

(Source: TechCrunch)