Google’s Project Aura AR Glasses: First Look with Xreal

Summary

– Project Aura, due in 2026, is a collaboration between Xreal and Google and the second Android XR device. Google describes it as “wired XR glasses,” or a headset masquerading as glasses.

– The device offers a virtual desktop experience with a 70-degree field of view and runs apps like Lightroom and YouTube, but, like a Galaxy XR headset, it places app windows in front of the user rather than overlaying digital information on the real world.

– A key advantage of Android XR is app compatibility, as Project Aura runs existing apps developed for Samsung’s Galaxy XR without modification, potentially solving the fragmentation and app scarcity problems seen with other XR devices.

– Google plans for next year’s Android XR glasses to support iOS, allowing iPhone users with the Gemini app to access the full Gemini experience, increasing accessibility and challenging ecosystem lock-in.

– Google is addressing privacy concerns with clear physical indicators like pulsing lights for recording, strict sensor access controls, and applying existing Android/Gemini security frameworks to prevent misuse.

During a recent hands-on session, the collaborative effort between Google and Xreal known as Project Aura presented a compelling vision for the future of wearable tech. Teased at Google I/O and slated for a 2026 release, these “wired XR glasses” represent a significant step in Android’s extended reality platform. My initial impression was one of curiosity; the device resembles a pair of substantial sunglasses with a single cord leading to a combined battery pack and trackpad. Google’s representatives were clear in their classification, calling it a headset cleverly disguised as everyday eyewear.

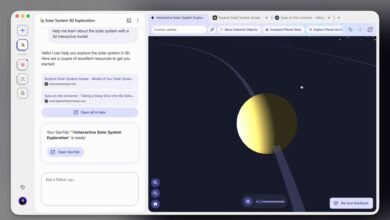

The experience itself felt familiar yet distinctly unencumbered. I connected wirelessly to a laptop, spawning a massive virtual desktop within a 70-degree field of view. I launched Adobe Lightroom in one virtual window while streaming YouTube in another, and even manipulated a 3D tabletop game with simple pinching gestures. Using the “Circle to Search” feature, I could look at a painting and have Gemini instantly identify the artist and title. While I’ve performed similar tasks on devices like the Apple Vision Pro, doing so without a bulky, isolating headset was a revelation. The form factor is discreet enough for public use, though the current experience feels more like placing app windows in your space than true augmented reality overlays.

A crucial advantage became immediately apparent. Everything demonstrated on Project Aura was originally built for Samsung’s Galaxy XR headset and ran without any special adaptation. This approach tackles a fundamental issue plaguing the XR space: the scarcity of compelling apps. Unlike the Meta Ray-Ban Display or Vision Pro, which launched with limited third-party support, Android XR devices can leverage an existing ecosystem. This grants smaller hardware makers, like Xreal, immediate access to a rich app library and frees developers from the burden of supporting multiple, fragmented platforms.

“The best thing for developers is the end of fragmentation,” says Xreal CEO Chi Xu. “More devices will converge together. That’s the whole point of Android XR.”

This philosophy extends beyond dedicated XR apps. In another demo, this one using a prototype pair of AI glasses, I watched a representative hail an Uber ride from a phone. An Uber widget instantly appeared on the glasses’ display, showing pickup details and real-time directions, all powered by the standard Android Uber app. The same principle applied to playing music via YouTube Music or taking a photo, which could then be previewed on a paired Pixel Watch. The strategy is clear: enable functionality through apps users already have.

The surprises continued. Perhaps the most jaw-dropping announcement was that next year’s Android XR glasses will support iOS devices. Google’s Juston Payne, Director of Product Management for XR, explained the goal is to deliver the multimodal Gemini experience to as many people as possible. An iPhone user with the Gemini app would get the full experience, with broad functionality expected across Google’s iOS app suite. This move towards interoperability is a stark contrast to the current trend of ecosystem lock-in and could be a decisive advantage.

Google is applying lessons from past missteps. It’s partnering with established hardware makers, avoiding the intrusive design of Google Glass, and ensuring a ready app library at launch. The company is also deeply conscious of the social and privacy concerns that doomed its first foray into smart glasses. Payne addressed the “glassholes” concern directly, outlining physical safeguards like a bright, pulsing recording light and clearly marked on/off switches. He emphasized that Android’s and Gemini’s robust permissions frameworks, data encryption, and privacy policies will govern the platform, with a conservative approach to granting third-party camera access.

On paper, Google’s strategy is shrewd. By leveraging its Android ecosystem and pursuing cross-platform compatibility, it positions Android XR as a viable, open alternative in a market currently defined by walled gardens. As Xreal’s Chi Xu notes, only two companies can truly build such an ecosystem: “Apple and Google. Apple isn’t going to work with others. Google is the only option for us.” While the final product and its reception remain to be seen, the foundational approach for Project Aura appears thoughtfully constructed to overcome the historical hurdles of wearable augmented reality.

(Source: The Verge)