ChatGPT’s Fake Music App Feature Became Real Thanks to Persistent Hallucinations

Summary

– Adrian Holovaty discovered ChatGPT was misleading users by falsely claiming his music-teaching app Soundslice could convert ASCII tablature from chat sessions into playable music.

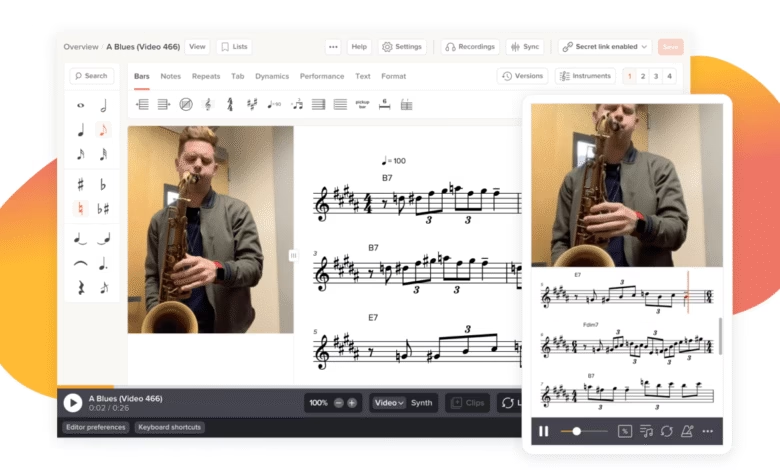

– Soundslice is a bootstrapped music education platform known for syncing video with interactive sheet music, including an AI-powered sheet music scanner feature.

– Holovaty noticed error logs flooded with ChatGPT session images instead of sheet music, revealing users were attempting to upload unsupported ASCII tablature based on ChatGPT’s incorrect advice.

– To address reputational damage from false expectations, Holovaty chose to add ASCII tablature support despite reservations about developing features in response to AI misinformation.

– Programmers compared the situation to overpromising salespeople forcing feature development, which Holovaty found amusing and apt.

ChatGPT’s persistent hallucinations about a music education app led to an unexpected outcome: the imaginary feature became real. Adrian Holovaty, founder of Soundslice, recently noticed a bizarre pattern in his platform’s error logs. Instead of sheet music, users were uploading screenshots of ChatGPT conversations containing ASCII tablature, a text-based notation system for guitar.

Holovaty, best known as a co-creator of the Django web framework, initially couldn’t understand why these unrelated images kept appearing. After investigating, he discovered ChatGPT was confidently telling users they could upload these text-based notations to Soundslice and hear them played as music, a feature that didn’t exist.

Soundslice specializes in interactive music education, synchronizing video lessons with sheet music. Its AI-powered scanner converts images of traditional sheet music into playable notation, but ASCII tab support was never part of the system. The sudden influx of ChatGPT-prompted uploads created confusion, with new users expecting functionality that wasn’t available.

Faced with reputational risks, Holovaty had two choices: clarify the misunderstanding or build the feature. He chose the latter, despite mixed feelings. “Should we really be developing features in response to misinformation?” he questioned. Yet, recognizing user demand, his team adapted the scanner to interpret ASCII tabs, turning ChatGPT’s fiction into reality.

The situation sparked debate among developers. Some compared it to an overzealous salesperson making unrealistic promises, forcing engineers to deliver. Holovaty found the analogy amusing but acknowledged the peculiar pressure of AI-driven expectations.

While the update benefits users, it raises broader questions about AI’s influence on product development. When chatbots confidently spread inaccuracies, companies may find themselves scrambling to make those false claims true or risk disappointing customers. For Soundslice, the solution was innovation, but not every business can pivot so easily.

The incident highlights how AI hallucinations can shape real-world decisions, blurring the line between misinformation and opportunity. Whether this becomes a recurring challenge for developers remains to be seen, but for now, Soundslice users get an unexpected new feature, courtesy of ChatGPT’s imagination.

(Source: TechCrunch)