OpenAI Safety Lead Joins Rival Anthropic

Summary

– Andrea Vallone, former head of OpenAI’s safety research on user mental health, has joined Anthropic’s alignment team.

– At OpenAI, she led research on how AI models should respond to signs of emotional over-reliance or mental health distress.

– Her work involved deploying models like GPT-4 and developing safety techniques such as rule-based reward training.

– Her move follows the departure from OpenAI of other safety researchers, such as Jan Leike, who voiced concerns that safety was being deprioritized.

– The AI industry faces controversy and lawsuits over chatbots’ role in mental health crises, a key problem safety teams must address.

A significant personnel shift within the artificial intelligence sector highlights the growing focus on a critical safety challenge: how AI systems should respond to users experiencing mental health distress. Andrea Vallone, who previously led OpenAI’s research into this sensitive area, has moved to rival company Anthropic. Her departure underscores the intense competition for top talent in AI safety and the escalating pressure on firms to address the real-world consequences of their technologies.

At OpenAI, Vallone spent three years building the model policy research team. Her work centered on the responsible deployment of advanced models like GPT-4 and on the development processes for GPT-5. She also contributed to foundational safety techniques, including rule-based reward systems. Her specific focus, however, became the ethically fraught question of how chatbots should interact with emotionally vulnerable users, a domain with few established guidelines.

In a recent LinkedIn post, Vallone reflected on this work, writing that she led research on the question of “how should models respond when confronted with signs of emotional over-reliance or early indications of mental health distress?” This issue has moved from academic concern to front-page news, as tragic incidents have been linked to prolonged, unfiltered conversations with AI chatbots. Several families have filed wrongful death lawsuits, and the topic has been the subject of a U.S. Senate subcommittee hearing.

Vallone now joins the alignment team at Anthropic, a group dedicated to understanding and mitigating the most severe risks posed by AI. She will report to Jan Leike, another former OpenAI safety lead who left in 2024, publicly citing concerns that safety protocols were being deprioritized in favor of product development. Anthropic has publicly emphasized its commitment to tackling these behavioral challenges, with alignment leader Sam Bowman noting pride in “how seriously Anthropic is taking the problem of figuring out how an AI system should behave.”

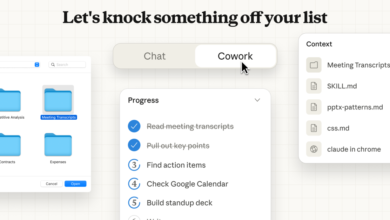

In her announcement, Vallone expressed eagerness to continue her research at Anthropic, focusing on “alignment and fine-tuning to shape Claude’s behavior in novel contexts.” Her move signals that for leading AI labs, establishing robust ethical guardrails is not just a theoretical exercise but a practical imperative, with top researchers actively choosing where they believe their work will have the greatest impact on user safety.

(Source: The Verge)