The Download: Lab-Mimicked Pregnancy and AI Parameters Explained

Summary

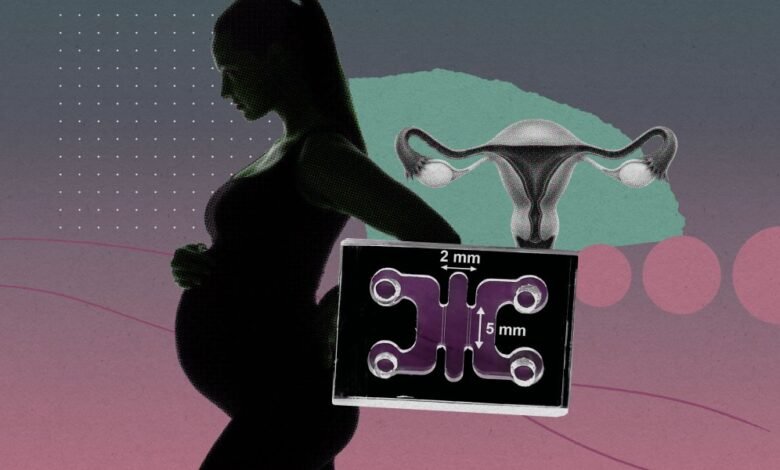

– Scientists in Beijing have successfully mimicked the first moments of human embryo implantation using a lab-created microfluidic chip and endometrial organoids.

– This research, detailed in recent Cell Press papers, represents the most accurate lab model of early pregnancy to date, using human embryos from IVF centers.

– In AI, a large language model’s parameters are the numerical values, adjusted during training, that determine how the model behaves; they are often compared to the dials or settings of a vast machine.

– The scale of these parameters is immense: OpenAI’s GPT-3 has 175 billion, and Google DeepMind’s Gemini 3 is estimated by some to have a trillion or more.

– AI companies have become secretive about their model specifics, like parameter counts, due to intense industry competition.

The process of human embryo implantation, the critical and complex biological event that marks the start of pregnancy, has been recreated outside the human body. In a series of experiments, scientists in Beijing observed this interaction between embryo and uterine tissue inside a microfluidic chip, using human embryos from IVF centers and lab-grown uterine lining tissue known as endometrial organoids. The research, detailed in three recent papers published by Cell Press, represents the most accurate lab model of early pregnancy to date. It offers an unprecedented window into a process that is difficult to study directly in the body because of ethical and technical constraints. Modeling implantation in such detail could substantially improve our understanding of early development, infertility, and pregnancy complications.

In the laboratory, researchers watched as a ball-shaped embryo (a blastocyst) made contact with and then burrowed into the endometrial organoid, while the initial structures of what would become the placenta began to form. This work provides a powerful new platform for scientific inquiry, potentially reducing reliance on animal models and opening new avenues for testing drugs and understanding why some pregnancies fail. Because scientists can now manipulate and observe these early stages in a controlled environment, the approach has significant implications for both reproductive medicine and developmental biology.

Shifting focus from biology to artificial intelligence, the inner workings of large language models (LLMs) like ChatGPT are often described in terms of their vast number of parameters. These parameters are the fundamental components that define a model’s knowledge and behavior. Imagine a colossal, planet-sized pinball machine with billions of precisely adjusted paddles and bumpers. For any given input, the path of the ball, representing how the model processes and generates language, is determined by the specific settings of these internal components. Each parameter is a numerical value that is adjusted during training on massive datasets, as the model learns patterns and associations in human language.
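To make the idea of a parameter being “adjusted during training” concrete, here is a minimal Python sketch of a toy model with just two parameters, `w` and `b`, fitted by plain gradient descent. The function names, the training data, and the learning rate are illustrative assumptions, not taken from any real LLM. Actual language models are trained with far more elaborate methods and billions of parameters, but the core idea is the same: repeatedly nudge numbers so that the model’s predictions become less wrong.

```python
# Toy "model" with two parameters, w and b, that predicts y = w * x + b.
# Illustrative only: names, data, and learning rate are hypothetical choices.


def predict(params: dict[str, float], x: float) -> float:
    """Compute the model's output for input x using its current parameters."""
    return params["w"] * x + params["b"]


def train(data: list[tuple[float, float]], steps: int = 2000, lr: float = 0.05) -> dict[str, float]:
    """Fit w and b to the data by gradient descent on mean squared error."""
    params = {"w": 0.0, "b": 0.0}  # parameters start at arbitrary values
    n = len(data)
    for _ in range(steps):
        # How much the error would change if each parameter were increased slightly
        grad_w = sum(2 * (predict(params, x) - y) * x for x, y in data) / n
        grad_b = sum(2 * (predict(params, x) - y) for x, y in data) / n
        # Turn each "dial" a little in the direction that reduces the error
        params["w"] -= lr * grad_w
        params["b"] -= lr * grad_b
    return params


# Training data generated from the rule y = 2x + 1
data = [(x, 2 * x + 1) for x in [0.0, 1.0, 2.0, 3.0, 4.0]]
learned = train(data)
print(learned)  # w ends up very close to 2.0 and b very close to 1.0
```

Nothing in the code tells the model the rule directly; the values of `w` and `b` emerge from training on examples, which is the sense in which an LLM’s parameters encode what it has learned.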

The scale of these models is staggering. OpenAI’s GPT-3, launched in 2020, was built with 175 billion parameters. The current generation of models is believed to be far larger. For instance, industry analysts speculate that Google DeepMind’s latest model, Gemini 3, may contain at least a trillion parameters, with some estimates suggesting the figure could be closer to seven trillion. In today’s highly competitive AI landscape, companies have become increasingly secretive about their architectures. Firms like Google and OpenAI no longer routinely disclose technical details such as parameter counts, treating this information as a proprietary advantage. This shift toward opacity makes it harder for the broader research community to understand, compare, and audit the capabilities of these powerful systems.
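One way to get a feel for these numbers is to estimate how much storage the parameters alone would occupy. The sketch below assumes each parameter is stored as a 16-bit (2-byte) floating-point number; that precision, the helper name `weights_size_gb`, and the labels are illustrative assumptions, and real deployments may use other formats such as 8-bit or 4-bit quantization. The Gemini 3 figures are the speculative estimates quoted above, not disclosed values.

```python
# Rough storage needed just to hold a model's parameter values.
# Assumption: 2 bytes per parameter (16-bit floats); real systems may differ.

BYTES_PER_PARAM = 2  # assumed 16-bit precision


def weights_size_gb(num_params: float, bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Return the size of the raw parameter values in gigabytes (10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


models = {
    "GPT-3 (reported)": 175e9,
    "Gemini 3 (low-end estimate)": 1e12,
    "Gemini 3 (high-end estimate)": 7e12,
}

for name, n in models.items():
    print(f"{name}: {weights_size_gb(n):,.0f} GB")
```

Under this assumption, GPT-3’s 175 billion parameters take about 350 GB, while a 7-trillion-parameter model would need about 14,000 GB (14 TB), before counting any additional memory required to actually run the model.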

(Source: Technology Review)