Spot AI-Generated Faces: Learn to Identify the Fakes

Summary

– AI-generated faces are now so realistic that even “super recognizers,” people with exceptional facial recognition skills, cannot reliably distinguish them from real photos.

– A short, five-minute training session on common AI rendering errors significantly improved detection accuracy for both super recognizers and typical individuals.

– Training boosted both groups by a similar amount and left the super recognizers’ lead intact, which suggests they rely on a different set of clues beyond common rendering errors to identify fakes.

– Researchers propose that future detection systems could combine AI algorithms with trained super recognizers in a “human-in-the-loop” approach.

– The study’s authors emphasize that taking time to scrutinize facial features is crucial, as trained participants slowed their analysis to achieve better accuracy.

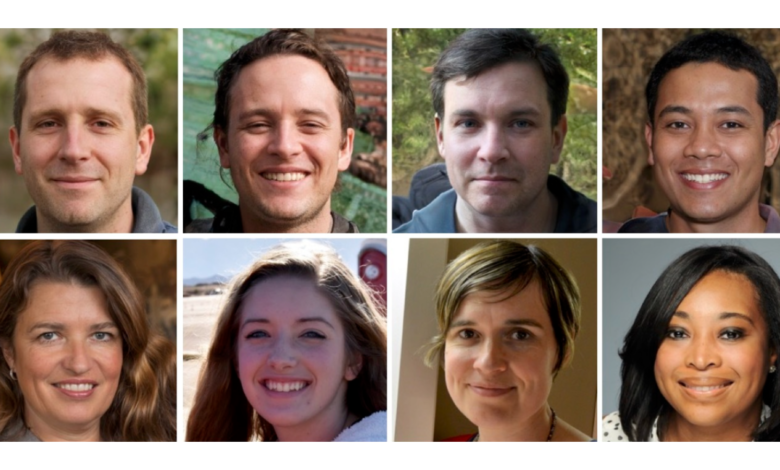

The ability to distinguish between real human faces and those generated by artificial intelligence has become a critical skill in the digital age. New research reveals that even individuals with exceptional facial recognition abilities struggle to identify AI-generated faces, often mistaking them for real people. A brief, targeted training session, however, can significantly boost anyone’s ability to spot these convincing fakes by teaching them to look for specific digital artifacts.

A study published in the journal Royal Society Open Science demonstrates the startling realism of modern AI imagery. Participants, including a group known as “super recognizers” who typically excel in facial processing tasks, performed no better than random chance when trying to identify computer-generated faces. People with average recognition skills fared even worse, incorrectly labeling AI faces as real more often than not. The encouraging finding was that a simple five-minute training module dramatically improved detection rates for everyone involved.

This training focused on common rendering flaws found in synthetic images. AI algorithms often produce subtle errors, such as oddly symmetrical features, strange hairline textures, unnaturally smooth skin, or even a “middle tooth.” Participants learned to scrutinize these details. After the short tutorial, super recognizers correctly identified 64% of fake faces, up from 41%, while typical recognizers improved from 30% to 51% accuracy.

Interestingly, the training helped both groups by a similar margin: roughly 23 percentage points for super recognizers and 21 for typical recognizers. Because super recognizers started from a higher baseline and kept that lead after training, the researchers suggest they may be using a different, perhaps more intuitive, set of clues beyond obvious rendering mistakes to assess authenticity. The study’s lead author noted that this points to a promising avenue for future detection methods, potentially combining AI algorithms with trained human observers, particularly those with superior recognition skills.
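To make the "similar margin" concrete, the gains can be worked out from the four accuracy figures quoted in this article. The short Python sketch below does only that arithmetic. The names `ACCURACY` and `gains` are illustrative, and the relative-gain figure is an extra calculation rather than a number reported by the study.

```python
# Detection accuracy on AI-generated faces, in percent, as quoted in this article.
# All names here are illustrative and do not come from the study.
ACCURACY = {
    "super recognizers": {"untrained": 41, "trained": 64},
    "typical recognizers": {"untrained": 30, "trained": 51},
}


def gains(untrained, trained):
    """Return (gain in percentage points, gain relative to the untrained score in percent)."""
    points = trained - untrained
    relative = 100 * points / untrained
    return points, relative


for group, scores in ACCURACY.items():
    points, relative = gains(scores["untrained"], scores["trained"])
    print(f"{group}: +{points} points ({relative:.0f}% relative gain)")

# Gap between the two groups without and with training, in percentage points.
gap_untrained = (ACCURACY["super recognizers"]["untrained"]
                 - ACCURACY["typical recognizers"]["untrained"])
gap_trained = (ACCURACY["super recognizers"]["trained"]
               - ACCURACY["typical recognizers"]["trained"])
print(f"gap without training: {gap_untrained} points, with training: {gap_trained} points")
```

The absolute gains come out at 23 and 21 percentage points, and the gap between the groups stays at 11 to 13 points. That persistent gap is what the researchers' interpretation rests on: training on rendering errors lifted both groups without erasing the super recognizers' advantage.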

The technology behind these hyperrealistic fakes, known as generative adversarial networks (GANs), works by pitting two AI systems against each other. One, the generator, produces an image, and the other, the discriminator, tries to detect whether it is fake. Through continuous iteration, the images become so refined that they can fool both machines and humans. They sometimes appear more proportionally “perfect” than actual human faces, a phenomenon called hyperrealism.
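The adversarial idea can be illustrated with a deliberately tiny toy, sketched below in plain Python. It is not the kind of network used to produce the faces in the study: single numbers stand in for images, the "real" data is assumed to be drawn from a bell curve centered on 4, and every name and setting (`train_toy_gan`, the learning rate, the step count) is hypothetical. The point is only the alternation: one model learns to tell real from fake, then the other adjusts its output to fool it.

```python
import math
import random


def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def train_toy_gan(steps=2000, batch=64, lr=0.05, seed=0):
    """Train a 1-D toy GAN; return generator (a, b) and discriminator (w, c) parameters."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator: fake = a * z + b, with z drawn from N(0, 1)
    w, c = 0.0, 0.0  # discriminator: P(real | x) = sigmoid(w * x + c)

    for _ in range(steps):
        real = [rng.gauss(4.0, 1.0) for _ in range(batch)]  # assumed stand-in for real data
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [a * z + b for z in noise]

        # Discriminator step: push D(real) toward 1 and D(fake) toward 0
        # (gradient of the binary cross-entropy loss with respect to w and c).
        d_real = [sigmoid(w * x + c) for x in real]
        d_fake = [sigmoid(w * x + c) for x in fake]
        grad_w = (sum((d - 1.0) * x for d, x in zip(d_real, real))
                  + sum(d * x for d, x in zip(d_fake, fake))) / batch
        grad_c = (sum(d - 1.0 for d in d_real) + sum(d_fake)) / batch
        w -= lr * grad_w
        c -= lr * grad_c

        # Generator step: adjust a and b so the updated discriminator
        # rates the fakes as real (gradient of -log D(fake)).
        d_fake = [sigmoid(w * x + c) for x in fake]
        grad_a = sum((d - 1.0) * w * z for d, z in zip(d_fake, noise)) / batch
        grad_b = sum((d - 1.0) * w for d in d_fake) / batch
        a -= lr * grad_a
        b -= lr * grad_b

    return (a, b), (w, c)


(gen_a, gen_b), (disc_w, disc_c) = train_toy_gan()
print(f"generator: scale={gen_a:.2f}, shift={gen_b:.2f}")
print(f"discriminator: weight={disc_w:.2f}, bias={disc_c:.2f}")
```

In a setup like this, the generator's shift tends to drift toward the center of the real data as the discriminator keeps pointing out the difference. Adversarial training can also oscillate, however, so no specific output is claimed here.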

In the experiments, participants viewed a series of faces, each displayed for ten seconds, and had to judge their authenticity. After training, people took more time to examine each image, slowing down by nearly two seconds on average. This deliberate scrutiny is a key takeaway: taking a moment to carefully inspect facial features is crucial for spotting AI-generated content.

It is important to note the study’s limitations. The effectiveness of the training was measured immediately afterward, so the longevity of the improvement is unknown. Experts commenting on the research also pointed out that because different people were tested before and after training, it’s difficult to quantify the exact improvement for any single individual. Future studies that retest the same participants over time will be needed to determine if such training offers a lasting defense against increasingly sophisticated digital forgeries.

(Source: Live Science)