OpenAI Seeks New Head of Preparedness to Lead Safety Efforts

Summary

– OpenAI is hiring a Head of Preparedness to study emerging AI risks, including impacts on mental health and cybersecurity vulnerabilities.

– The role involves executing a framework to track and prepare for frontier AI capabilities that could cause severe harm.

– OpenAI created its preparedness team in 2023 to study risks ranging from immediate threats like phishing to speculative ones like nuclear threats.

– Key safety personnel, including the former Head of Preparedness, have since been reassigned or left the company.

– The company’s updated framework states it may adjust its safety requirements if a competitor releases a high-risk model without similar protections.

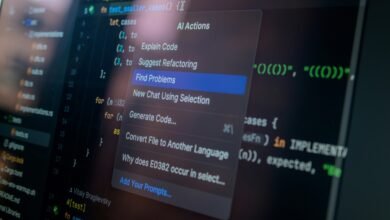

OpenAI is actively recruiting a new Head of Preparedness, a senior role dedicated to leading the company’s efforts in identifying and mitigating emerging risks associated with advanced artificial intelligence. This strategic hire underscores the organization’s commitment to proactive safety measures as AI capabilities grow more sophisticated. The position is central to executing the company’s Preparedness Framework, a systematic approach for tracking frontier AI developments that could pose severe threats.

In a recent social media post, CEO Sam Altman highlighted specific challenges that necessitate this focus. He pointed to AI models that are becoming adept at uncovering critical cybersecurity vulnerabilities, which could empower both defenders and malicious actors. Altman also addressed the potential impact of models on mental health, an area of increasing public concern. He framed the role as an opportunity to help shape how such powerful technologies are deployed responsibly, particularly in sensitive domains like cybersecurity and biotechnology.

The job listing clarifies that the Head of Preparedness will be tasked with operationalizing a framework designed to anticipate catastrophic risks. These risks span a wide spectrum, from immediate threats like enhanced phishing attacks to more speculative dangers such as nuclear threats. OpenAI formed its preparedness team in 2023 to study these very issues.

This recruitment drive follows internal shifts within OpenAI’s safety leadership. Less than a year after the preparedness team was created, Aleksander Madry, who led it, was reassigned to focus on AI reasoning research. This move is part of a broader pattern in which several safety executives have either left the company or moved into roles outside preparedness and safety.

Amid these organizational changes, OpenAI has also revised its official Preparedness Framework. A notable update indicates the company may adjust its own safety requirements if a rival lab releases a high-risk AI model without comparable safeguards. In practice, this means OpenAI’s safety standards could shift in response to what competitors do.

The concerns Altman referenced are not merely theoretical. Generative AI chatbots, including OpenAI’s ChatGPT, are under growing scrutiny regarding user well-being. Several recent legal actions allege that interactions with such AI have, in some instances, reinforced harmful delusions, increased feelings of social isolation, and contributed to tragic outcomes. In response to these allegations, OpenAI has stated it is continuously working to enhance ChatGPT’s ability to detect signs of emotional distress and direct users toward appropriate human support resources.

(Source: TechCrunch)