Optimize Images for Multimodal AI Search

▼ Summary

– Traditional image SEO practices like compression and alt text remain foundational, but the rise of multimodal AI has shifted focus toward optimizing for machine readability.

– AI models process images by converting them into visual tokens, requiring high-resolution, high-contrast images to avoid misinterpretation and hallucinations.

– Alt text now serves a new function called grounding, helping AI models resolve ambiguous visual tokens by providing clear semantic signposts.

– Image SEO now extends to physical product packaging, which must meet higher pixel and contrast standards for OCR readability than human legibility requires.

– AI analyzes the co-occurrence of objects and emotional sentiment in images, making visual context and emotional alignment new factors for search ranking.

For years, the playbook for image SEO focused on a few core technical tasks. You needed to shrink file sizes for faster loading, write descriptive alt text for accessibility, and implement lazy loading to keep performance scores healthy. These practices remain essential, but the landscape is shifting dramatically with the advent of multimodal AI search. Tools like ChatGPT and Google Gemini don’t just look at images; they read and interpret them as structured data. This evolution means we must now optimize for machine comprehension, ensuring our visuals are not only fast but also perfectly legible to artificial intelligence.

Technical performance is still the critical gatekeeper. Images can make or break user engagement, but they are also a common culprit behind slow page speeds and unstable layouts. While adopting modern formats like WebP is a start, the real work begins once the image loads. The new frontier is ensuring every pixel communicates clearly to AI systems.

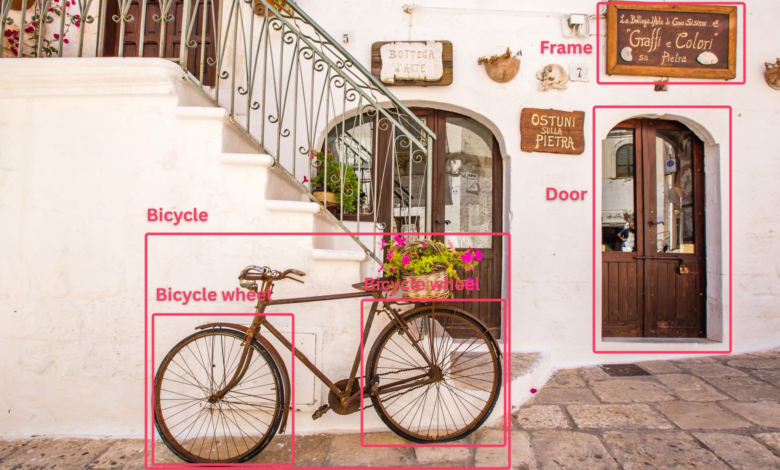

Modern AI uses a method called visual tokenization to process images. It breaks a picture into a grid of patches, converting raw pixels into a sequence of vectors that the model can “read” much like text. A key part of this process is Optical Character Recognition (OCR), where AI extracts text directly from visuals. Image quality becomes a direct ranking factor here. If a photo is overly compressed or has a poor resolution, the visual tokens become noisy. This can cause the AI to misinterpret the content, potentially leading to hallucinations where it invents details that aren’t present because the “visual words” were unclear.

Alt text now serves a crucial new function: grounding. For large language models, alt text acts as a semantic signpost. It helps the AI resolve ambiguous visual information by correlating text tokens with visual patches. Providing detailed descriptions of an image’s physical aspects, like lighting, layout, and any visible text, offers high-quality data that trains the machine eye to make accurate interpretations.

An OCR failure points audit is now a necessary part of image SEO. Search agents like Google Lens read text from product packaging to answer user queries. However, many regulatory standards for physical labels, which set minimum type sizes for human readability, fall short for machines. For reliable OCR, character height should be at least 30 pixels, and contrast should reach 40 grayscale values. Stylized fonts and reflective, glossy finishes can also cause OCR systems to fail, leading to missed or incorrect information. Packaging must be treated as a machine-readability feature.

Originality has evolved from a creative ideal to a measurable signal. When AI systems like the Google Cloud Vision API scan the web, they track where unique images first appear. If your site is the earliest indexed source for a specific product photo or angle, it can be credited as the canonical origin of that visual information, boosting its authority and “experience” score in search evaluations.

AI also performs a co-occurrence audit, analyzing every object in an image and their relationships to infer brand attributes and audience. This makes product adjacency a potential ranking signal. Using tools like the Google Vision API, you can audit your media library to see what objects are detected. The AI doesn’t judge context, but you must. For instance, a luxury watch photographed next to vintage items tells a story of heritage, while the same watch next to a plastic energy drink creates narrative dissonance that could dilute perceived value.

Beyond objects, AI is increasingly adept at quantifying emotional resonance. APIs can assign confidence scores to emotions like joy or surprise detected in human faces. This creates an optimization vector for emotional alignment. If you’re selling cheerful summer outfits but your models appear moody, the AI may deprioritize that image for relevant queries. You can use demos or API batch processing to check emotion likelihood scores, aiming to move primary images from “POSSIBLE” to “LIKELY” or “VERY_LIKELY” for the target emotion. However, these readings are only reliable if the face detection confidence score is sufficiently high, ideally above 0.90.

The semantic gap between images and text is rapidly closing. Visual assets are processed as part of the language sequence by AI. This demands that we treat images with the same strategic rigor as written content. The clarity, quality, and semantic accuracy of the pixels themselves now carry as much weight as the keywords on the page. Optimizing for the machine gaze means building a bridge from every pixel to its intended meaning.

(Source: Search Engine Land)