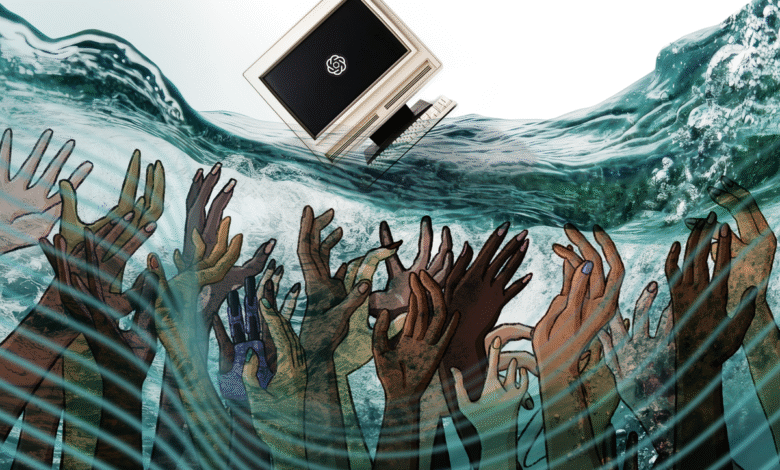

Your ChatGPT Secrets Aren’t Safe

Summary

– A Missouri college student was charged with vandalism after allegedly confessing to ChatGPT about damaging 17 cars, in what appears to be the first known case of a conversation with AI contributing to criminal charges against its user.

– ChatGPT also featured in the arrest affidavit in the high-profile Palisades Fire case, where the suspect allegedly asked the AI to generate images of a burning city.

– OpenAI’s CEO stated that AI conversations lack legal protections, raising privacy concerns as users share intimate personal information with the chatbot.

– Companies like Meta plan to scan AI interactions for targeted advertising, using personal data from chats to serve ads without opt-out options.

– The growing use of AI apps exposes users to data exploitation by tech firms, advertisers, and law enforcement, reigniting debates about privacy versus convenience.

In the early morning of August 28th, a typically quiet parking lot at a Missouri university became the site of extensive property destruction. Over a span of 45 minutes, vandals targeted 17 vehicles, smashing windows, breaking mirrors, tearing off wipers, and denting chassis, causing damage totaling tens of thousands of dollars. A month-long police investigation gathered evidence including shoe impressions, witness accounts, and surveillance video. However, the breakthrough came from an unexpected source: a series of messages sent to the AI chatbot ChatGPT, which ultimately led to criminal charges against 19-year-old student Ryan Schaefer.

Shortly after the incident, Schaefer reportedly used the ChatGPT app on his phone to describe the destruction. In these exchanges, he allegedly asked the AI, “how f***ed am I bro?.. What if I smashed the shit outta multiple cars?” This appears to be the first known instance in which a person’s conversation with an artificial intelligence platform directly contributed to criminal charges against them. Police documents cited the “troubling dialogue” as a key element of the case.

Only days later, ChatGPT was referenced again in legal documents, this time concerning a much more severe crime. Authorities arrested 29-year-old Jonathan Rinderknecht for his alleged involvement in starting the Palisades Fire, a devastating California wildfire that destroyed thousands of structures and claimed 12 lives earlier this year. According to the affidavit, Rinderknecht had asked the AI to create images depicting a city in flames.

These two incidents are unlikely to be the last where artificial intelligence plays a role in implicating individuals in illegal activities. OpenAI CEO Sam Altman has publicly stated that no legal protections currently safeguard user conversations with the chatbot. This raises significant privacy issues for the emerging technology and underscores the depth of personal information people disclose to AI systems.

Altman noted on a recent podcast, “People talk about the most personal shit in their lives to ChatGPT. Young people, especially, are using it as a therapist or a life coach, discussing relationship issues and other private matters. Currently, conversations with a therapist, lawyer, or doctor are protected by legal privilege, but that’s not the case with AI.”

The broad capabilities of AI models like ChatGPT mean people rely on them for a wide array of sensitive tasks. These range from editing private family photographs to interpreting complicated documents such as bank loan agreements or rental contracts, all of which contain highly personal data. A recent study conducted by OpenAI indicated that users are increasingly turning to the chatbot for medical advice and shopping recommendations, and even for role-playing.

Other AI applications explicitly market themselves as virtual therapists or romantic partners, often without the safeguards employed by more regulated industries. Meanwhile, illicit services on the dark web enable people to treat AI not just as a confidant, but as a potential accomplice in unlawful acts.

The volume of sensitive information being shared is staggering, and it is attracting attention from both law enforcement and malicious actors seeking to exploit it. When Perplexity introduced its AI-powered web browser earlier this year, security researchers found that hackers could hijack it to access a user’s private data, which could then be used for blackmail.

The corporations behind this technology are also capitalizing on the vast repository of intimate user data. Starting in December, Meta plans to use people’s interactions with its AI tools to deliver targeted advertisements across Facebook, Instagram, and Threads. Both voice and text exchanges with its AI will be analyzed to infer personal interests and likely purchasing behavior, with no option for users to opt out.

Meta explained in a blog post, “For example, if you chat with Meta AI about hiking, we may learn that you’re interested in hiking. As a result, you might start seeing recommendations for hiking groups, posts from friends about trails, or ads for hiking boots.”

While this might sound harmless, historical examples of targeted advertising demonstrate its potential for harm. Individuals searching for phrases like “need money help” have been shown ads for predatory lenders. Online casinos have targeted problem gamblers with offers of free spins, and elderly users have been encouraged to invest retirement savings in overpriced gold coins.

Meta CEO Mark Zuckerberg has acknowledged the extensive personal data that will be gathered under the new AI advertising policy. In April, he stated that users would enable Meta AI to “know a whole lot about you, and the people you care about, across our apps.” This is the same person who, in instant messages from Facebook’s early days, referred to its users as “dumb fucks” for entrusting him with their personal information.

Pieter Arntz, a security researcher at Malwarebytes, commented following Meta’s announcement, “Like it or not, Meta isn’t really about connecting friends all over the world. Its business model is almost entirely based on selling targeted advertising space across its platforms. The industry faces big ethical and privacy challenges. Brands and AI providers must balance personalisation with transparency and user control, especially as AI tools collect and analyze sensitive behavioral data.”

As artificial intelligence becomes more integrated into daily life, the trade-off between personal privacy and convenience is becoming increasingly pronounced. Similar to the public reckoning prompted by the Cambridge Analytica scandal, this new trend of data harvesting, along with cases like those of Schaefer and Rinderknecht, could push digital privacy back to the forefront of technology policy discussions.

In the less than three years since ChatGPT’s launch, the number of people using standalone AI applications has grown to more than a billion. Many of these users are unknowingly becoming subjects of exploitation by tech firms, advertisers, and criminal investigators. A well-known saying holds that if you aren’t paying for a service, you are not the customer; you are the product. In the age of artificial intelligence, it may be more accurate to replace “product” with “prey.”

(Source: The Independent)