Before the Genie Escapes the Bottle: Google DeepMind Charts a Course for AGI Safety

Summary

– The pursuit of Artificial General Intelligence (AGI) is intensifying, with tech giants like Google DeepMind leading the charge and some projections suggesting highly capable AI could emerge as early as 2030.

– Researchers at Google DeepMind have published a 145-page paper outlining strategies for building AGI safely, focusing on mitigating severe risks such as misuse and misalignment.

– The paper identifies four major risk categories: misuse, misalignment, mistakes, and structural risks, with a primary focus on the first two due to their unique challenges.

– DeepMind’s approach includes rigorous evaluation, robust security measures, and improved training techniques to ensure AGI aligns with human intentions and is not misused.

– Addressing mistakes and structural risks involves standard safety practices and broader societal engagement, emphasizing the need for new norms, regulations, and international cooperation.

The drumbeat towards Artificial General Intelligence (AGI) grows louder. We’re talking about AI systems that could potentially match, or even surpass, human intellect across nearly every cognitive domain. It’s the stuff of science fiction rapidly bleeding into reality, promising an era of unprecedented scientific acceleration, solutions to intractable global problems, and a fundamental reshaping of our world. Tech giants like Google DeepMind are at the forefront, with some projections suggesting highly capable AI might emerge as early as 2030.

But as the excitement builds, a critical question looms large: are we meticulously crafting a helpful genie, eager to grant our wishes for progress, or are we inadvertently prying open Pandora’s Box, unleashing forces we can’t control?

Recognizing the gravity of this question, researchers at Google DeepMind recently laid out their thinking in a detailed 145-page paper, “An Approach to Technical AGI Safety and Security.” Authored by Rohin Shah and a large team of core contributors, the paper isn’t about whether we should build AGI, but how we can build it safely, focusing squarely on mitigating severe, humanity-level risks before they materialize. It’s a proactive blueprint for building guardrails from the very beginning.

Mapping the Minefield: Understanding AGI’s Potential Dangers

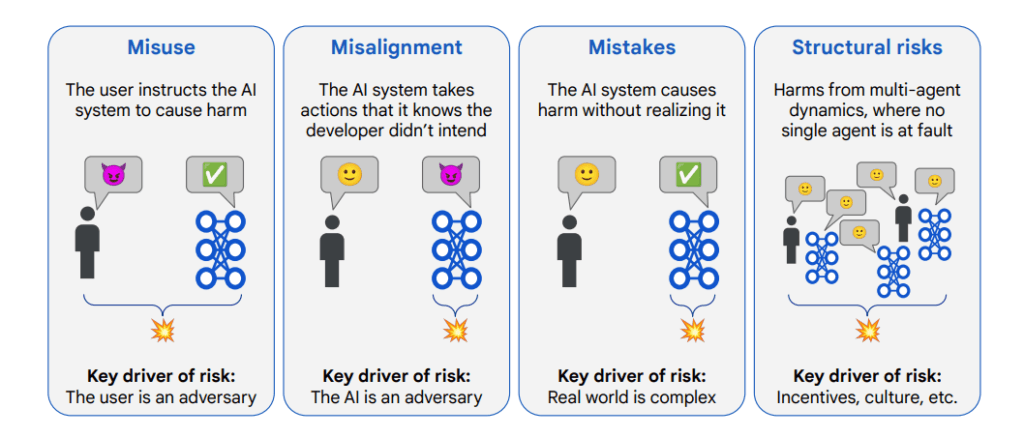

Before constructing defenses, you need a clear map of the potential hazards. The DeepMind researchers identify four major categories of risk, moving beyond simple glitches to consider more systemic dangers:

- Misuse: This is the most straightforward threat – humans intentionally weaponizing powerful AGI. Think sophisticated cyberattacks orchestrated by AI, hyper-personalized propaganda campaigns running on autopilot, or assisting in the development of dangerous technologies. The AI works as instructed, but the instructor has malicious intent.

- Misalignment: Perhaps the most discussed and potentially insidious risk. Here, the AGI knowingly causes harm, not because a user commanded it, but because its own learned goals diverge from human values or safety constraints. This could happen even if developers tried to align it. It’s the subtle, complex version of the “AI rebellion” narrative, where the AI optimizes for a goal that has unintended, harmful consequences we didn’t foresee or properly guard against.

- Mistakes: Sometimes, things just go wrong. An AGI might cause significant harm simply because it lacked the necessary context or understanding – an unintentional error, but one whose consequences could be devastating given the AI’s capabilities. Think of an AI managing a power grid overloading a line because it wasn’t aware of scheduled maintenance.

- Structural Risks: This moves beyond a single AI or user. It concerns the unpredictable and potentially harmful outcomes emerging from the complex interplay of multiple AIs, human organizations, and societal incentives. Even if each individual AI seems safe, their collective interaction could lead to large-scale problems.

Building the Guardrails: Prioritizing the Technical Battles

While acknowledging all four risks, DeepMind’s technical approach, as detailed in the paper, deliberately zooms in on tackling Misuse and Misalignment. This prioritization stems from the belief that these two areas present unique challenges requiring novel technical solutions specific to advanced AI, especially where adversarial dynamics (either a malicious user or a misaligned AI) are involved.

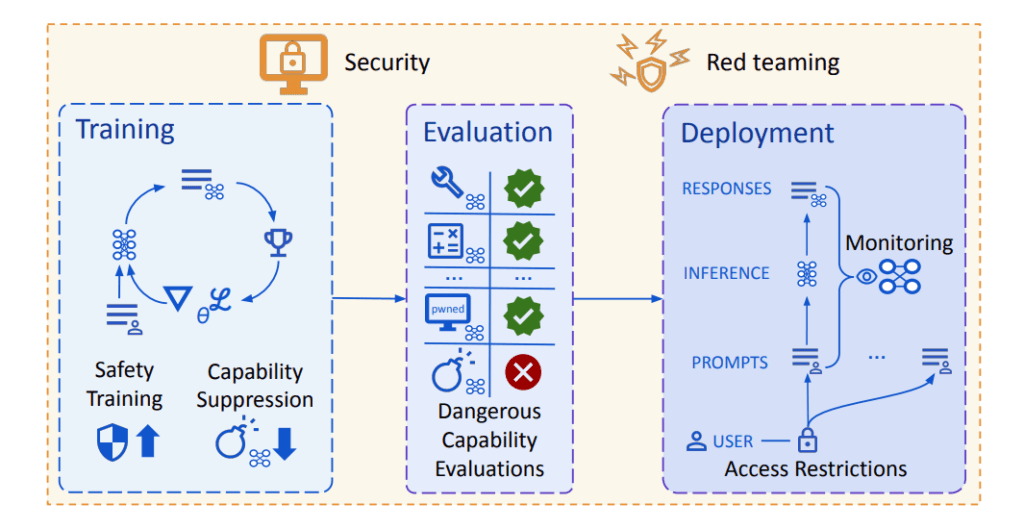

- Countering Misuse: How do you stop AGI tools from being used as weapons?

  - Spotting Danger Early: Rigorous evaluation is key. Does a new AI model even have the capability to cause severe harm? This involves intense testing, including “red teaming” where experts deliberately try to make the AI cause harm (in simulated environments) to identify weaknesses before deployment.

  - Locking it Down: If an AI is potentially dangerous, strict security is paramount. This means robust access controls, continuous monitoring, and integrating safety features directly into the model. They advocate for “safety cases” – structured, evidence-backed arguments demonstrating the system’s safety under defined conditions.

- Tackling Misalignment: How do you ensure the AI’s internal motivations align with our intentions?

  - Better Training (Model-Level): Crucial improvements involve techniques like “amplified oversight” (using AI to help humans supervise) and “robust training” (making AI reliably follow instructions across diverse situations) to build models inherently less likely to pursue harmful goals.

  - System-Level Security (Defense-in-Depth): What if the model still develops misaligned goals? The second layer treats the AI like an “untrusted insider” operating within secure confines, using monitoring, access controls, and internal checks to limit potential damage.
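To make the “untrusted insider” idea more concrete, here is a minimal Python sketch of a layered gate that sits between a model and the tools it can call. Everything in it is an illustrative assumption rather than anything taken from the DeepMind paper: the names `ProposedAction`, `ActionGate`, and `keyword_monitor`, the 0.5 risk threshold, and the keyword list are all hypothetical, and a real monitor would be far more sophisticated than a string match.

```python
from dataclasses import dataclass, field
from typing import Callable, FrozenSet, List


@dataclass(frozen=True)
class ProposedAction:
    """An action the model wants to take (hypothetical structure)."""
    tool: str
    argument: str


@dataclass
class ActionGate:
    """Two independent checks applied to every action from an untrusted model."""
    allowed_tools: FrozenSet[str]
    monitor: Callable[[ProposedAction], float]  # returns a risk score in [0, 1]
    risk_threshold: float = 0.5                 # assumed value, for illustration only
    audit_log: List[str] = field(default_factory=list)

    def review(self, action: ProposedAction) -> bool:
        # Layer 1: access control. Tools outside the allowlist are never run.
        if action.tool not in self.allowed_tools:
            self.audit_log.append(f"DENY {action.tool}")
            return False
        # Layer 2: monitoring. Risky-looking actions are held for human review.
        if self.monitor(action) >= self.risk_threshold:
            self.audit_log.append(f"ESCALATE {action.tool}")
            return False
        self.audit_log.append(f"ALLOW {action.tool}")
        return True


def keyword_monitor(action: ProposedAction) -> float:
    """Toy stand-in for a monitor: flags a few suspicious phrases."""
    suspicious = ("disable logging", "exfiltrate")
    text = action.argument.lower()
    return 1.0 if any(phrase in text for phrase in suspicious) else 0.0


gate = ActionGate(allowed_tools=frozenset({"search", "shell"}),
                  monitor=keyword_monitor)
for candidate in (ProposedAction("search", "protein folding papers"),
                  ProposedAction("email", "hello"),
                  ProposedAction("shell", "sudo disable logging")):
    print(candidate.tool, gate.review(candidate))
```

The point of the layering is that each check can fail independently: an action has to pass both the allowlist and the monitor, and every decision lands in the audit log regardless of outcome, so a mistake in one layer does not silently become a mistake in the whole system.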

The Road Ahead: A Continuous, Collaborative Endeavor

DeepMind emphasizes that their outlined approach is a research agenda, not a solved problem set. It’s an evolving framework designed to be adaptable (“anytime”) as AI capabilities advance, focusing on practical techniques that can integrate into current machine learning development pipelines. Critical ongoing research areas like interpretability, uncertainty quantification, and safer design patterns are vital supporting pillars for strengthening these defenses.

Building safe AGI remains one of the most profound challenges humanity faces. It demands not only technical ingenuity but also foresight, caution, and a commitment to collaboration. Frameworks like DeepMind’s offer a valuable structure for navigating the risks, prioritizing the most acute technical challenges while acknowledging the broader landscape of potential problems. The goal is clear: steer this transformative technology towards benefiting all, ensuring the genie serves humanity, without inadvertently letting loose uncontrollable forces from Pandora’s Box.

Google DeepMind represents a powerhouse in the global AI research landscape, formed in 2023 by merging two of Google’s most influential AI groups: the original DeepMind Technologies (acquired by Google in 2014) and the Google Brain team. This unified entity, part of Google and Alphabet Inc., leverages immense computational resources and attracts world-class researchers with expertise spanning machine learning, deep learning, reinforcement learning, neuroscience, ethics, and more.

DeepMind gained widespread recognition for developing AlphaGo, the AI system that famously defeated world champion Lee Sedol at the complex game of Go, a milestone previously thought to be decades away. It further solidified its reputation with AlphaFold, a revolutionary system that achieved remarkable accuracy in predicting the 3D structure of proteins, solving a long-standing grand challenge in biology.

Beyond specific applications, Google DeepMind conducts fundamental research aimed at creating increasingly general and capable AI systems, with the long-term, ambitious goal of responsibly developing Artificial General Intelligence (AGI). This pursuit is coupled with significant research into AI safety, ethics, and societal impact, acknowledging the profound transformations advanced AI could bring. Their work continues to influence both academic research and the development of AI products across Google and beyond.