NVIDIA’s Vision for the Future: GTC 2025 Keynote Highlights

Summary

– NVIDIA CEO Jensen Huang presented a vision at GTC 2025 for reshaping computing and technology, emphasizing the evolution from perception-focused AI to “agentic AI” and “physical AI” which understand context and interact with the physical world.

– The new Blackwell architecture, featured in the GeForce RTX 5090, is central to NVIDIA’s strategy for “AI factories,” with significant advancements like disaggregation of NVLink switches and liquid cooling to achieve one Exaflops of computing power per rack.

– NVIDIA’s expanding software ecosystem, including the CUDA-X libraries, is crucial for deploying accelerated software broadly, reaching a tipping point of accelerated computing adoption across various sectors.

– NVIDIA is expanding AI development beyond the cloud into enterprise and edge computing, with partnerships like those with Cisco and T-Mobile, and a notable collaboration with General Motors for building a self-driving car fleet.

– Huang introduced NVIDIA Dynamo, an open-source operating system for AI factories, and outlined a multi-year hardware roadmap, including Blackwell Ultra, Vera Rubin, and Rubin Ultra, to help organizations plan infrastructure investments confidently.

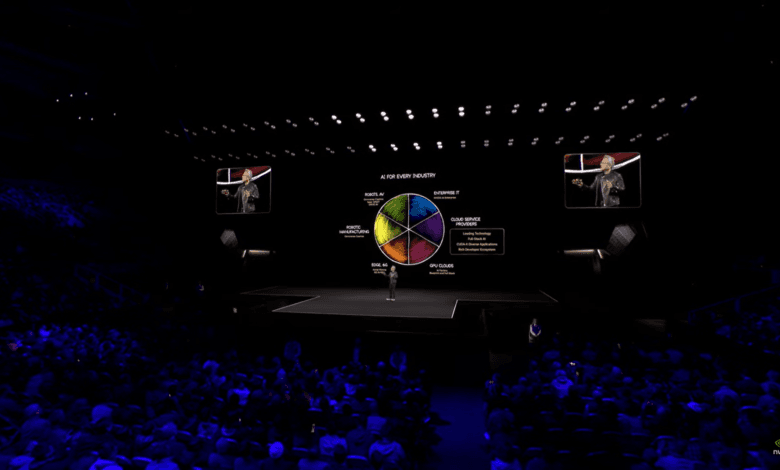

In his characteristic black leather jacket, NVIDIA CEO Jensen Huang took the stage at GTC 2025 this March to present what might be his most ambitious vision yet for the computing giant. The packed auditorium fell silent as Huang began to outline how NVIDIA plans to reshape not just computing but our entire relationship with technology.

“Today, we create intelligence in token factories,” Huang declared early in his presentation. “These tokens transform images into scientific data charting alien atmospheres and guiding tomorrow’s explorers. They turn raw data into foresight so next time we’ll be ready.”

The keynote outlined a comprehensive journey from AI’s recent past through its immediate future, presenting a roadmap that carries significant implications for industries worldwide.

The Shifting Landscape of AI

Huang framed AI’s evolution in distinct phases: from the perception-focused systems of the past decade to the generative capabilities that have dominated headlines for the last five years. But it was his characterization of what comes next that captured attention – what he called “agentic AI” and “physical AI.”

These next-generation systems move beyond generating content to actually understanding context, reasoning independently, formulating plans, and interacting with the physical world. According to Huang, these systems represent far more than incremental progress – they require rethinking the fundamental computing architecture needed to power them.

“The computation requirement for AI, especially with agentic AI and reasoning, is easily a hundred times more than we thought we needed this time last year,” Huang explained, citing the increased tokens generated through reasoning processes like “Chain of Thought” and the demand for faster computation to maintain responsiveness.
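The arithmetic behind a claim like this can be sketched in a few lines. The snippet below is only an illustration: the function name and the two tenfold factors are assumptions chosen to show how a token multiplier and a speed multiplier compound, not figures taken from this article.

```python
def relative_compute(token_multiplier: float, speed_multiplier: float) -> float:
    """Return the compute needed relative to a baseline model.

    token_multiplier: how many times more tokens a reasoning model emits
                      per answer (assumed value, for illustration only).
    speed_multiplier: how many times faster each token must be produced
                      to keep the same response time (assumed value).
    """
    # More tokens and a faster required rate multiply, they do not add.
    return token_multiplier * speed_multiplier


if __name__ == "__main__":
    # Hypothetical inputs: 10x the tokens, generated 10x faster.
    print(relative_compute(10, 10))
```

The point of the sketch is that the two effects Huang cites, longer reasoning traces and the need to stay responsive, multiply rather than add.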

Blackwell: Powering the AI Factory

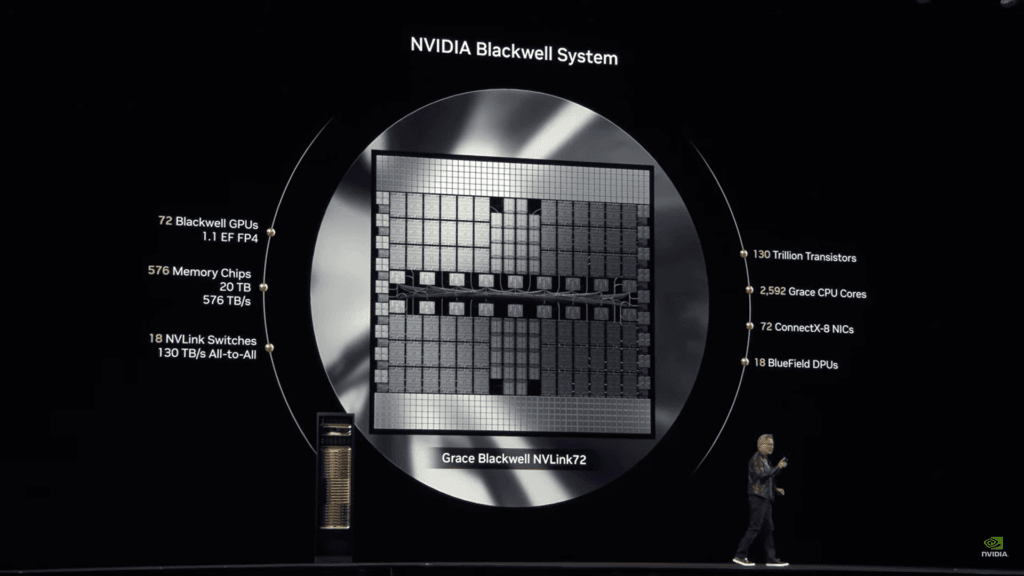

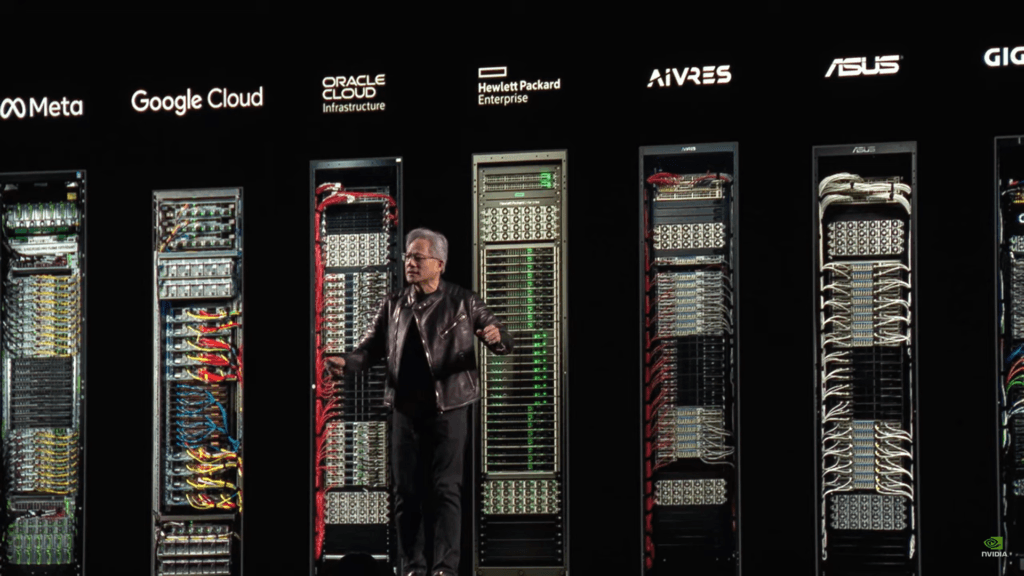

Central to NVIDIA’s strategy is the Blackwell architecture, prominently featured in the new GeForce RTX 5090. Beyond raw performance improvements over its predecessor, Blackwell represents NVIDIA’s vision for “AI factories” – infrastructure specifically designed to generate AI tokens at unprecedented scale.

A key technological advancement enabling this vision is the disaggregation of NVLink switches and the shift to liquid cooling, creating incredibly dense computing units. “A single rack with Blackwell can achieve one Exaflops of computing power,” Huang noted, emphasizing the critical importance of scaling up before scaling out for complex AI workloads.

Software: The Critical Enabler

While hardware advancements captured headlines, Huang devoted significant time to NVIDIA’s expanding software ecosystem. The CUDA-X libraries continue to grow, now including new tools like cuDSS for sparse solvers in computational analysis and engineering applications.

“CUDA is in every cloud, it’s in every data center,” Huang stated, positioning the platform as the foundation that allows developers to deploy accelerated software broadly. This ecosystem, according to NVIDIA, has reached “the tipping point of accelerated computing,” driving adoption across sectors.

Beyond the Cloud: Enterprise and Edge

Though AI development began primarily in cloud environments, NVIDIA emphasized its expansion into enterprise and edge computing. Partnerships like those with Cisco and T-Mobile aim to build comprehensive stacks for AI in radio networks, suggesting the potential for AI to transform communications infrastructure.

Perhaps most notably, Huang announced that General Motors has selected NVIDIA to build its future self-driving car fleet. This partnership spans the entire automotive value chain – from manufacturing to enterprise operations to in-car systems.

Safety remains paramount, with NVIDIA’s “Halos” initiative for automotive safety promising rigorous assessment and validation systems to ensure autonomous systems meet the highest safety standards.

Meeting Extreme Demands: NVIDIA Dynamo

AI inference – generating tokens in production environments – presents unique computing challenges, requiring both high throughput and minimal latency. To address these requirements, Huang introduced NVIDIA Dynamo, an open-source “operating system of an AI Factory.”

Dynamo manages the complexities of distributed inference across massive GPU clusters, handling workload distribution, cache routing, and optimizing for different phases of inference. When paired with Blackwell and NVLink 72, Dynamo is projected to achieve 40 times the AI factory performance of the previous Hopper architecture for reasoning models.
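To make the idea of cache routing concrete, here is a toy sketch in Python. It does not use Dynamo’s actual API; the `Worker` class, `pick_worker` function, and scoring rule are all hypothetical, and the sketch assumes only that a scheduler prefers the worker that already holds the longest matching prompt prefix in its cache, breaking ties by the lighter load.

```python
from dataclasses import dataclass, field


@dataclass
class Worker:
    """A hypothetical GPU worker; not a real Dynamo type."""
    name: str
    load: int = 0  # number of requests assigned so far
    cached_prefixes: list = field(default_factory=list)


def shared_prefix_len(a, b) -> int:
    """Count how many leading tokens two sequences have in common."""
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n


def pick_worker(workers, prompt_tokens) -> Worker:
    """Route a request to the worker with the best cache overlap.

    Score = (longest cached prefix match, negative load), so cache reuse
    wins first and the less busy worker wins ties.
    """
    def score(w: Worker):
        best = max(
            (shared_prefix_len(p, prompt_tokens) for p in w.cached_prefixes),
            default=0,
        )
        return (best, -w.load)

    chosen = max(workers, key=score)
    chosen.load += 1
    # Assume the worker now keeps this prompt's state in its cache.
    chosen.cached_prefixes.append(tuple(prompt_tokens))
    return chosen


if __name__ == "__main__":
    pool = [Worker("gpu-a"), Worker("gpu-b")]
    for prompt in [("sys", "hello"), ("sys", "hello", "again"), ("other",)]:
        print(prompt, "->", pick_worker(pool, prompt).name)
```

A production scheduler would also have to weigh the separate prefill and decode phases, cache eviction, and network cost; the sketch covers only the routing decision.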

A Multi-Year Vision

Perhaps most surprisingly, Huang revealed a detailed multi-year roadmap for NVIDIA’s hardware evolution:

- Blackwell Ultra (Second Half of 2025): An incremental upgrade with more floating-point operations, memory, and bandwidth.

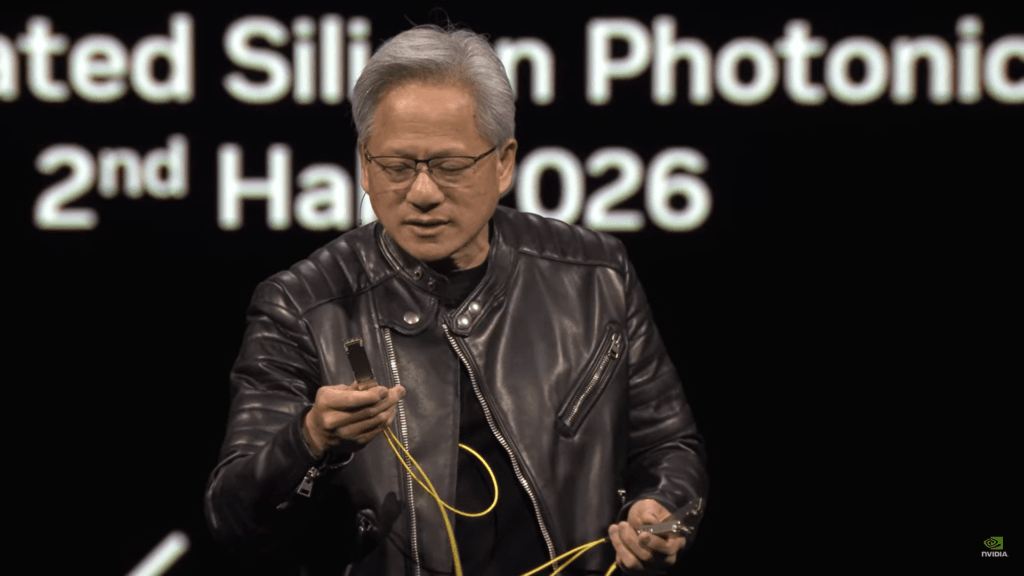

- Vera Rubin (Second Half of 2026): Featuring a new CPU with doubled performance, new GPU architecture, enhanced networking (NVLink 144), and HBM4 memory.

- Rubin Ultra (Second Half of 2027): An extreme scale-up architecture promising 15 Exaflops and 4,600 TB/s scale-up bandwidth.

This transparent long-term outlook, unusual in the traditionally secretive tech industry, appears designed to help organizations plan infrastructure investments with confidence.

The Networking Challenge

Scaling to millions of GPUs presents significant networking challenges. To address them, NVIDIA announced its first co-packaged optics silicon photonic system, featuring 1.6 terabits per second of bandwidth per port based on micro-ring resonator modulator technology.

This innovation aims to dramatically reduce power consumption and cost compared to traditional transceivers, enabling more efficient scaling of massive AI infrastructure.

Enterprise AI: Making It Accessible

Recognizing that not every organization can build hyperscale infrastructure, NVIDIA introduced new enterprise-focused offerings. The DGX Spark, a personal AI development platform in a smaller form factor, and the DGX Station, a high-performance workstation featuring liquid-cooled Grace Blackwell processors, aim to bring AI capabilities to organizations of all sizes.

Complementing this hardware, NVIDIA announced NIMs – a system including open-source enterprise-ready reasoning models and libraries that companies can deploy on various NVIDIA platforms, whether on-premises or in the cloud.

Robotics: The Next Frontier

The keynote concluded with Huang’s vision for robotics as the next major technology industry, driven by labor shortages and advances in what he termed “physical AI.”

NVIDIA’s approach centers on a continuous development loop of simulation, training, testing, and real-world experience. Three key platforms power this approach:

- Omniverse and Cosmos generate massive amounts of synthetic data to train robot behaviors

- NVIDIA Isaac GR00T N1, a new foundation model for humanoid robots featuring a dual “thinking fast and slow” architecture

- NVIDIA Newton, a GPU-accelerated physics engine developed with Google DeepMind and Disney Research

In a move that surprised many, Huang announced that GR00T N1 would be open-sourced, potentially accelerating adoption across the robotics community.

The Path Forward

As attendees filed out of the keynote hall, conversations buzzed with implications. NVIDIA’s vision suggests not merely evolutionary progress but a fundamental rethinking of computing architecture to enable increasingly sophisticated forms of artificial intelligence.

The company’s broad ecosystem strategy – spanning hardware, software, enterprise solutions, and strategic partnerships – positions NVIDIA at the center of this transformation. While challenges remain, particularly in scaling, power efficiency, and enterprise adoption, the roadmap presented at GTC 2025 offers a compelling vision of computing’s future.

What remains to be seen is how this vision will reshape industries, from automotive to healthcare to communications, and ultimately, our relationship with intelligent machines.