The Open-Source Rebellion: How Hugging Face is Rewriting Big Tech’s AI Playbook

In the high-stakes poker game of artificial intelligence, where tech giants bet billions on proprietary models hidden behind elaborate paywalls, one company is playing an entirely different game. Hugging Face, valued at $4.5 billion after funding rounds that have raised a total of $396 million to date, has attracted investment from major technology companies including Google, Amazon, Nvidia, IBM, and Salesforce, and it is inviting everyone to play.

“The traditional venture playbook says to build moats, not bridges,” notes Sarah Chen, a prominent Silicon Valley VC. “But Hugging Face is proving that in AI, the network effect of open collaboration might be the biggest moat of all.”

The Numbers Don’t Lie

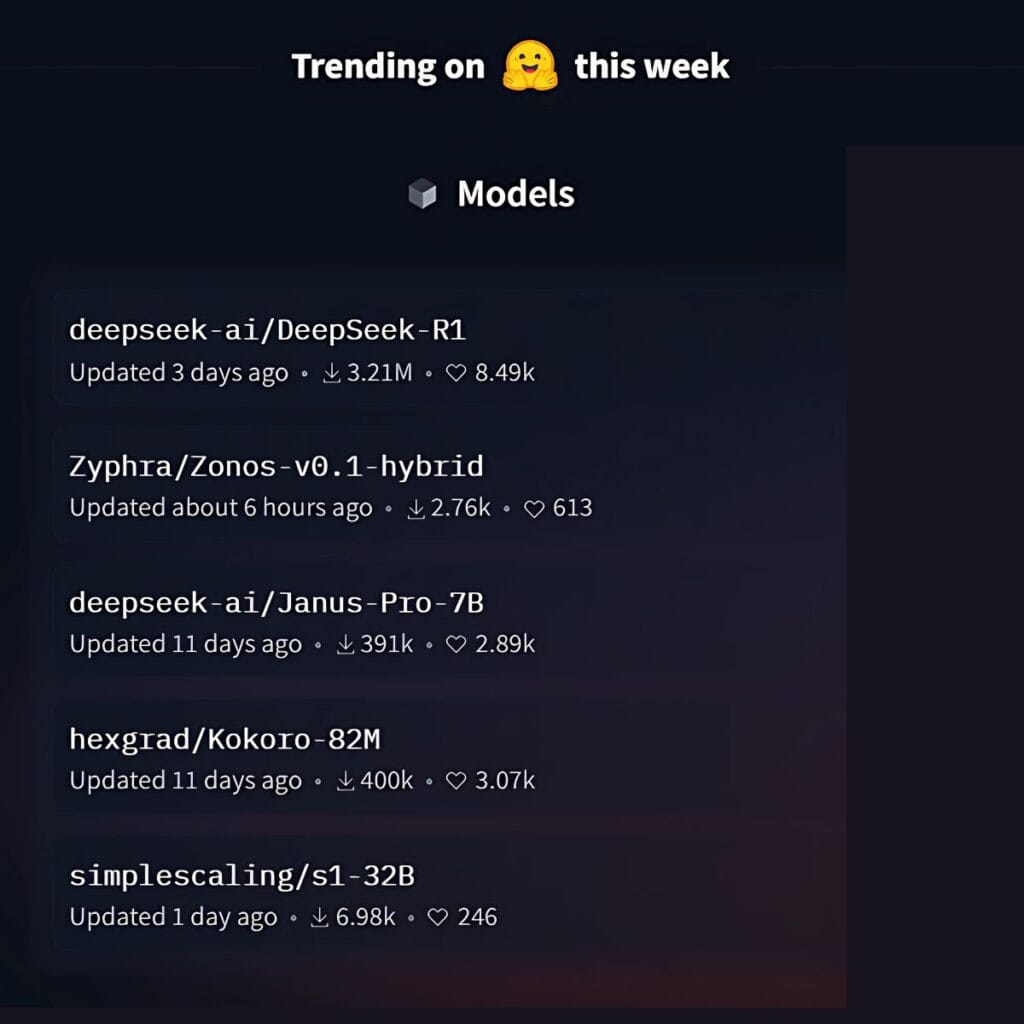

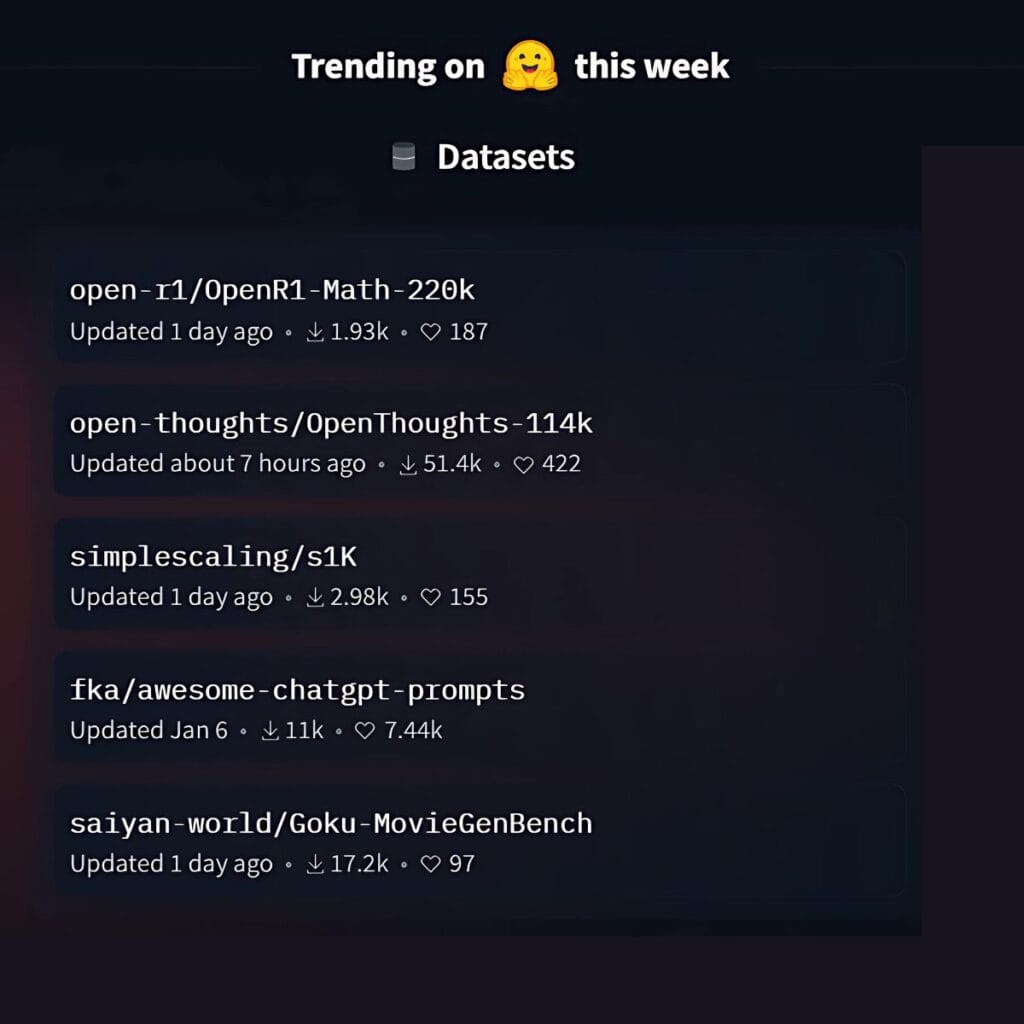

Every 10 seconds – faster than you can say “democratized AI” – a new project lands on Hugging Face’s servers. The platform has blazed past the million-repository milestone, a number that has venture capitalists and tech giants alike doing double-takes at their spreadsheets.

Inside their New York headquarters, the energy feels less like a typical startup and more like a cross between a research lab and a public library. Engineers cluster around standing desks, debating the finer points of transformer architectures while their latest creation, SmolLM2, quietly outperforms rival models of similar or larger size on several benchmarks, challenging conventional wisdom about AI development.

The Technical Edge: Small Models, Big Impact

SmolLM2 represents everything that makes Hugging Face’s approach unique. While competitors race to build ever-larger models, Hugging Face’s team took a different route.

“We’re seeing a fundamental shift in how AI models are developed,” explains CEO Clement Delangue. “The future isn’t just about massive models; it’s about specialized, efficient ones that excel at specific tasks.”

The numbers back him up. SmolLM2’s variants, particularly the lean 135-million- and 360-million-parameter versions alongside the larger 1.7-billion-parameter model, are showing remarkable efficiency. For context, that’s a fraction of the size of models like GPT-4, yet they’re achieving competitive results in specific domains.
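To make “a fraction of the size” a little more concrete, here is a back-of-the-envelope estimate of how much memory each SmolLM2 variant’s weights occupy. It is a sketch under stated assumptions: nominal parameter counts (the real counts differ slightly), weights only (no activations or KV cache), and 2 bytes per parameter for bfloat16 or half a byte for 4-bit quantization. The helper name `weight_memory_gb` is hypothetical.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Assumptions: nominal parameter counts; weights only (no activations,
# KV cache, or optimizer state); bf16 = 2 bytes, 4-bit = 0.5 bytes.

SMOLLM2_SIZES = {
    "SmolLM2-135M": 135_000_000,
    "SmolLM2-360M": 360_000_000,
    "SmolLM2-1.7B": 1_700_000_000,
}

BYTES_PER_PARAM = {"bf16": 2.0, "int4": 0.5}


def weight_memory_gb(num_params: int, dtype: str) -> float:
    """Return approximate weight memory in gigabytes (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    for name, params in SMOLLM2_SIZES.items():
        bf16 = weight_memory_gb(params, "bf16")
        int4 = weight_memory_gb(params, "int4")
        print(f"{name}: ~{bf16:.2f} GB in bf16, ~{int4:.2f} GB at 4-bit")
```

By this estimate, even the 1.7-billion-parameter model needs only about 3.4 GB for its bf16 weights, small enough to fit in the memory of many consumer laptops.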

The secret sauce? A meticulously curated training approach that combines:

- An 11-trillion-token dataset balancing web content and programming examples

- Custom datasets for mathematics (FineMath), coding (Stack-Edu), and conversation (SmolTalk)

- Instruction fine-tuning and example-based learning

- Reinforcement learning for user-aligned responses
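Balancing several data sources in one training run is commonly done by choosing each example’s source at random according to fixed mixture weights. The sketch below illustrates that general idea with Python’s standard library; the source labels and the 70/20/10 weights are hypothetical placeholders, not SmolLM2’s actual recipe, and production recipes often adjust such weights across training stages.

```python
import random
from collections import Counter

# Hypothetical mixture weights for illustration only; these are NOT
# SmolLM2's published ratios.
MIXTURE = {
    "web": 0.70,
    "code": 0.20,
    "math": 0.10,
}


def sample_sources(weights: dict[str, float], n: int, seed: int = 0) -> list[str]:
    """Draw n source labels, each chosen with probability proportional to its weight."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    names = list(weights)
    return rng.choices(names, weights=[weights[k] for k in names], k=n)


if __name__ == "__main__":
    draws = sample_sources(MIXTURE, n=10_000)
    counts = Counter(draws)
    for name in MIXTURE:
        # Observed share should land close to the configured weight.
        print(f"{name}: {counts[name] / len(draws):.3f}")
```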

“When we released SmolLM2’s training data as open source,” notes a senior Hugging Face engineer, “it wasn’t just about transparency. It was about proving that careful curation and intelligent architecture can sometimes outperform raw computational power.”

The Dollar Store Revolution

In a move that seems almost heretical in today’s AI economy, Hugging Face offers its HUGS service – a tool that simplifies deploying open AI models – for just one dollar per hour per container through major cloud providers. “It’s not just about cost,” explains Dr. Maria Rodriguez, an AI ethics researcher at Stanford. “It’s about fundamentally changing who gets to participate in AI development.”

This democratization is already showing results. Through partnerships with Amazon and Google, HUGS is helping companies break free from traditional AI vendor lock-in. The platform can adapt open-source models like Meta’s Llama to run on various hardware configurations, from Nvidia to AMD chips, giving companies unprecedented control over their AI infrastructure.
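To put the one-dollar figure in perspective, the sketch below turns an hourly rate into a fee over a billing period. It assumes the rate is charged per running container-hour and that the underlying cloud compute is billed separately on top; the provider’s marketplace listing is the authority on current terms. The function and constant names are illustrative.

```python
# Back-of-the-envelope HUGS fee estimate.
# Assumptions: a flat $1 per container-hour, charged for every hour a
# container runs, EXCLUDING the underlying cloud compute (GPU instance) cost.

HUGS_RATE_USD_PER_CONTAINER_HOUR = 1.0
HOURS_PER_DAY = 24


def hugs_fee_usd(containers: int, days: float, hours_per_day: float = HOURS_PER_DAY) -> float:
    """Return the fee for `containers` running `hours_per_day` hours on each of `days` days."""
    return containers * days * hours_per_day * HUGS_RATE_USD_PER_CONTAINER_HOUR


if __name__ == "__main__":
    print(hugs_fee_usd(1, 30))                   # one always-on container for 30 days: 720.0
    print(hugs_fee_usd(3, 22, hours_per_day=8))  # three containers, 8 h/day, 22 days: 528.0
```

Under these assumptions, an always-on container adds roughly $720 a month before compute.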

The Business Implications

The platform’s growth tells a story that’s reshaping the AI industry:

- Over one million publicly available AI models

- Partnerships with tech giants while maintaining independence

- A community spanning from individual developers to major research institutions

- Revenue streams that prove open source can be profitable

“We’re not just building a platform,” a senior engineer at Hugging Face explains. “We’re architecting a future where AI development looks more like Wikipedia and less like Fort Knox.”

The Market Response

At the company’s latest board meeting, Hugging Face outlined an aggressive expansion into enterprise solutions while maintaining their open-source core. The strategy is paying off – early investors who bet on their vision are seeing returns that validate the unconventional approach.

“This isn’t just about democratizing AI,” notes Chen. “It’s about proving that open source can be a viable business model in one of tech’s most competitive spaces.”

The Coming AI Landscape

The implications for the broader AI industry are profound. While tech giants continue to guard their models, Hugging Face’s success is forcing a rethinking of traditional AI business models. Their emphasis on specialized, efficient models is particularly disruptive in an era where the environmental and computational costs of AI are under increasing scrutiny.

“One major company could see its market value halved as AI disruption hits home,” predicts Delangue, though he won’t name names. “Just ask the call centers watching Klarna’s AI handle two-thirds of customer interactions with eerie efficiency.”

The Bottom Line

In an era where tech increasingly feels like a zero-sum game, Hugging Face is betting on abundance. Hosting more than a million models alongside a vast library of open datasets, they’re proving that in the AI race, the winner might not be the company with the biggest models or the deepest pockets, but the one that builds the biggest community.

Their strategy of open-source commitment, specialized model development, and accessible tools is proving to be a viable business model. In making AI development look more like a collaborative workshop than a walled garden, Hugging Face might just be showing us the future of technology development itself.

In democratizing AI development, Hugging Face is ensuring that solutions to AI’s remaining challenges can come from anywhere, not just Silicon Valley’s elite labs. The real revolution might not be in the technology itself, but in how it’s shared. And in that shift might lie the key to ensuring AI’s benefits don’t just trickle down, but flood outward to every corner of the globe.

Be sure to check out our Annex to this blog, which explores the FAQ “Understanding Hugging Face: What It Is and Why It Matters.”