Microsoft Unveils New AI Chip for Faster Inference

Summary

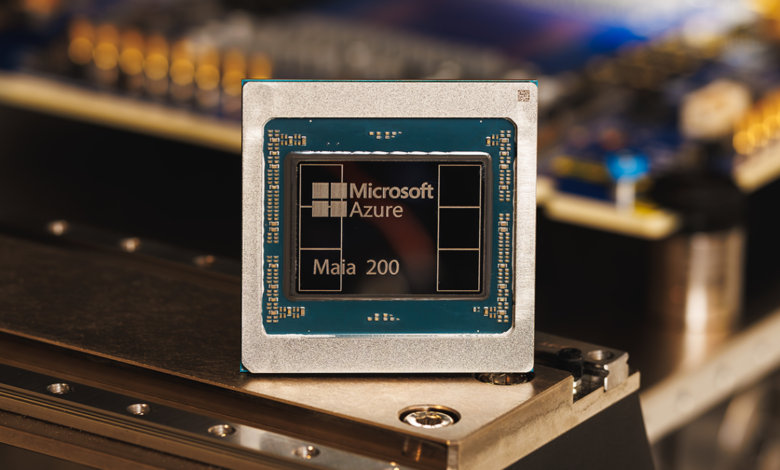

– Microsoft has launched the Maia 200, a new chip designed to scale and optimize AI inference workloads.

– The chip offers significantly improved performance over its predecessor, delivering over 10 petaflops in 4-bit precision.

– The launch addresses the growing operational importance and cost of AI inference for businesses.

– It is part of a broader industry trend where tech giants like Google and Amazon develop in-house chips to reduce reliance on Nvidia.

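– Microsoft claims Maia 200 delivers three times the FP4 performance of Amazon’s third-generation Trainium and surpasses the FP8 performance of Google’s seventh-generation TPU. The chip already powers Microsoft’s own AI models and Copilot, and its software development kit is now available to select external partners.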

Microsoft has introduced its newest custom silicon, the Maia 200, engineered specifically to accelerate and scale artificial intelligence inference workloads. The chip is a strategic move to improve performance and efficiency when running complex AI models, targeting a critical and costly phase of the AI lifecycle. As businesses increasingly deploy AI, the computational demands of inference (the process of using a trained model to generate predictions) have become a major operational focus.

The Maia 200 succeeds the Maia 100 from 2023 and delivers a significant performance leap. It integrates over 100 billion transistors and delivers over 10 petaflops at 4-bit precision (FP4) and approximately 5 petaflops at 8-bit precision (FP8). According to Microsoft, a single Maia 200 node can run the largest current models and still leave headroom for the even larger models expected in the future.
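To make the precision figures concrete, here is a minimal Python sketch of the arithmetic behind them. It uses only the peak numbers quoted above, treated as round values and not as measurements. The 70-billion-parameter model size and the function names are hypothetical, chosen purely for illustration, and the memory estimate covers model weights only, ignoring activations, caches, and any format overhead.

```python
# Peak throughput figures as quoted in the article, in petaflops.
# "Over 10" and "approximately 5" are rounded here for illustration.
PEAK_PFLOPS = {"fp4": 10.0, "fp8": 5.0}

# Bits needed to store one weight at each precision.
BITS_PER_WEIGHT = {"fp4": 4, "fp8": 8}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate memory for the model weights alone, in GB (1e9 bytes)."""
    return num_params * BITS_PER_WEIGHT[precision] / 8 / 1e9


def throughput_ratio(precision_a: str, precision_b: str) -> float:
    """Ratio of quoted peak throughput between two precisions."""
    return PEAK_PFLOPS[precision_a] / PEAK_PFLOPS[precision_b]


if __name__ == "__main__":
    hypothetical_params = 70e9  # assumption: a 70-billion-parameter model

    for precision in ("fp8", "fp4"):
        gb = weight_memory_gb(hypothetical_params, precision)
        print(f"{precision}: ~{gb:.0f} GB of weights")

    ratio = throughput_ratio("fp4", "fp8")
    print(f"Quoted FP4 peak is about {ratio:.0f}x the FP8 peak")
```

Under these assumptions, halving the bits per weight halves the weight footprint and roughly doubles the quoted peak throughput, which is why low-precision formats matter so much for inference cost.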

This development is part of a broader industry shift where major technology firms are designing their own specialized hardware to reduce reliance on external suppliers. Nvidia’s dominant GPUs have been essential for AI, but companies like Microsoft, Google, and Amazon are now creating competitive in-house alternatives. Google offers its Tensor Processing Units (TPUs) through its cloud services, while Amazon recently launched the latest iteration of its Trainium AI accelerator chip. These custom solutions aim to lower overall hardware costs and provide optimized performance for specific workloads.

Microsoft positions the Maia 200 as a direct competitor in this space. The company claims the chip delivers three times the FP4 performance of Amazon’s third-generation Trainium chips and surpasses the FP8 performance of Google’s seventh-generation TPU. By developing its own silicon, Microsoft gains greater control over its AI infrastructure, potentially leading to more efficient and cost-effective operations for its services and clients.

The chip is already in active use within Microsoft’s ecosystem. It powers models developed by the company’s Superintelligence team and supports the operations of its Copilot AI assistant. Furthermore, Microsoft has begun offering access to the Maia 200 software development kit to a select group of external partners, including developers, academic researchers, and leading AI labs, inviting them to integrate the new hardware into their own projects. This rollout underscores Microsoft’s commitment to building a comprehensive, high-performance AI stack from the silicon level upward.

(Source: TechCrunch)