Meta’s Smart Glasses Risk Being Ruined by Its Own Flaws

Summary

– Meta’s discreet Ray-Ban smart glasses are unnerving because their design makes them perfect, nearly invisible monitoring tools, despite having a privacy indicator light.

– The company considered adding facial recognition, framed as an accessibility feature, which raises significant privacy concerns given Meta’s reputation and the potential for misuse.

– There is a clear tension between the glasses’ benefits for accessibility and the serious risks of covert recording and data misuse by irresponsible users.

– Meta’s reactive privacy policy, which relies on terms of service and indicator lights, is seen as inadequate, especially as mods can disable lights and misuse is already reported.

– The success of smart glasses is fragile and hinges on public trust, which Meta’s poor privacy reputation jeopardizes, risking a consumer backlash similar to Google Glass.

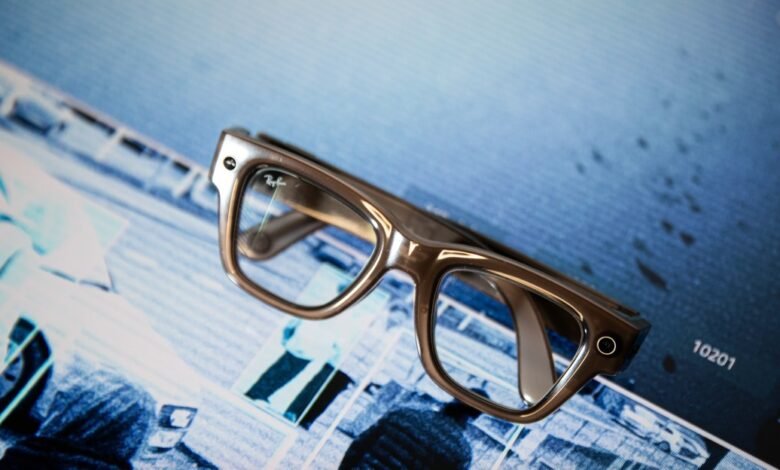

The discreet design of Meta’s Ray-Ban smart glasses is both their greatest strength and their most significant liability. These wearable devices blend seamlessly into everyday life, offering a level of convenience that feels futuristic. Yet this very invisibility fuels deep public unease, a tension that threatens to undermine the entire product category. The recent revelation that Meta considered launching facial recognition software during a politically charged period, hoping privacy advocates would be distracted, only reinforces the skepticism many consumers feel toward the company’s intentions.

Enthusiasts often argue that privacy fears are exaggerated. The smartphone in your pocket has a camera, governments already use facial recognition, and CCTV networks are ubiquitous. As true-crime documentaries show, it’s nearly impossible to avoid being recorded in public. However, smart glasses introduce a new dimension: the cameras are tiny, the privacy indicator lights are faint, and the design is intentionally ordinary. This makes the recording tool nearly invisible, which is precisely the point of the product. It creates a troubling paradox. The glasses are excellent because they are discreet, but that discretion is unsettling because it makes them perfect for covert monitoring.

Wearing them can feel like being a spy. Despite the built-in privacy light, no one in countless public settings has ever visibly noticed the glasses on my face. I’ve started to spot them on others, and that recognition brings its own discomfort. Technical assurances ring hollow when reports surface of a simple, inexpensive modification that can disable the indicator light. On my spouse’s pair, the light stopped functioning entirely, yet the glasses continued to record video without any visible signal.

This context makes Meta’s exploration of a feature to identify strangers with public Meta profiles seem characteristic of the company. Such a tool has potential benefits, such as assisting people who are blind or have low vision or aiding memory in social situations, but its broad release would be like opening Pandora’s box. Appropriate use in specific, consensual settings is vastly different from unleashing it upon the general public.

The core issue is that smart glasses manufacturers have never solved the “glasshole” problem that helped doom the original Google Glass. Placing powerful, discreet recording devices in everyone’s hands and simply advising responsible use is insufficient. Meta’s privacy policy essentially does just that. There are already disturbing reports of individuals recording people without consent. In response to such incidents, Meta has typically just pointed to its terms of service and the LED lights, reiterating that users should behave safely. This passive stance does little to build trust or prevent abuse.

Public sentiment toward this technology is volatile and often hostile. Online, the glasses are derisively labeled as spy glasses or fascism sunnies, accompanied by violent imagery of them being destroyed. While most such comments are hyperbole, they reflect a genuine anger. A real-world incident in New York City, where a woman was celebrated for snatching and breaking an influencer’s glasses, underscores that this resentment can boil over into physical action.

It’s crucial to acknowledge that smart glasses are not inherently evil. For the blind and visually impaired, this technology can be life-changing, offering new independence. Advocates see immense potential for assisting the deaf, hard of hearing, and those with mobility challenges. Yet, even within accessibility communities, trust in Meta is not universal. Some bristled at the framing of facial recognition primarily as an accessibility feature in recent reports, while fans of discontinued Meta services feel the company has abandoned vulnerable users before.

The current revival of smart glasses is on shaky ground. Meta’s damaged privacy reputation is its biggest obstacle to mainstream adoption. While many trade privacy for convenience, perception ultimately dictates success. Other companies have faced severe backlash for data partnerships and features, forcing them to clarify policies and backpedal. A smarter strategy for Meta would involve a complete, proactive overhaul of its privacy approach to demonstrably protect users.

Many factors contributed to the failure of Google Glass: its awkward design, high cost, and user behavior. Critically, there were also moments of outright public rejection, with people physically removing the devices from others’ faces. Meta has successfully launched a new generation of smart glasses, solving many early design flaws. However, it cannot escape its own legacy. As other tech giants eye the market, the problem of irresponsible users remains. All it would take is a major breach of public trust to send smart glasses back into the realm of science fiction.

(Source: The Verge)