AWS Outage Exposes Widespread Risk of Provider Dependency

Summary

– A DNS failure in AWS’s US-EAST-1 region caused widespread outages affecting services like Signal, Reddit, and Amazon for several hours.

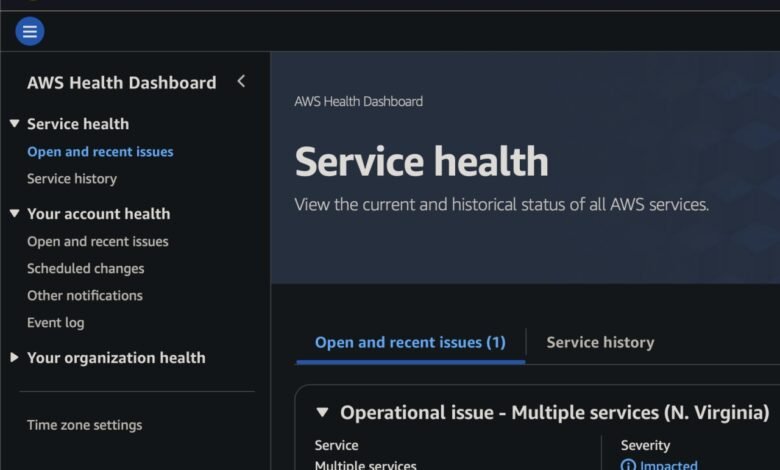

– The incident began on October 20, and recovery was still under way at the time of reporting, with ongoing work to restore services such as EC2, ECS, and Glue.

– According to a security expert, the outage stemmed from an internal DNS failure, a type of fault often caused by configuration errors or monitoring lapses, and there were no signs of a security breach.

– Such outages highlight the fragility of extensive software supply chains, where a single AWS issue can disrupt thousands of client services and businesses.

– Users and organizations are advised to have disaster recovery plans and alternative communication channels to mitigate the impact of similar disruptions.

A significant disruption to Amazon Web Services (AWS) caused a cascade of failures across the internet, underscoring the profound risks of concentrated reliance on a single cloud provider. The outage, which originated in the US-EAST-1 region, left thousands of popular websites and online services inaccessible to users. Major platforms including Signal, Snapchat, Fortnite, Starbucks, Reddit, Coinbase, Ring, and Venmo experienced downtime, as did Amazon’s own services such as Alexa and third-party platforms including Apple TV and Apple Music. The incident began in the early hours of October 20 and persisted for an extended period, and services did not immediately return to full capacity.

AWS first acknowledged the problem in a status update saying it was investigating increased error rates and latencies. Over the following hours, the company issued numerous follow-up updates. A later update described ongoing efforts to restore normal operations, noting progress in reducing throttling of EC2 instance launches and in the recovery of dependent services such as ECS and Glue. It also reported that Lambda invocations had fully recovered and that a backlog of events was expected to be processed within a couple of hours.

According to cybersecurity experts, the root cause was an internal DNS failure within AWS’s cloud infrastructure. Such failures are not uncommon and often stem from misconfigurations or from lapses in tracking critical expiration dates for configurations and certificates. Although initial reports found no evidence of a malicious breach, the widespread unavailability of services still qualifies as a significant cyber incident because of its impact on client operations.
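One of the failure modes mentioned above, an expiration date that nobody is watching, is straightforward to guard against in principle. The sketch below is illustrative only: it is not AWS tooling, and the report does not say an expired certificate caused this outage. It assumes the certificate's `notAfter` string is in the format Python's standard `ssl` module returns from `getpeercert()` (for example `"Jan  5 09:34:43 2018 GMT"`), and the 30-day warning threshold is a hypothetical choice.

```python
import ssl
import time
from typing import Optional

# Hypothetical alert threshold: flag certificates with fewer than 30 days left.
WARN_DAYS = 30
SECONDS_PER_DAY = 86400


def days_until_expiry(not_after: str, now: Optional[float] = None) -> float:
    """Return the days remaining before a certificate's notAfter time.

    `not_after` is assumed to use the format produced by
    ssl.SSLSocket.getpeercert(), e.g. "Jan  5 09:34:43 2018 GMT".
    A negative result means the certificate has already expired.
    """
    expires_at = ssl.cert_time_to_seconds(not_after)  # seconds since the epoch, UTC
    current = time.time() if now is None else now
    return (expires_at - current) / SECONDS_PER_DAY


def needs_renewal(not_after: str, now: Optional[float] = None,
                  warn_days: int = WARN_DAYS) -> bool:
    """True if the certificate expires within `warn_days` or has already expired."""
    return days_until_expiry(not_after, now) < warn_days
```

In practice a scheduled job would run a check like this across every certificate and configuration with an expiry date and raise an alert well before the deadline, rather than discovering the lapse when resolution or validation starts failing.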

These kinds of large-scale outages happen with some regularity, serving as a stark reminder of the interconnected nature of the modern digital ecosystem. A seemingly minor technical glitch at a major provider like Amazon can create a domino effect, crippling countless businesses and services that depend on that infrastructure. The impact of a four-hour outage can vary dramatically, from a minor inconvenience for some to a potentially catastrophic event for businesses in critical sectors where uptime is essential.

For organizations and individuals, this event highlights the critical importance of preparedness and redundancy. Experts strongly recommend having a Disaster Recovery Plan that includes predefined alternative communication channels, such as different apps, traditional phone calls, SMS, or even radio. Establishing such contingency plans in advance is a fundamental best practice for ensuring operational resilience and the ability to coordinate an effective response when primary systems fail.

(Source: ITWire Australia)