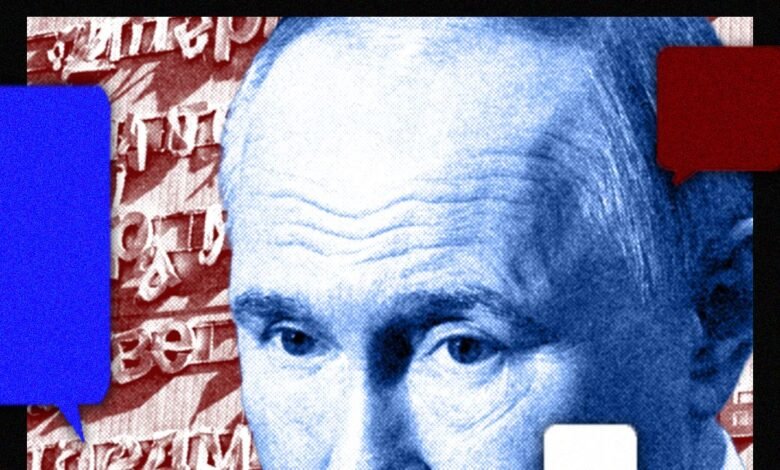

Chatbots Spread Sanctioned Russian Propaganda

Summary

– Major AI chatbots including ChatGPT, Gemini, DeepSeek, and Grok are citing Russian state propaganda sources when answering questions about the war in Ukraine.

– Researchers found that nearly 20% of responses about Russia’s war cited Russian state-attributed sources, and that propaganda exploits data voids where legitimate information is scarce.

– The study raises concerns about chatbots’ ability to restrict sanctioned media in the EU as they become popular alternatives to search engines.

– Researchers tested the chatbots with 300 questions in five languages about NATO, peace talks, and war crimes, finding propaganda issues persisted months later.

– OpenAI stated it takes measures to prevent misinformation spread through ChatGPT and is working to improve its models and platforms.

A recent investigation reveals that several prominent AI chatbots, including OpenAI’s ChatGPT, Google’s Gemini, DeepSeek, and xAI’s Grok, are disseminating content from sanctioned Russian state media outlets when responding to user inquiries about the war in Ukraine. The study, conducted by the Institute for Strategic Dialogue (ISD), found that these platforms frequently reference sources tied to Russian intelligence or pro-Kremlin narratives, effectively amplifying disinformation.

Researchers found that Russian propaganda exploits data voids (situations where legitimate, real-time information is scarce) to inject misleading claims into chatbot responses. According to the report, nearly 20% of the answers the four chatbots gave to questions about the war cited Russian state-attributed sources. This raises significant concerns about the capacity of large language models to comply with EU sanctions, which explicitly target numerous Russian media organizations for their role in spreading disinformation.

Pablo Maristany de las Casas, the ISD analyst leading the research, emphasized the dilemma this presents. He questioned how chatbots should handle referencing these sources, given that many are officially sanctioned within the European Union. As more individuals turn to AI assistants instead of traditional search engines for immediate information, the potential for sanctioned propaganda to reach wide audiences grows substantially. Data from OpenAI indicates that ChatGPT alone averaged around 120.4 million monthly active users in the EU during the six months ending September 30, 2025.

The researchers posed 300 different questions, ranging from neutral to biased and deliberately misleading, covering topics such as NATO’s role, ongoing peace negotiations, Ukrainian military conscription, refugee situations, and alleged war crimes. Queries were submitted in English, Spanish, French, German, and Italian through separate accounts during testing in July 2025. Follow-up checks in October confirmed that the same issues with propaganda citations persisted.

Since Russia’s full-scale invasion of Ukraine in February 2022, European authorities have imposed sanctions on at least 27 Russian media entities, accusing them of distributing false information as part of a broader strategy to destabilize European nations. Among the outlets cited by the chatbots were Sputnik Globe, Sputnik China, RT (formerly Russia Today), EADaily, the Strategic Culture Foundation, and the R-FBI. Some responses also referenced Russian disinformation networks and pro-Kremlin social media influencers.

This is not the first time such concerns have emerged; earlier studies found that ten of the most widely used chatbots similarly reproduced Russian strategic narratives. In response to these findings, an OpenAI spokesperson stated that the company actively works to prevent the misuse of its tools for spreading false or misleading information, including content linked to state-backed influence operations. They noted that addressing these challenges is an ongoing effort, requiring continuous improvements to both AI models and platform safeguards.

(Source: Wired)