How Bias Corrupts Medical Practice

Summary

– The White House’s “Make America Healthy Again” report contained fabricated citations, a common issue with generative AI systems.

– AI-generated falsehoods have previously appeared in legal proceedings, with lawyers submitting fictitious cases and citations in court.

– The MAHA report ironically recommended addressing the health research sector’s replication crisis while using phantom evidence itself.

– The government plans to prioritize AI in healthcare for diagnosis and treatment despite known problems with AI “hallucinations.”

– Undisclosed AI use in health research could create biased feedback loops and help scientific fraudsters legitimize false claims.

Seeking to resolve a bitter disagreement, two neighbors once approached the wise Mullah Nasreddin. The first man laid out his argument in full, and the sage responded, “You are absolutely right!” The second neighbor then presented his completely different perspective, and Nasreddin again declared, “You are absolutely right!” A bystander, utterly bewildered, protested that both men could not possibly be correct. Nasreddin turned to him and said, “You are absolutely right, too.”

This ancient tale of conflicting truths finds a modern parallel in a recent White House health initiative. The “Make America Healthy Again” report faced significant criticism after investigators discovered it referenced several non-existent research studies. Such fabricated citations are a known issue with generative artificial intelligence systems, which often invent plausible-sounding sources and data to support their output. Officials initially dismissed the reporting as inaccurate, then conceded that minor citation errors had occurred.

The situation carries a profound irony. The report’s principal recommendation focused on tackling the scientific “replication crisis,” a widespread problem in which research findings cannot be reproduced by other teams. Yet the document itself relied on phantom evidence, undermining its own core message.

This incident is not an isolated one. Last year, major news outlets documented numerous legal cases in which attorneys submitted AI-generated court citations, including fictitious case law and judicial opinions, in official proceedings. Once the fabrications were discovered, the lawyers were forced to explain to judges how entirely made-up legal precedents had infiltrated their arguments.

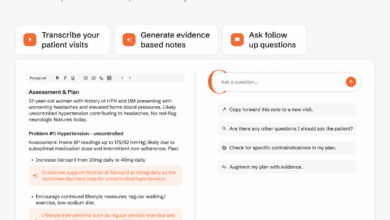

Despite these widely publicized failures, the newly released MAHA policy roadmap instructs the Department of Health and Human Services to aggressively prioritize AI for tasks like diagnosing disease early, creating personalized treatment plans, and predicting patient outcomes. The enthusiastic push to integrate AI across medicine might be understandable if the technology’s tendency to “hallucinate” information were a simple bug. However, many experts within the AI industry itself acknowledge that this fundamental flaw may be impossible to fully eradicate.

The implications for medical research and clinical decision-making are profound. Using AI in studies without proper disclosure risks creating a dangerous feedback loop. Fabricated research, once published, can be absorbed into the datasets used to train the next generation of AI models, thereby amplifying and cementing the original errors and biases. Compounding this threat, a recent academic study exposed an entire industry of scientific fraudsters who could leverage AI tools to make their deceptive claims appear far more legitimate and credible.

(Source: Ars Technica)