AI Agents’ Biggest Weakness: The Protocol That Stops Them All

Summary

– Model Context Protocol (MCP) is middleware designed to enhance AI by connecting generative AI programs to external resources like databases and software.

– Even top AI models such as Gemini 2.5 Pro and GPT-5 struggle with MCP, often needing many interaction rounds and stalling or failing on complex tasks.

– Multiple benchmarks reveal that AI model performance declines as tasks become more complex, involving multiple servers and dependencies.

– Larger, more powerful AI models generally perform better on MCP tasks, with some open-source models rivaling proprietary ones, but all face challenges in long-horizon planning and efficiency.

– Fine-tuning AI models with specialized datasets like Toucan can improve MCP performance, but adapting to private, non-standard resources remains an open challenge.

Even the most advanced artificial intelligence models face significant hurdles when operating through the Model Context Protocol (MCP), a middleware system designed to extend AI models by linking them with external software and databases. Recent benchmark studies consistently show that as tasks grow more complex, AI performance declines noticeably, exposing a critical weakness in current systems.

Multiple research teams have documented these challenges. A collaborative group from Accenture, MIT-IBM Watson AI Lab, and UC Berkeley developed MCP-Bench, a collection of 250 tasks specifically designed to test AI agents using MCP. Their findings indicate that even cutting-edge models struggle with capabilities such as following dependency chains, selecting the right tools, and planning over long horizons, and that performance drops significantly when tasks expand from single-server to multi-server environments. Similarly, researchers from the University of Science and Technology of China found in their MCP-AgentBench evaluations that every tested model became less effective as tasks grew more complex.

The National University of Singapore team further confirmed these limitations, noting that even top-performing models like OpenAI’s GPT-5 still fall into failure modes marked by repetitive or exploratory interactions that make no meaningful progress. These consistent findings across independent research efforts point to a fundamental gap in AI’s ability to handle sophisticated multi-step processes through MCP.

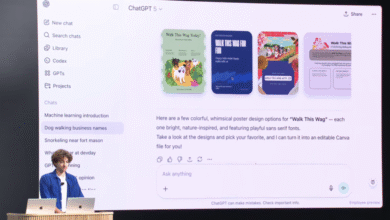

MCP functions as middleware that enables client-server interactions for AI systems. Originally introduced by Anthropic, the protocol aims to provide a standardized, secure method for connecting large language models and AI agents to external resources such as databases and customer relationship management software. While this standardization reduces the number of custom connections needed to reach multiple resources, it does not guarantee that AI models will use the protocol effectively.
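MCP messages are carried as JSON-RPC 2.0, and the specification defines a `tools/call` method whose parameters name a tool and supply its arguments. The Python sketch below only builds such a request, to show what a model’s decision ultimately turns into on the wire. The tool name `search_parks` and its arguments are hypothetical, and transport, session initialization, and the server’s response are left out.

```python
import json
from itertools import count

# Monotonic request ids: JSON-RPC 2.0 matches responses to requests by id.
_next_id = count(1)


def make_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP-style 'tools/call' request as a JSON-RPC 2.0 message."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_next_id),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


# Hypothetical tool name and arguments, for illustration only.
msg = make_tool_call("search_parks", {"state": "CO", "activity": "hiking"})
print(msg)
```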

When connected through MCP, an AI model must generate output that accomplishes several objectives at once. It needs to formulate a plan to answer the query by selecting appropriate external resources, determine the correct order in which to contact the MCP servers that provide access to those resources, and structure multiple information requests so they combine into a final response. Research shows that while stronger models like Gemini 2.5 Pro and GPT-5 outperform less capable ones, all models remain limited in how well they manage these challenges. A common problem is taking too many steps to retrieve information, even when the initial approach appears sound.
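The sequencing part of that job can be pictured as ordering tool calls so that each runs only after the calls whose results it needs. The sketch below uses Python’s standard `graphlib` module to group a hypothetical trip-planning plan into waves; calls in the same wave have no pending dependencies and could in principle be issued in parallel. The tool names are illustrative assumptions, and real models express such plans implicitly through their generated output rather than as an explicit graph.

```python
from graphlib import TopologicalSorter

# Hypothetical plan: each tool call maps to the calls whose results it needs.
# Names are illustrative, not real MCP tools.
plan = {
    "search_parks": set(),
    "get_park_details": {"search_parks"},
    "get_alerts": {"search_parks"},
    "get_directions": {"search_parks"},
    "find_campgrounds": {"get_park_details"},
    "compose_itinerary": {"get_alerts", "find_campgrounds", "get_directions"},
}


def execution_waves(graph: dict[str, set[str]]) -> list[list[str]]:
    """Group calls into waves; every call in a wave has its inputs ready,
    so calls within one wave could be issued concurrently."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the plan is circular
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)  # mark the whole wave as completed
    return waves


for i, wave in enumerate(execution_waves(plan), start=1):
    print(i, wave)
```

For this plan the sketch yields four waves: `search_parks` alone, then the three calls that depend only on it, then `find_campgrounds`, and finally `compose_itinerary`. A model that issued these calls one per turn would need six turns instead of four, which is the kind of inefficiency the benchmarks count.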

The benchmark tests follow a similar methodology, presenting AI models with challenging information queries and giving them access to various MCP servers and their associated resources. These typically include publicly available services like Google Search, Wikipedia, and other information repositories. One example from the Accenture research involved planning a week-long hiking trip starting and ending in Denver. The AI had to use multiple MCP-enabled services, including Google Maps and US national park websites, calling specific tools to find parks, check park details, review alerts, and locate visitor centers, campgrounds, and events.

These benchmarks represent an evolution from simple function-calling challenges to more comprehensive evaluations. They require AI models to meet multiple criteria, including converting natural language prompts into requests that conform to the message formats defined by MCP’s JSON-based schema. Adhering to the schema is only the basic requirement. At higher levels, agents must identify the correct tools in large, heterogeneous tool spaces when given ambiguous task descriptions, which requires them to resolve semantic variations, handle naming inconsistencies, and avoid superficially plausible but irrelevant tools.
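MCP servers describe each tool’s expected arguments with a JSON Schema (the tool’s `inputSchema`). The sketch below checks a proposed call against a small subset of that schema language: required fields, basic property types, and `additionalProperties: false`. It is an illustration under those limits, not a full validator (a dedicated JSON Schema library would cover the rest), and the example schema and its `park_code` field are hypothetical. The second call shows how a naming inconsistency such as `parkCode` versus `park_code` surfaces as schema errors.

```python
# Maps a subset of JSON Schema type names to Python types.
_JSON_TYPES = {
    "string": str,
    "integer": int,
    "number": (int, float),
    "boolean": bool,
    "object": dict,
    "array": list,
}


def check_arguments(schema: dict, args: dict) -> list[str]:
    """Return the problems with `args` under a flat object schema.

    Covers only 'required', per-property 'type', and
    'additionalProperties': false; not a full JSON Schema validator."""
    problems = []
    props = schema.get("properties", {})
    for name in schema.get("required", []):
        if name not in args:
            problems.append(f"missing required argument '{name}'")
    for name, value in args.items():
        if name not in props:
            if schema.get("additionalProperties") is False:
                problems.append(f"unexpected argument '{name}'")
            continue
        expected = props[name].get("type")
        py_type = _JSON_TYPES.get(expected)
        if py_type is None:
            continue  # type not declared or outside this subset: skip
        # bool is a subclass of int in Python, so reject it for numeric types.
        wrong_bool = isinstance(value, bool) and expected != "boolean"
        if not isinstance(value, py_type) or wrong_bool:
            problems.append(f"'{name}' should be {expected}, got {type(value).__name__}")
    return problems


# Hypothetical schema for an alerts tool, for illustration only.
alerts_schema = {
    "type": "object",
    "properties": {
        "park_code": {"type": "string"},
        "limit": {"type": "integer"},
    },
    "required": ["park_code"],
    "additionalProperties": False,
}

print(check_arguments(alerts_schema, {"park_code": "romo", "limit": 5}))  # → []
# Reports the missing 'park_code', the unexpected 'parkCode', and the string 'limit'.
print(check_arguments(alerts_schema, {"parkCode": "romo", "limit": "5"}))
```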

The benchmarks typically measure how many different resources a program accesses and how many interaction turns it needs, as indicators of how efficiently a model uses the available resources. MCP-Bench specifically evaluates structural coherence, dependency awareness, parallelism efficiency, and reflective adaptation. Its tasks include not only linear workflows but also complex compositions that require concurrent interactions across multiple servers in pursuit of multiple objectives, all testing what is termed “long-horizon planning.” When a model needs more and more turns to obtain the information it needs from MCP servers, that suggests it is planning its use of resources poorly.
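A simplified version of such efficiency bookkeeping is sketched below. It assumes a trace recorded as a list of turns, each holding the tool calls the model issued together, and reports turns, calls, distinct servers and tools, and average calls per turn as a rough proxy for parallelism. The metric names and the `parks` and `maps` server names are hypothetical, and MCP-Bench’s evaluation dimensions listed above go well beyond these counts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolCall:
    server: str  # which MCP server handled the call (hypothetical names)
    tool: str    # which tool on that server was invoked


def efficiency_metrics(turns: list[list[ToolCall]]) -> dict:
    """Summarize a trace where each turn is a batch of calls issued together."""
    calls = [c for turn in turns for c in turn]
    return {
        "turns": len(turns),
        "calls": len(calls),
        "distinct_servers": len({c.server for c in calls}),
        "distinct_tools": len({(c.server, c.tool) for c in calls}),
        # Average batch size: higher means more calls were issued in parallel.
        "calls_per_turn": len(calls) / len(turns) if turns else 0.0,
    }


# Hypothetical three-turn trace for the trip-planning task.
trace = [
    [ToolCall("parks", "search_parks")],
    [
        ToolCall("parks", "get_alerts"),
        ToolCall("parks", "get_park_details"),
        ToolCall("maps", "get_directions"),
    ],
    [ToolCall("parks", "find_campgrounds")],
]
print(efficiency_metrics(trace))
```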

All three major studies reported that larger, more powerful AI models generally outperform smaller ones, suggesting that as models improve overall, their performance on MCP-related challenges may improve too. The National University of Singapore team noted that stronger models succeed through better decision-making and targeted exploration rather than blind trial and error. Accenture researchers found that the real differentiator is robustness to scaling, where top-tier models show clear advantages on long-horizon, cross-server tasks. Interestingly, the University of Science and Technology of China team observed that some open-source models, such as Qwen3-235B, achieved top scores, rivaling and even surpassing their proprietary counterparts.

Despite these relative successes, significant challenges remain across all models. MCP-Bench tasks are inherently multi-step and often involve chaining heterogeneous tools across servers; even strong models typically need several interaction rounds and struggle with dependency chain compliance, tool selection in noisy environments, and long-horizon planning. Performance also generally declines as tasks move from single-server to multi-server scopes, and drops again as call dependencies grow from single calls to complex sequential chains. In short, as MCP tasks grow more complex, every model has a harder time, though some cope considerably better than others.
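Dependency chain compliance can be illustrated with a simple rule: a call should not be issued until every call it depends on has returned in an earlier turn. The sketch below applies that rule to a hypothetical trace, treating calls in the same turn as concurrent and numbering turns from 0. It is a hypothetical check written for illustration, not the scoring procedure any of the benchmarks uses, and the tool names and dependency map are assumptions.

```python
def dependency_violations(
    turns: list[list[str]], deps: dict[str, set[str]]
) -> list[str]:
    """List calls issued before every call they depend on had completed in an
    earlier turn. Calls within the same turn are treated as concurrent."""
    # Record the first turn (0-based) in which each tool was called.
    first_turn: dict[str, int] = {}
    for t, turn in enumerate(turns):
        for tool in turn:
            first_turn.setdefault(tool, t)

    violations = []
    for t, turn in enumerate(turns):
        for tool in turn:
            for dep in sorted(deps.get(tool, set())):
                done_at = first_turn.get(dep)
                if done_at is None:
                    violations.append(f"{tool} needs {dep}, which was never called")
                elif done_at >= t:
                    violations.append(f"{tool} (turn {t}) did not wait for {dep} (turn {done_at})")
    return violations


# Hypothetical dependency map and a trace that breaks it twice.
deps = {
    "get_alerts": {"search_parks"},
    "get_park_details": {"search_parks"},
    "find_campgrounds": {"get_park_details"},
}
trace = [["search_parks", "get_alerts"], ["find_campgrounds"]]
for problem in dependency_violations(trace, deps):
    print(problem)
```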

The clear implication of these benchmarks is that AI models will have to adapt, and possibly develop in new directions, to cope with the demands MCP places on them. All three studies identify the same problem: performance degrades as AI models access more MCP servers, with the complexity of multiple resources overwhelming even models that initially show good planning capabilities. The National University of Singapore team highlighted the challenge of managing an ever-growing history of MCP interactions, along with a core unreliability that can only be addressed by building agents with robust error-handling and self-correction capabilities.
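One generic pattern for that kind of error handling and self-correction is to retry a failed call a bounded number of times, feeding the error text back so the arguments can be repaired, while logging each attempt to a fixed-size history so old interactions fall away instead of piling up. The sketch below assumes hypothetical `call_tool` and `revise` callables and a hypothetical `ToolError` exception; in a real agent, `revise` would be another model call, and a fixed-length window is only one of several ways to manage a growing history.

```python
from collections import deque
from typing import Callable


class ToolError(Exception):
    """Raised by a (hypothetical) tool when a call fails."""


def call_with_self_correction(
    call_tool: Callable[[dict], str],
    revise: Callable[[dict, str], dict],
    args: dict,
    history: deque,
    max_attempts: int = 3,
) -> str:
    """Retry a tool call, letting `revise` repair the arguments from the error
    text. Every attempt is logged to `history`; a deque with maxlen drops the
    oldest entries instead of growing without bound."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            result = call_tool(args)
        except ToolError as err:
            history.append(("error", args, str(err)))
            if attempt == max_attempts:
                raise  # give up and surface the last error
            args = revise(args, str(err))  # let the model repair its call
        else:
            history.append(("ok", args, result))
            return result
    raise AssertionError("unreachable")


def fake_tool(args: dict) -> str:
    # Stand-in tool that insists on a snake_case argument name.
    if "park_code" not in args:
        raise ToolError("missing required argument 'park_code'")
    return f"alerts for {args['park_code']}"


def fake_revise(args: dict, error: str) -> dict:
    # Stand-in for asking the model to fix its call: a hard-coded rename.
    fixed = dict(args)
    if "parkCode" in fixed:
        fixed["park_code"] = fixed.pop("parkCode")
    return fixed


history = deque(maxlen=20)  # keep only the 20 most recent attempts
result = call_with_self_correction(fake_tool, fake_revise, {"parkCode": "romo"}, history)
print(result)
print(len(history))
```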

The most immediate way to improve AI models’ performance may be to train them specifically for MCP interactions. Researchers from the University of Washington and MIT-IBM Watson AI Lab have developed Toucan, a dataset containing millions of examples of MCP interactions between AI programs and external tools, which makes it the largest publicly available tool-agentic dataset to date. When used for fine-tuning (further training after the main pre-training stage), Toucan enabled relatively small open-source models such as Qwen3-32B to outperform much larger models, including DeepSeek V3 and OpenAI’s o3-mini, on the same benchmark tests used by other researchers.

While Toucan’s results are encouraging, significant questions remain regarding MCP’s application to non-public, non-standard resources in private data centers. Whether fine-tuning for general MCP efficiency will improve performance on specific corporate installations like Salesforce CRM or Oracle databases remains uncertain. The ultimate answers will emerge as chief information officers implement MCP in real-world scenarios and evaluate the results.

(Source: ZDNET)