Claude for Chrome Enters Beta, Prompt Injection Risks Loom

▼ Summary

– Anthropic is testing a Chrome extension that allows its Claude AI to control web browsers, enabling it to perform tasks like scheduling and email management.

– The company is limiting the pilot to 1,000 premium users to address security vulnerabilities, contrasting with competitors’ broader releases of similar AI systems.

– Internal testing revealed prompt injection attacks could trick the AI into harmful actions, with a 23.6% success rate before mitigations were implemented.

– Computer-controlling AI has the potential to revolutionize enterprise automation by working with any software interface, reducing reliance on custom integrations.

– Academic researchers have released an open-source alternative, OpenCUA, to compete with proprietary systems and accelerate adoption in hesitant enterprises.

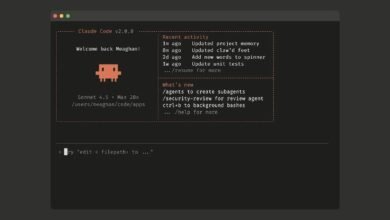

Anthropic has launched a beta version of a Chrome extension that enables its Claude AI assistant to operate directly within users’ web browsers, a significant step into the rapidly growing field of agentic AI systems. This move places the company alongside competitors like OpenAI and Microsoft, though Anthropic is taking a notably cautious approach by limiting initial access to 1,000 trusted users on its premium Max plan. The goal is to identify and address critical security vulnerabilities before a broader release, reflecting the high stakes involved in deploying AI that can autonomously control digital interfaces.

The extension, called Claude for Chrome, allows the AI to perform a range of actions such as scheduling appointments, managing emails, and completing forms by interacting with web pages just as a human would. This functionality represents a major shift from conversational chatbots to systems capable of executing multi-step tasks across applications, a development many experts see as the next major phase in artificial intelligence. However, this increased capability comes with substantial risks, particularly around security and unintended behavior.

During internal testing, Anthropic discovered that malicious actors could use hidden instructions, a technique known as prompt injection, to manipulate the AI into performing harmful actions without user consent. In one simulated attack, Claude was tricked into deleting a user’s emails after receiving a disguised command embedded in a fraudulent security notice. Without safeguards, these types of attacks succeeded nearly a quarter of the time, underscoring the urgent need for robust protective measures.

While Anthropic proceeds carefully, other tech giants are moving more aggressively. OpenAI already offers its Operator agent to all ChatGPT Pro subscribers, and Microsoft has integrated similar computer-control features into its Copilot Studio platform for enterprise clients. These competing systems are designed to automate complex workflows, from booking travel to processing invoices, potentially reducing the need for expensive custom software integrations.

The emergence of computer-controlling AI could revolutionize how businesses handle automation, especially for processes that span multiple applications without standardized APIs. Rather than relying on fragile robotic process automation (RPA) tools that break with interface updates, AI agents promise adaptability and broader compatibility. Salesforce’s CoAct-1 system, for example, has demonstrated impressive success rates by blending traditional automation with AI-driven code generation.

In response to the dominance of proprietary systems, academic researchers are developing open alternatives. The University of Hong Kong recently released OpenCUA, an open-source framework trained on thousands of human demonstrations across major operating systems. This model performs on par with commercial offerings and could encourage wider adoption among organizations wary of vendor lock-in.

To mitigate risks, Anthropic has implemented several security layers in Claude for Chrome, including site-specific permissions, mandatory confirmations for high-risk actions, and blocks on sensitive categories like financial and adult content. These measures have already reduced the success rate of certain attacks, but the company acknowledges that more work is needed before the tool is ready for general use.

The rise of AI agents capable of navigating and manipulating software interfaces marks a turning point in human-computer interaction. These systems promise to make automation more accessible and flexible, but they also introduce novel security challenges that must be addressed proactively. For enterprise leaders, the technology offers compelling efficiency gains, but also demands careful evaluation of safety and governance before integration into critical workflows.

(Source: VentureBeat)