5 Surprising Truths About How ChatGPT Really Works

Summary

– AI chatbots are trained using human feedback to steer them toward safe and ethical responses, with human annotators ranking candidate answers against moral guidelines.

– AI chatbots process language as tokens, units that may be whole words, word fragments, or single characters, which can lead to unexpected interpretations.

– AI chatbots have knowledge cutoffs and may be unaware of events after their last training update, so they need web searches for current information.

– AI chatbots often “hallucinate,” confidently generating false or nonsensical claims, because they are built to produce coherent text rather than to verify facts.

– AI chatbots use built-in calculators for precise arithmetic in complex tasks, combining reasoning steps with computational tools for reliable results.

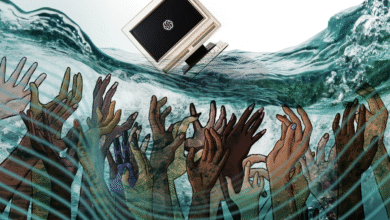

Understanding how AI chatbots like ChatGPT function reveals both their capabilities and limitations. While these tools have become indispensable for many, few users grasp the intricate processes powering their responses. From human-guided training to mathematical quirks, several surprising factors shape what these systems can, and cannot, do effectively.

1. Human oversight plays a critical role in refining AI behavior

Before becoming useful assistants, models undergo extensive pre-training on vast text datasets, learning to predict language patterns. Without careful alignment by human reviewers, however, they might generate harmful or misleading content. Teams of annotators rank candidate responses, and those rankings are used to steer chatbots toward neutral, constructive answers that stay within ethical boundaries. For instance, instead of detailing dangerous instructions, a properly aligned model will redirect users to verified sources.
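
As a rough illustration of how annotator rankings could become a training signal, the sketch below turns one best-to-worst ranking into (preferred, rejected) pairs. The function name `ranking_to_pairs` and the sample responses are hypothetical, and the pairing step itself is an assumption; the source does not describe the actual pipeline.

```python
from itertools import combinations

def ranking_to_pairs(ranked_responses):
    """Turn a best-to-worst ranking into (preferred, rejected) pairs."""
    # combinations() preserves input order, so the first item of each
    # pair is always the one the annotator ranked higher.
    return list(combinations(ranked_responses, 2))

# Hypothetical annotator ranking for one prompt, best response first.
ranking = [
    "I can't help with that, but here is a verified safety resource.",
    "I'm not sure.",
    "Here are step-by-step dangerous instructions...",
]

pairs = ranking_to_pairs(ranking)
for preferred, rejected in pairs:
    print(f"prefer: {preferred!r}\n  over: {rejected!r}")
```

A ranking of three responses yields three such pairs, each saying only that one answer was judged better than another.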

2. Language processing works differently for AI than for humans

Rather than interpreting full words, chatbots break inputs into tokens, units that can be whole words, parts of words, or symbols. This method allows efficient analysis but sometimes leads to odd splits, like dividing “ChatGPT” into pieces along the lines of “Chat,” “G,” and “PT” (the exact split depends on the tokenizer). Such tokenization quirks occasionally affect how the AI interprets queries.
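
To make the idea concrete, here is a minimal sketch of a greedy longest-match tokenizer. The vocabulary `TOY_VOCAB` is invented for illustration and is not the one ChatGPT uses; production tokenizers learn their vocabularies from data and use different algorithms, so treat this only as a picture of how a fixed vocabulary can force odd splits.

```python
def tokenize(text, vocab):
    """Greedy longest-match tokenizer over a fixed vocabulary."""
    tokens = []
    i = 0
    while i < len(text):
        # Try the longest possible piece first, then shrink it.
        for j in range(len(text), i, -1):
            piece = text[i:j]
            if piece in vocab:
                tokens.append(piece)
                i = j
                break
        else:
            # No vocabulary entry matched: fall back to one character.
            tokens.append(text[i])
            i += 1
    return tokens

# Hypothetical toy vocabulary, NOT the one any real chatbot uses.
TOY_VOCAB = {"Chat", "G", "PT", " is", " mar", "vel", "lous"}

print(tokenize("ChatGPT is marvellous", TOY_VOCAB))
# → ['Chat', 'G', 'PT', ' is', ' mar', 'vel', 'lous']
```

Because the toy vocabulary has no entry for the whole name or the whole adjective, both end up split into fragments that mean little on their own.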

3. Knowledge gaps are inevitable due to static training data

Unlike search engines, most AI models don’t update in real time. At the time of writing, ChatGPT’s current version, for example, relied on training data that extends only to June 2024. To answer questions about later events, it must fetch live data through integrated web searches, which introduces variables such as source reliability. Keeping models current remains a challenge, requiring periodic retraining with fresh datasets.
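
The decision to reach for live data can be pictured as a simple date comparison. The sketch below is an assumption-laden toy: `needs_web_search` is a hypothetical helper, the cutoff is taken from the June 2024 figure above (assumed here to mean the end of that month), and real systems decide when to search in ways the source does not describe.

```python
from datetime import date

# Cutoff based on the "June 2024" figure in the text; the exact day is
# an assumption, and real cutoffs vary by model version.
KNOWLEDGE_CUTOFF = date(2024, 6, 30)

def needs_web_search(topic_date, cutoff=KNOWLEDGE_CUTOFF):
    """Return True when the topic is newer than the training data."""
    return topic_date > cutoff

print(needs_web_search(date(2023, 11, 1)))  # → False
print(needs_web_search(date(2025, 1, 15)))  # → True
```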

4. Confidently wrong answers, known as hallucinations, are a persistent issue

Because chatbots are optimized to produce coherent, plausible text rather than to check facts, they sometimes fabricate plausible-sounding but incorrect details. Users might receive fabricated citations or misattributed research findings, which is why independent verification matters. Tools such as real-time fact-checking help, but they don’t eliminate errors entirely.
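
A toy example shows why fluency and accuracy can come apart. The sketch below is a tiny word-pair (bigram) model, far simpler than anything behind a real chatbot, trained on a hypothetical three-sentence corpus. It completes a prompt with whichever word most often followed the previous one, with no check on whether the result is true.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus; Spain's capital never appears in it.
CORPUS = [
    "paris is the capital of france",
    "paris is the largest city of france",
    "rome is the capital of italy",
]

def train_bigrams(sentences):
    """Count which word follows each word in the corpus."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows

def complete(prompt, follows):
    """Append the most frequent next word for the prompt's last word."""
    last = prompt.split()[-1]
    candidates = follows.get(last)
    if not candidates:
        return prompt  # nothing learned about this word
    best, _count = candidates.most_common(1)[0]
    return f"{prompt} {best}"

model = train_bigrams(CORPUS)
print(complete("madrid is the capital of", model))
# → madrid is the capital of france
```

The completion reads smoothly and is wrong: the model has only learned that "france" is the most common word after "of".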

5. Math skills rely on hidden computational aids

Although chatbots can handle complex arithmetic, they do not do it by reasoning alone. Instead, the model works through a problem step by step and hands the exact calculations to an embedded calculator. This hybrid approach improves accuracy, and it underscores that AI works best as a supplement to human judgment, not a replacement for it.
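
The hand-off to a calculator can be sketched as a small, restricted arithmetic evaluator. The `calculate` function below is a hypothetical stand-in, not the tool any particular chatbot uses: it assumes the model has already written the arithmetic as a plain expression, and it supports only the four basic operations and unary signs.

```python
import ast
import operator

# Operators the toy calculator accepts; anything else is rejected.
_BINARY = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}
_UNARY = {ast.USub: operator.neg, ast.UAdd: operator.pos}

def _is_number(node):
    """True for int/float literals (booleans are excluded)."""
    return (
        isinstance(node, ast.Constant)
        and isinstance(node.value, (int, float))
        and not isinstance(node.value, bool)
    )

def calculate(expression):
    """Evaluate a plain arithmetic expression without using eval()."""
    def _eval(node):
        if _is_number(node):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BINARY:
            return _BINARY[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY:
            return _UNARY[type(node.op)](_eval(node.operand))
        raise ValueError("unsupported expression")
    return _eval(ast.parse(expression, mode="eval").body)

# Hypothetical hand-off: the model writes the expression, the tool computes it.
print(calculate("56345 * 7865 * 345"))
```

Walking the parsed expression tree, rather than calling `eval()`, keeps the tool limited to arithmetic, so text that is not a plain calculation is rejected instead of executed.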

By recognizing these underlying mechanisms, users can better navigate AI interactions, leveraging strengths while mitigating weaknesses. Whether that means fact-checking outputs or keeping token-based processing in mind, informed use leads to more reliable and productive outcomes.

(Source: Science Alert)