Brain Implant Enables Instant Speech for Neural Patients

Breakthrough Brain Implant Translates Thoughts Into Speech Instantly

Summary

– Stephen Hawking, who had ALS, communicated with a cheek muscle sensor and a speech synthesizer at a rate of about one word per minute.

– Recent brain-computer interface (BCI) devices translate neural activity into text or speech, but they have suffered from latency and limited vocabularies.

– UC Davis scientists developed a neural prosthesis that converts brain signals directly into phonemes and words, advancing digital vocal tract technology.

– The UC Davis team aimed to create a flexible speech neuroprosthesis that lets paralyzed patients speak fluently, with expressive intonation and cadence.

– Previous neural prostheses, like Stanford’s brain-to-text system, had a 25% error rate, which marked real progress but was still too high for daily communication.

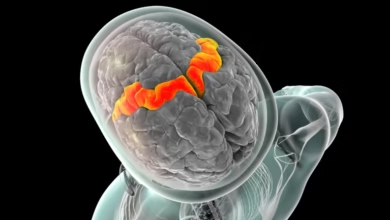

For decades, individuals with severe speech impairments, like the late physicist Stephen Hawking, relied on slow and laborious communication methods. Hawking famously used a cheek muscle sensor to painstakingly select letters, forming words at a rate of about one per minute before a speech synthesizer voiced them. While revolutionary for its time, this technology pales in comparison to today’s advancements in brain-computer interfaces (BCIs).

Researchers at the University of California, Davis, have now developed a neural prosthesis capable of converting brain signals directly into audible speech, complete with natural intonation and rhythm. Unlike previous systems that struggled with delays and limited vocabularies, this innovation could finally provide paralyzed patients with a seamless, expressive voice.

From Text to Natural Speech

Earlier BCIs focused primarily on translating neural activity into text, displaying words on a screen rather than producing spoken language. While impressive, these systems had significant drawbacks. A Stanford University team, led by Francis R. Willett, achieved brain-to-text conversion with a 25% error rate, meaning one in every four words was incorrect. “Three out of four words being right was groundbreaking, but still far from practical for everyday conversation,” explains Sergey Stavisky, a neuroscientist at UC Davis and co-author of the new study.
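To make the "one in every four words" figure concrete, here is a minimal Python sketch of a word error rate calculation. It assumes the common definition (word-level edit distance divided by the number of words in the intended sentence); the article does not say how the Stanford team computed its figure, and the function name and sample sentences are hypothetical.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words.

    Assumption: this is the textbook definition of word error rate,
    not necessarily the exact metric used in the studies discussed here.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution_cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,                      # word dropped by the decoder
                curr[j - 1] + 1,                  # extra word inserted by the decoder
                prev[j - 1] + substitution_cost,  # word matched or substituted
            ))
        prev = curr
    return prev[-1] / len(ref)


# Hypothetical example: one wrong word out of four gives a 25% error rate.
intended = "please bring me water"
decoded = "please bring me waiter"
print(f"{word_error_rate(intended, decoded):.0%}")
```

At that rate, a four-word request like the one above would contain one mistake on average, which is why such a system remains hard to rely on in everyday conversation.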

The UC Davis team aimed higher, striving for real-time speech synthesis that captures the nuances of human communication: pitch, emphasis, and rhythm. “We wanted patients to speak naturally, controlling their own cadence and expression,” says Maitreyee Wairagkar, the study’s lead researcher. Achieving this required overcoming multiple hurdles that had long plagued BCI technology.

A Leap Forward in Neural Decoding

Traditional speech prosthetics relied on predefined word banks, forcing users to select from a limited set of phrases. The new system, however, decodes neural signals into phonemes, the building blocks of speech, allowing for fluid, spontaneous conversation. By analyzing brain activity patterns associated with speech production, the device reconstructs intended vocalizations with remarkable accuracy.

This breakthrough could transform the lives of those with conditions like ALS, stroke-related paralysis, or traumatic brain injuries. Instead of struggling with slow, cumbersome interfaces, patients may soon regain the ability to speak in real time, expressing themselves as effortlessly as anyone else. While further refinements are needed, the technology marks a pivotal step toward fully restoring natural communication for those who have lost their voice.

(Source: Ars Technica)