Google DeepMind’s AI Now Powers Robots Directly

Summary

– Google DeepMind is releasing an on-device version of its Gemini Robotics AI model, enabling operation without an internet connection while maintaining efficiency.

– The flagship Gemini Robotics model helps robots perform a wide range of physical tasks, including ones they were not specifically trained on, by generalizing to new situations and handling dexterous manipulation.

– According to Carolina Parada, Google DeepMind's head of robotics, the on-device model delivers offline performance nearly as strong as that of the hybrid (cloud plus on-device) model.

– The model can adapt to new tasks with as few as 50 to 100 demonstrations and works across different robot types, including Apptronik's Apollo and the Franka FR3.

– Google is launching an SDK for the on-device model, allowing developers to evaluate and fine-tune it, with initial access limited to trusted testers.

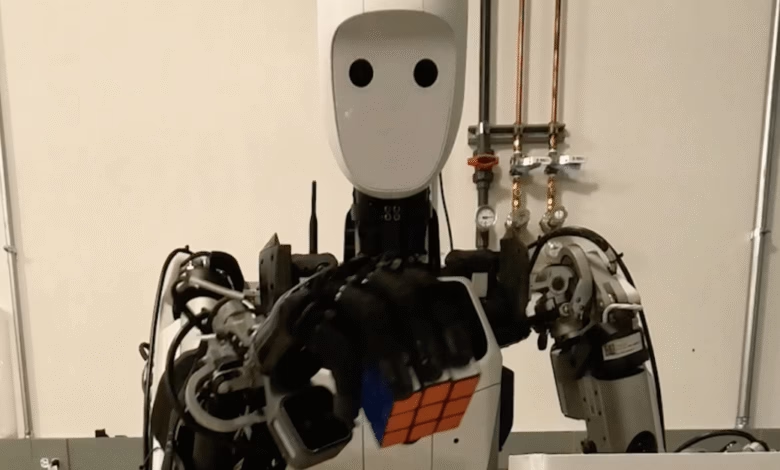

Google DeepMind has introduced an offline version of its Gemini Robotics AI that lets robots function without relying on cloud connectivity. This compact vision-language-action (VLA) model runs directly on robotic hardware and builds on the capabilities of the hybrid Gemini Robotics model launched earlier this year.

Unlike the flagship model, which can draw on cloud resources, this streamlined model runs entirely on the robot. It lets robots tackle diverse physical tasks, including ones outside their training data, by interpreting instructions, generalizing to unfamiliar scenarios, and executing precise movements.

Carolina Parada, Google DeepMind's head of robotics, points to the model's versatility: it can learn a new task from as few as 50 to 100 demonstrations. Although it was initially trained on Google's ALOHA robot, the model was adapted to other platforms, including Apptronik's Apollo humanoid and the bi-arm Franka FR3.

While the hybrid cloud-and-device version remains more capable, Parada describes the offline model as a strong alternative for environments with unreliable connectivity or strict data-security requirements. That makes it well suited to industrial settings, remote operations, and sensitive applications where depending on the cloud isn't feasible.

Alongside the release, Google is rolling out a software development kit (SDK) that lets developers evaluate the model and fine-tune it for their own tasks, the first time DeepMind has offered an SDK for one of its VLAs. Access is initially limited to a group of trusted testers while Google works to minimize safety risks.

Running the model on the robot itself removes the round trip to external servers, which can reduce latency and lets robots keep working when the network drops. If Google can manage the safety risks, the approach could change how robots learn and operate in real-world settings.

(Source: The Verge)