Nvidia’s Blackwell Chips Dominate AI Training Benchmarks

Summary

– Nvidia’s Blackwell chips lead the latest AI training benchmarks, delivering 2.2 times the performance of the previous-generation architecture on the Llama 3.1 405B pretraining benchmark.

– The Blackwell architecture supports high-performance AI workloads with innovations such as liquid-cooled racks, 13.4TB of coherent memory per rack, and fifth-generation NVLink interconnects.

– Nvidia’s platform was the only one to submit results on every MLPerf Training v5.0 benchmark, showcasing its versatility across AI workloads like LLMs and recommendation systems.

– Nvidia has evolved from a chip company into an AI infrastructure provider, building AI factories that power agentic AI applications across industries.

– MLPerf benchmarks provide standardized, peer-reviewed performance comparisons. In image generation, Blackwell delivered a 2.5 times improvement over Hopper.

Nvidia’s Blackwell architecture is setting new standards in AI training performance, according to recent benchmark results. The company’s latest chips posted leading results across multiple workloads, reinforcing Nvidia’s position as a leader in accelerated computing for artificial intelligence.

In the MLPerf Training v5.0 benchmarks, Nvidia’s Blackwell-powered systems posted the top results, including record-breaking results in large language model (LLM) training, recommendation systems, and other AI workloads. Nvidia’s was the only platform to submit results in every benchmark category, underscoring its versatility.

Two Nvidia AI supercomputers, Tyche and Nyx, powered the submissions. Built on Nvidia’s GB200 NVL72 rack-scale systems and DGX B200 configurations, these systems used 2,496 Blackwell GPUs and 1,248 Grace CPUs, in collaboration with CoreWeave and IBM. The headline result: Blackwell delivered 2.2x the performance of previous-generation hardware on the Llama 3.1 405B pretraining benchmark.

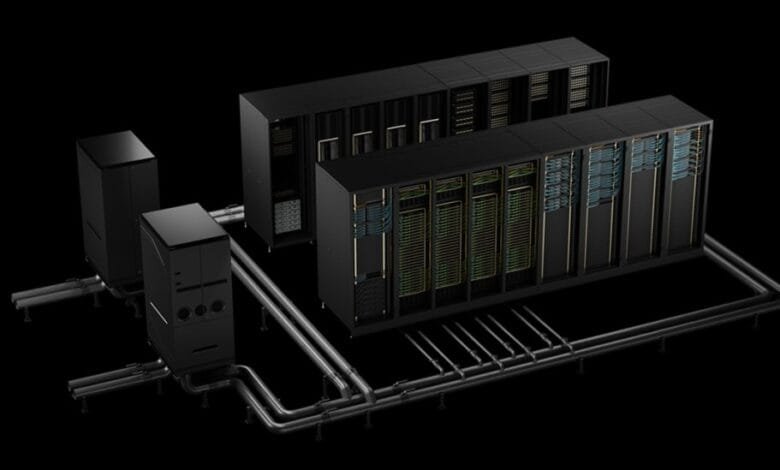

The advancements stem from several key innovations in the Blackwell architecture. High-density liquid cooling, 13.4TB of coherent memory per rack, fifth-generation NVLink, and Quantum-2 InfiniBand networking all contribute to its superior scalability. Additionally, software optimizations in the Nvidia NeMo Framework enhance multimodal LLM training, paving the way for next-generation agentic AI applications.

Nvidia’s ecosystem partners, including ASUS, Cisco, Lenovo, and Supermicro, also contributed benchmark submissions, reflecting broad industry adoption. The MLCommons Association, which has more than 125 members, maintains rigorous testing standards. Submitted models must reach strict accuracy thresholds before their results are published.

Beyond raw performance, Nvidia is evolving from a chip manufacturer to a full-stack AI infrastructure provider. The company now offers GPUs, CPUs, networking solutions, and software stacks like CUDA-X, TensorRT-LLM, and Dynamo, enabling organizations to accelerate AI deployment.

Dave Salvator, Nvidia’s director of accelerated computing products, emphasized the importance of scaling laws in AI development. Pretraining, which builds a model’s foundational knowledge, requires immense computational power, while post-training fine-tunes models on specific datasets. Agentic AI, capable of reasoning and problem-solving, represents the next frontier, and it is one where Blackwell’s architecture excels.

Blackwell also delivered a 2.5x improvement in image generation over the previous Hopper architecture. Salvator noted that the gains are not finished, because further software optimizations and emerging workloads will push performance even higher.

Nvidia’s shift from GPU specialist to AI infrastructure leader is evident in its expanding portfolio, which runs from DGX servers to full-scale AI factories. These facilities, designed to train and deploy advanced AI models, underscore the company’s vision for an AI-driven future.

As benchmarks continue to evolve, Nvidia remains at the forefront, delivering the speed, efficiency, and scalability needed to power the next wave of AI innovation.

(Source: VentureBeat)