OpenAI’s Spark Model Codes 15x Faster – Here’s the Catch

Summary

– OpenAI has released GPT-5.3-Codex-Spark, a smaller model designed specifically for real-time, conversational coding within its Codex platform.

– The new model offers dramatically reduced latency, with 80% less overhead per client-server roundtrip and a 50% faster time-to-first-token, enabling quicker, more interactive coding sessions.

– Codex-Spark runs on Cerebras’ WSE-3 AI chips, a partnership that provides the high-speed, latency-first infrastructure required for this new serving tier.

– A key trade-off is that while Codex-Spark generates code 15 times faster, it underperforms the full GPT-5.3-Codex model on capability benchmarks and does not meet OpenAI’s internal threshold for high capability in cybersecurity.

– The model is currently available only to Pro tier users as a preview and represents a step toward a future Codex with dual modes for both rapid iteration and long-horizon reasoning.

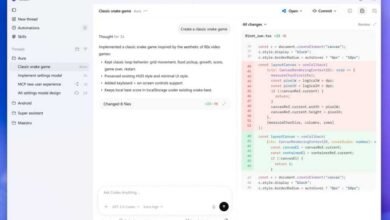

OpenAI has introduced a new model designed to transform how developers interact with AI coding assistants. GPT-5.3-Codex-Spark, a specialized version of the Codex family, promises to generate code up to fifteen times faster than its predecessors. This leap in speed is engineered for real-time, conversational coding, allowing for immediate feedback and rapid iteration. However, this impressive performance comes with notable trade-offs in capability and security, a critical detail for developers to consider.

The model marks a shift away from slower, batch-style agent interactions. Instead, it focuses on delivering a responsive experience for tasks like making quick edits, reshaping logic, or refining interfaces. The overhead for each client-server roundtrip has been cut by 80%, and the time-to-first-token is 50% faster. These improvements come from optimizations to session initialization and streaming, along with a persistent WebSocket connection that removes the need to renegotiate the connection on every request.
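To make those percentages concrete, here is a minimal Python sketch of the arithmetic. The baseline timings and the ten-roundtrip session are invented for illustration and are not OpenAI measurements; only the 80% and 50% reductions come from the reporting above, and the names `LatencyProfile`, `apply_reported_reductions`, and `session_wait_ms` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LatencyProfile:
    """Per-request latency components, in milliseconds (illustrative only)."""
    roundtrip_overhead_ms: float
    time_to_first_token_ms: float


def apply_reported_reductions(
    baseline: LatencyProfile,
    roundtrip_cut_pct: int = 80,  # reported cut in per-roundtrip overhead
    ttft_cut_pct: int = 50,       # reported cut in time-to-first-token
) -> LatencyProfile:
    """Scale a baseline profile by the percentage reductions quoted in the article."""
    return LatencyProfile(
        roundtrip_overhead_ms=baseline.roundtrip_overhead_ms * (100 - roundtrip_cut_pct) / 100,
        time_to_first_token_ms=baseline.time_to_first_token_ms * (100 - ttft_cut_pct) / 100,
    )


def session_wait_ms(profile: LatencyProfile, roundtrips: int) -> float:
    """Total time spent waiting for a response to start, across a session."""
    return roundtrips * (profile.roundtrip_overhead_ms + profile.time_to_first_token_ms)


# Hypothetical baseline: these numbers are assumptions, not measured values.
baseline = LatencyProfile(roundtrip_overhead_ms=100.0, time_to_first_token_ms=400.0)
spark = apply_reported_reductions(baseline)

if __name__ == "__main__":
    turns = 10  # a short interactive editing session (assumed)
    print(f"baseline wait: {session_wait_ms(baseline, turns):.0f} ms")
    print(f"reduced wait:  {session_wait_ms(spark, turns):.0f} ms")
```

Under these assumed inputs, the wait before output begins drops from 5,000 ms to 2,200 ms over ten turns. The sketch deliberately leaves out generation time itself, which is where the separate "15 times faster" figure applies.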

A key driver behind this speed is a new hardware partnership. Spark runs on Cerebras’ Wafer Scale Engine 3 (WSE-3) chips, an architecture that places all computing resources on a single, large processor. This design minimizes latency by keeping data transfers within that one chip, providing the raw power needed for low-latency inference. Spark is the first public milestone since OpenAI and Cerebras announced their partnership, which aims to unlock new interaction patterns for developers.

There are significant caveats to this speed boost. OpenAI is transparent that Spark is a complement to, not a replacement for, the more powerful base GPT-5.3-Codex model. On benchmarks like SWE-Bench Pro and Terminal-Bench 2.0, which evaluate complex software engineering tasks, Spark underperforms its sibling model. More critically, the company states Spark does not meet its internal threshold for high capability in cybersecurity, an area where the full Codex model excels. This raises important questions about trading intelligence and security for raw speed.

Initially, access is limited. The preview is available only to users on the $200 per month Pro tier, with separate rate limits in place. If demand surges, users may experience slower access or temporary queuing as OpenAI manages system reliability. The model is designed for lightweight, targeted work where immediate feedback is valuable, and by default it does not run tests unless specifically instructed.

The long-term vision is to blend different modes of operation. OpenAI is working toward a future where Codex can seamlessly switch between a fast, interactive collaborator for tight loops and a more deliberate, high-capability agent for long-running, autonomous tasks. The goal is to let developers work in a fluid, conversational style while delegating complex background work to sub-agents, offering both breadth and depth without forcing an upfront choice.

For now, the decision rests with developers. The choice is between a faster, more responsive model for rapid iteration and a smarter, more secure model for critical development work. While the prospect of an interruptible AI that keeps pace with human thought is appealing, the trade-offs in accuracy and cybersecurity are substantial. The evolution of these tools suggests a future where such compromises may fade, but currently, users must weigh speed against capability carefully.

(Source: ZDNET)