AI’s Dark Side: How It’s Fueling a Surge in Online Crime

Summary

– The PromptLock “AI ransomware” was revealed to be a controlled academic research project, not a real-world attack, despite initial media alarm.

– Real cybercriminals are actively using AI tools to lower the effort and expertise needed for attacks, making them more common and accessible.

– Experts argue the most immediate AI threat is the escalation of scams and fraud, such as deepfake impersonations, not fully automated “superhacker” malware.

– Generative AI is heavily used for spam, with at least half of all spam email now estimated to be written by large language models (LLMs).

– AI is enabling more sophisticated, targeted email attacks, with the use of LLMs in such schemes nearly doubling within a year.

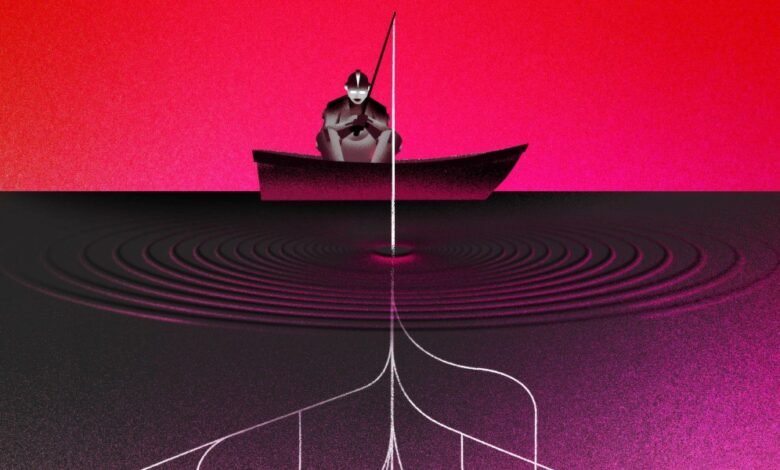

The rapid advancement of artificial intelligence is creating a powerful new toolkit for cybercriminals, fueling a surge in online crime through more sophisticated and scalable attacks. While the specter of fully autonomous AI hackers captures headlines, the more pressing danger lies in how these tools are already being used to amplify traditional scams and lower the technical barrier to entry. In practice, AI is already making cyberattacks more common and more effective, turning what was once a theoretical risk into an operational threat.

Researchers Cherepanov and Strýček initially believed their discovery, which they named PromptLock, marked a pivotal moment. They announced it as the first example of AI-powered ransomware, a claim that set off a global media frenzy. On closer inspection, however, the threat proved less dramatic. A team from New York University soon clarified that the malware was not an active attack but an academic research project. Its purpose was to demonstrate that every stage of a ransomware campaign could be automated, a goal the NYU researchers said they had achieved.

Although PromptLock was an experiment, genuine threat actors are actively leveraging the latest AI capabilities. Just as legitimate developers use AI to write and debug code, malicious hackers employ these same tools to streamline their operations. This automation reduces the time and effort needed to orchestrate an attack, effectively lowering the barriers for less skilled individuals to launch cyber offensives. According to Lorenzo Cavallaro, a professor of computer science at University College London, the prospect of cyberattacks becoming more frequent and potent is not a distant possibility but “a sheer reality.”

Some voices in the technology sector warn that AI is nearing the ability to execute fully automated cyber campaigns. Many security professionals, however, consider this notion exaggerated. Marcus Hutchins, a principal threat researcher at the security firm Expel who is known for stopping the WannaCry ransomware attack, argues that the focus on AI superhackers is misplaced. Experts instead urge attention to the immediate, tangible risks: AI is already accelerating the pace and scale of fraud, and criminals are increasingly using deepfake technology to impersonate trusted individuals and swindle victims out of significant sums. These AI-enhanced schemes are expected to become more frequent and more damaging, which calls for greater preparedness.

The adoption of generative AI by attackers began almost as soon as ChatGPT gained widespread popularity in late 2022. Initially, these efforts focused on a familiar nuisance: generating massive volumes of spam. A recent Microsoft report states that in the year to April 2025, the company blocked approximately $4 billion in scams and fraudulent transactions, many of them "likely aided by AI content."

Research from academics at Columbia University, the University of Chicago, and security company Barracuda Networks estimates that large language models now generate at least half of all spam email. Their analysis of nearly 500,000 malicious messages also revealed AI’s role in more advanced campaigns. They studied targeted business email compromise attacks, where a criminal impersonates a trusted contact to deceive an employee into transferring money or sharing sensitive data. The findings showed a sharp increase in AI’s use for these focused attacks, rising from 7.6% in April 2024 to at least 14% by April 2025. This trend underscores a shift from bulk spam to more personalized and convincing social engineering tactics, making AI a formidable weapon in the cybercriminal arsenal.

(Source: Technology Review)