Secrets Behind Anthropic’s Control Over Claude 4 AI Revealed

Summary

– Simon Willison analyzed Anthropic’s system prompts for the Claude 4 models, Opus 4 and Sonnet 4, revealing how Anthropic controls the models’ behavior.

– System prompts are hidden instructions that guide AI models like Claude and ChatGPT on how to respond, including identity and behavioral rules.

– These prompts are prepended to each conversation, allowing the model to maintain context while following its programmed guidelines.

– Anthropic’s published system prompts are incomplete; the full prompts were pieced together from leaked copies and from techniques like prompt injection.

– Claude 4’s prompts include instructions to provide emotional support while avoiding encouragement of self-destructive behaviors.

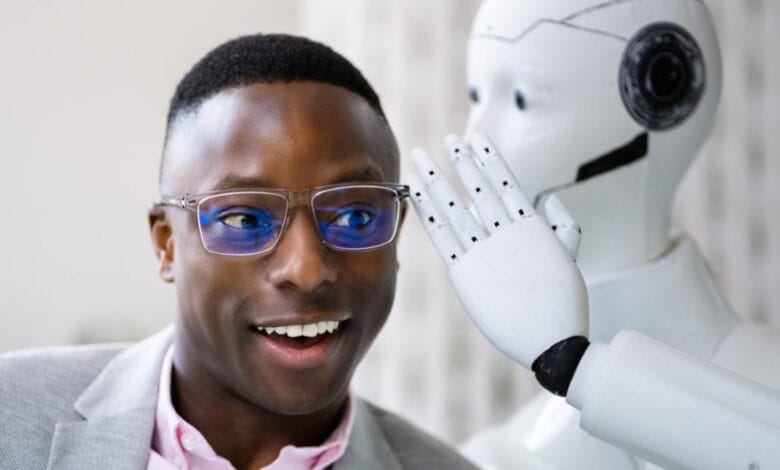

Looking at how AI companies guide their models’ behavior shows how much of a chatbot’s character is set by written instructions rather than by training alone. A recent analysis of Claude 4’s system prompts by independent researcher Simon Willison shows how Anthropic uses them to shape its models’ responses. These hidden instructions act as an operational blueprint, covering everything from conversational tone to ethical boundaries.

System prompts are instructions that a large language model receives before it generates any response. Users see only the chatbot’s replies, but these behind-the-scenes directives establish the AI’s identity, behavioral guidelines, and functional rules. On every turn, the model is given the system prompt together with the conversation history, which lets it keep track of context while still following its guidelines.
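The prepending described above can be illustrated with a minimal sketch. This is not Anthropic’s implementation or any vendor’s API: the `Conversation` class, its method names, and the role/content message layout are hypothetical, chosen only to show a system prompt being placed ahead of the conversation history on every turn. Real services differ in the details; some, for example, pass the system prompt as a separate field rather than as the first message.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    """Hypothetical container for one chat session (illustrative only)."""

    system_prompt: str
    history: list[dict[str, str]] = field(default_factory=list)

    def build_request(self, user_message: str) -> list[dict[str, str]]:
        """Return everything the model would see for this turn.

        The system prompt always comes first, followed by all earlier
        turns, followed by the new user message.
        """
        return (
            [{"role": "system", "content": self.system_prompt}]
            + self.history
            + [{"role": "user", "content": user_message}]
        )

    def record_turn(self, user_message: str, assistant_reply: str) -> None:
        """Store a completed exchange so later turns include it."""
        self.history.append({"role": "user", "content": user_message})
        self.history.append({"role": "assistant", "content": assistant_reply})


# Example: the same system prompt leads the input on both turns.
convo = Conversation(system_prompt="You are a helpful assistant.")
first_turn = convo.build_request("Hello")
convo.record_turn("Hello", "Hi! How can I help?")
second_turn = convo.build_request("Can you summarize our chat so far?")
```

In this sketch the user never sees the first entry of `first_turn` or `second_turn`, yet it is part of the input on every turn, which is why the instructions keep applying throughout a conversation.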

The analysis also shows that the system prompts Anthropic publishes are only partial disclosures. By comparing the official documentation with leaked copies of the prompts, the analysis pieces together a fuller picture of Claude 4’s instructions. Techniques such as prompt injection were needed to uncover the parts that are not published, including the instructions governing web search and code generation.

The analysis highlights how the prompts handle emotional support. Although these models are not conscious in the human sense, they can simulate compassionate interactions because their training data includes countless examples of human emotional exchanges. Anthropic’s system prompts explicitly instruct Claude to show concern for user wellbeing while setting clear boundaries around sensitive topics.

Identical directives appear in the prompts for both Claude Opus 4 and Claude Sonnet 4, stressing that the model should avoid harmful suggestions. The models are specifically told not to encourage self-destructive behaviors related to addiction or unhealthy lifestyle choices. This level of detail shows how AI developers try to balance responsiveness with responsibility.

(Source: Ars Technica)