Nvidia & Microsoft Boost AI Performance on PCs

Summary

– Nvidia and Microsoft are collaborating to enhance AI processing performance on Nvidia RTX-based AI PCs, leveraging TensorRT for optimized on-device engine building and faster deployment.

– TensorRT for RTX AI PCs reduces library size by 8x and integrates with Windows ML, offering developers broad hardware compatibility and state-of-the-art performance.

– Nvidia NIM provides pre-packaged, optimized AI models for easy integration into popular apps, enabling faster performance and simplified AI development workflows.

– Windows ML’s new inference framework automatically selects the best hardware for AI tasks; on RTX GPUs, its TensorRT for RTX path runs over 50% faster than DirectML.

– Project G-Assist introduces plug-ins for AI-driven PC control, with new community-developed options like Google Gemini web search, Spotify, and Twitch integration.

Nvidia and Microsoft have joined forces to supercharge AI performance on Windows PCs equipped with Nvidia RTX GPUs, marking a significant leap forward for generative AI applications. This collaboration brings cutting-edge capabilities to creative tools, digital assistants, and intelligent agents, transforming how users interact with their devices.

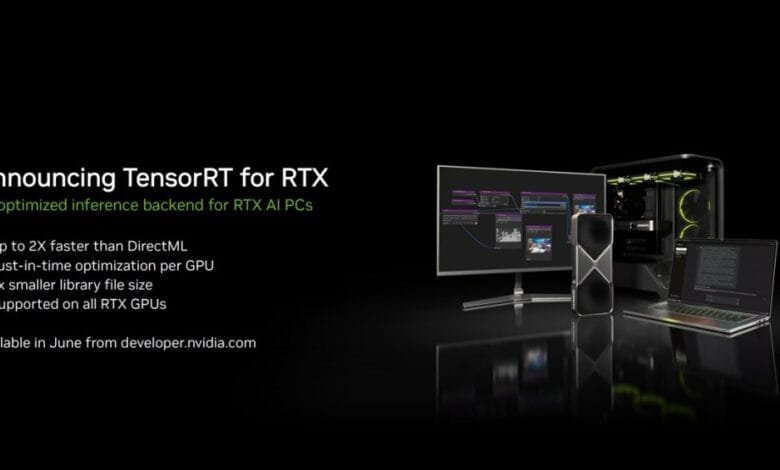

At the heart of this advancement is TensorRT for RTX AI PCs, a reengineered version of TensorRT that delivers industry-leading performance while drastically reducing deployment complexity. By building engines just in time, TensorRT shrinks package size eightfold, enabling rapid AI integration across more than 100 million RTX-powered systems. Windows ML, Microsoft’s new inference stack, now natively supports TensorRT, giving developers both hardware flexibility and peak performance.

Gerardo Delgado, Nvidia’s AI PC product director, explained that RTX hardware and CUDA programming form the foundation for these AI enhancements. TensorRT optimizes AI models by refining their mathematical operations and selecting the most efficient execution paths for each GPU architecture. Compared to conventional Windows AI processing, this approach delivers an average 1.6x performance boost.

The latest iteration of TensorRT for RTX eliminates the need for pre-generated engines; instead, it optimizes each model for the user's specific GPU in seconds during installation. This streamlined workflow simplifies development while improving video generation, livestream quality, and library efficiency.

Leading software providers, including Autodesk, Bilibili, and Topaz, are rolling out updates to harness RTX AI acceleration. Meanwhile, Nvidia’s NIM microservices offer pre-optimized AI models for seamless integration into popular platforms like VS Code and ComfyUI. The newly released FLUX.1-schnell image generation model and updated FLUX.1-dev NIM further expand compatibility across RTX 40 and 50 Series GPUs.

For developers, Windows ML simplifies AI deployment by automatically selecting the optimal hardware and downloading the necessary execution providers. TensorRT for RTX outperforms DirectML by over 50% in AI workloads, with further gains from quantized models, particularly on Blackwell GPUs, where FP4 optimizations double processing speed.
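To make "automatically selecting the optimal hardware" a little more concrete, here is a minimal Python sketch of preference-ordered execution-provider selection, in the style of ONNX Runtime, on which Windows ML is built. It is an illustration under stated assumptions, not Windows ML's actual policy: the preference order is hypothetical, the helper `choose_providers` is invented for this example, and the provider names are real ONNX Runtime identifiers used only as stand-ins (the exact name of the TensorRT for RTX provider is not assumed here).

```python
# Hypothetical preference order: most specialized accelerator first, CPU last.
# These are real ONNX Runtime provider names used as stand-ins; Windows ML's
# actual selection logic is not described in the article.
PREFERRED = [
    "TensorrtExecutionProvider",
    "CUDAExecutionProvider",
    "DmlExecutionProvider",
    "CPUExecutionProvider",
]


def choose_providers(available, preferred=PREFERRED):
    """Return the available providers ranked by preference, always ending with a CPU fallback."""
    ranked = [p for p in preferred if p in available]
    if "CPUExecutionProvider" not in ranked:
        ranked.append("CPUExecutionProvider")  # keep a CPU fallback so inference can always run
    return ranked


if __name__ == "__main__":
    # On a real machine, `available` could come from onnxruntime.get_available_providers().
    detected = ["DmlExecutionProvider", "CPUExecutionProvider"]
    print(choose_providers(detected))  # prints ['DmlExecutionProvider', 'CPUExecutionProvider']
```

In ONNX Runtime, a ranked list like this can be passed as the `providers` argument of `onnxruntime.InferenceSession`, which tries the providers in the order given. The sketch itself has no dependencies, so it runs without a GPU.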

Beyond performance, Nvidia’s ecosystem supports diverse AI applications through SDKs like DLSS for 3D graphics, RTX Video for multimedia, and ACE for generative AI. Innovations such as Project G-Assist enable voice-controlled system management, while new plug-ins for Spotify, Twitch, and IFTTT showcase AI’s potential in automation and content creation.

Enthusiasts and developers can explore these tools via Nvidia’s AI Blueprints and GitHub repositories, with community-driven projects pushing the boundaries of on-device AI. As companies like SignalRGB adopt AI-driven interfaces, PC interaction continues to evolve, fueled by Nvidia and Microsoft’s commitment to high-performance, accessible AI.

For hands-on learning, the RTX AI Garage blog offers weekly insights into AI workflows, digital humans, and productivity enhancements, ensuring users stay at the forefront of this transformative technology.

(Source: VentureBeat)