Google’s AI Gets Personal, Bots Blocked, Domains Matter

Summary

– Google is launching a “Personal Intelligence” feature in AI Mode for U.S. subscribers, connecting Gmail and Google Photos so that search responses can draw on a user’s own data.

– This personalization could lead to shorter, more ambiguous user queries, making it harder for SEO practitioners to target searches with clear intent signals.

– Hostinger data shows AI training bots like GPTBot are being widely blocked by websites, while search and assistant bots like OAI-SearchBot are gaining broader access.

– Google’s John Mueller warns that using free subdomain hosting services creates SEO challenges due to spam-heavy environments, making it harder for legitimate sites to gain search visibility.

– The common thread is access (to user data, to websites via bots, and to fair evaluation in search), which shapes SEO outcomes more than incremental optimization does.

The digital landscape is shifting as Google integrates personal data into its AI search results, website owners increasingly block AI training bots, and domain selection proves critical for search visibility. Together, these developments suggest that foundational decisions about access and platform choice can affect performance more than incremental optimization.

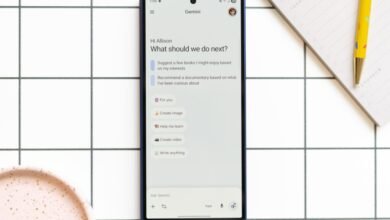

Google has begun connecting user data from Gmail and Google Photos to AI Mode, a feature it calls Personal Intelligence. The opt-in experiment, available to U.S. subscribers, lets the AI generate responses based on a user’s own emails and images. For instance, it could suggest a coat brand you’ve purchased before while checking the weather for an upcoming trip it found in your inbox. Google states that it does not train its models on this personal data, but the launch raises questions about how search behavior will change. If users rely on Google to infer context from their own data, their queries may become shorter and less specific, making it harder for content to match intent that is no longer spelled out in the query itself.

Public reaction has centered on the balance between utility and privacy. Google’s Robby Stein describes it as a step toward a more personal search experience. However, commentators like content specialist Michele Curtis stress that “personalization only works when trust is architected before intelligence.” Others, including Fluxxy AI founder Syed Shabih Haider, express concern over the security implications of linking multiple applications, noting the heightened risk of a data breach.

Meanwhile, a new analysis of bot traffic reveals a clear split in how websites treat different types of AI crawlers: training bots are being widely blocked, while search and assistant bots are gaining broader access. Hostinger’s study of more than 5 million sites shows that access for OpenAI’s GPTBot, which collects data for model training, plummeted from 84% to just 12%. In contrast, OAI-SearchBot, which retrieves live web data for ChatGPT answers, maintained steady coverage. The trend aligns with earlier findings from BuzzStream and Cloudflare, suggesting a deliberate shift by site owners.

The distinction is crucial for SEO professionals. Blocking a training bot like GPTBot signals that a site’s content should be left out of future AI model training. Blocking a search bot, however, means the content can’t be retrieved and cited as a source in AI-generated answers. The prevailing expert advice, summarized by Aleyda Solís, is to “disallow the ‘GPTbot’ user-agent but allow ‘OAI-SearchBot’” to maintain visibility in AI search tools. The operational cost of uncontrolled bot traffic is also a major factor, with some developers reporting that AI crawlers once accounted for 95% of their server requests.
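As a minimal sketch of that advice, the Python snippet below expresses it as a robots.txt file and checks the result with the standard library’s `urllib.robotparser`. The robots.txt content, the `check_access` helper, and the example URL are illustrative assumptions, not Solís’s exact recommendation. Python’s parser applies simple first-match, prefix-based rules, so treat it as a quick sanity check rather than a reproduction of how each crawler evaluates the file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt following the advice above: opt out of training
# crawls (GPTBot) while staying available to ChatGPT search (OAI-SearchBot).
# Every other crawler falls through to the "*" group, which allows everything.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: OAI-SearchBot
Allow: /

User-agent: *
Allow: /
"""


def check_access(robots_txt: str, agents: list[str], url: str) -> dict[str, bool]:
    """Return whether each user agent may fetch `url` under `robots_txt`."""
    parser = RobotFileParser()
    # parse() accepts an iterable of lines and marks the rules as loaded,
    # so can_fetch() works without a network call to read().
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


if __name__ == "__main__":
    result = check_access(
        ROBOTS_TXT,
        ["GPTBot", "OAI-SearchBot", "Googlebot"],
        "https://example.com/blog/post",  # placeholder URL
    )
    for agent, allowed in result.items():
        print(f"{agent}: {'allowed' if allowed else 'blocked'}")
```

Running a check like this against your live robots.txt before deploying it is a cheap way to catch a rule that accidentally blocks the search bot along with the training bot.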

Adding to the theme of foundational choices, Google’s John Mueller recently highlighted the SEO challenges inherent in using free subdomain hosting services. He explained that these platforms often attract spam and low-quality content, creating a “problematic” neighborhood that makes it harder for search engines to identify and reward legitimate sites. Even with perfect on-page optimization, a site on such a domain may struggle to appear in standard search results.

Mueller’s guidance reinforces a long-standing principle: your digital address matters. He advised focusing on building direct traffic and community engagement first, rather than expecting search visibility to lead the way. This sentiment was echoed on LinkedIn, where digital marketer Fernando Paez V noted that “free subdomain hosting services attract spam and make it more difficult for legitimate sites to gain visibility.” The takeaway is clear: investing in a proper domain is a fundamental part of SEO, not an optional extra.

This week’s updates collectively underscore that access is becoming a primary competitive advantage. Visibility is increasingly dictated by the permissions you grant to crawlers, the platform you choose to build on, and the personal data users allow platforms to use. For practitioners, this means auditing bot access in server logs and robots.txt files, carefully evaluating domain and hosting choices for new projects, and closely monitoring how personalized AI search might alter the query landscape they aim to serve.
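For the server-log half of that audit, a rough Python sketch might count requests by crawler and estimate what share comes from known AI bots. It assumes logs in the common “combined” format, where the user agent is the last double-quoted field. The `BOT_TOKENS` mapping, the `audit` helper, and the sample log lines are hypothetical and simplified. User-agent strings can also be spoofed, so a fuller audit could cross-check requests against the IP ranges bot operators publish.

```python
import re
from collections import Counter

# Assumption: "combined" log format, user agent is the final quoted field.
UA_PATTERN = re.compile(r'"([^"]*)"\s*$')

# Hypothetical mapping of user-agent substrings to labels; extend it with
# whichever crawlers actually show up in your own logs.
BOT_TOKENS = {
    "GPTBot": "training",
    "OAI-SearchBot": "search",
}

# Made-up sample lines with simplified user-agent strings, for illustration only.
SAMPLE_LOG = [
    '203.0.113.5 - - [01/Jan/2026:12:00:00 +0000] "GET / HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; GPTBot/1.1)"',
    '203.0.113.6 - - [01/Jan/2026:12:00:01 +0000] "GET /blog HTTP/1.1" 200 734 "-" "Mozilla/5.0 (compatible; GPTBot/1.1)"',
    '203.0.113.7 - - [01/Jan/2026:12:00:02 +0000] "GET /blog HTTP/1.1" 200 734 "-" "Mozilla/5.0 (compatible; OAI-SearchBot/1.0)"',
    '198.51.100.9 - - [01/Jan/2026:12:00:03 +0000] "GET / HTTP/1.1" 200 512 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]


def classify(user_agent: str) -> str:
    """Label a user agent by the first known bot token it contains."""
    ua = user_agent.lower()
    for token, label in BOT_TOKENS.items():
        if token.lower() in ua:
            return f"{label}:{token}"
    return "other"


def audit(lines) -> tuple[Counter, float]:
    """Count requests per label and return the share made by known AI bots."""
    counts: Counter = Counter()
    for line in lines:
        match = UA_PATTERN.search(line)
        if match is None:
            continue  # skip lines that don't end in a quoted user agent
        counts[classify(match.group(1))] += 1
    total = sum(counts.values())
    ai_share = (total - counts["other"]) / total if total else 0.0
    return counts, ai_share


if __name__ == "__main__":
    counts, share = audit(SAMPLE_LOG)
    for label, n in counts.most_common():
        print(f"{label}: {n}")
    print(f"Known AI crawler share: {share:.0%}")
```

Separating “training” from “search” labels mirrors the distinction Hostinger’s data draws, which makes it easier to see whether a robots.txt change is actually reflected in traffic.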

(Source: Search Engine Journal)