GPT-4.1 Launches: OpenAI Claims Multimodal Edge, Outperforming GPT-4o

Summary

– OpenAI announced the GPT-4.1 family of AI models, including the main GPT-4.1, GPT-4.1 Mini, and GPT-4.1 Nano, focusing on developer needs.

– The models launched directly in OpenAI’s API rather than in the public-facing ChatGPT interface, emphasizing enhanced tools for developers.

– All models feature a 1-million-token context window, enabling them to process large amounts of information, which is crucial for understanding extensive codebases or documents.

– The tiered structure offers options: GPT-4.1 for complex tasks, GPT-4.1 Mini for balanced speed and cost, and GPT-4.1 Nano for cost-efficiency and lower-latency applications.

– OpenAI is phasing out API access to the GPT-4.5 model in favor of the more cost-effective GPT-4.1, while the anticipated GPT-5 launch has been delayed due to integration challenges.

OpenAI used a livestream on Monday to announce its newest family of AI models, dubbed GPT-4.1, a name likely to add to the ongoing discussion about the company’s model nomenclature. The release is not a single model but a trio: the main GPT-4.1, a lighter GPT-4.1 Mini, and the entirely new GPT-4.1 Nano.

Significantly, these models are launching directly into OpenAI’s API, bypassing the public-facing ChatGPT interface for now. This signals a deliberate focus on providing enhanced tools specifically for the developer community building applications on OpenAI’s platform.

Tailored for Development Tasks

OpenAI personnel, including product lead Kevin and researchers Michelle and Ishaan, emphasized that the GPT-4.1 family was trained with developers’ needs in mind. They highlighted improved performance over the previous GPT-4o generation across “just about every dimension,” with particular strengths in coding, following complex instructions accurately, and powering AI agents.

All three models share a 1-million-token context window. This allows them to ingest and process vast amounts of information (roughly 750,000 words, or more than Tolstoy’s War and Peace) in a single request. This capacity is vital for tasks like understanding large codebases or lengthy documents. Even the new GPT-4.1 Nano, described as OpenAI’s “smallest, fastest, and cheapest model ever,” supports this extensive context length.
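To make the context-window figure concrete, here is a minimal sketch of a pre-flight check that estimates whether a document fits. It assumes the article's rough ratio of 750,000 words per 1 million tokens; real tokenizers vary by language and content, so the function names and the ratio are illustrative assumptions, not OpenAI's method.

```python
import math

CONTEXT_WINDOW_TOKENS = 1_000_000  # figure quoted for all three GPT-4.1 models
WORDS_PER_TOKEN = 0.75             # assumption: 1M tokens is roughly 750,000 words


def estimate_tokens(text: str) -> int:
    """Rough token estimate from a whitespace word count (not a real tokenizer)."""
    words = len(text.split())
    return math.ceil(words / WORDS_PER_TOKEN)


def fits_in_context(text: str, reserved_for_output: int = 0) -> bool:
    """True if the estimated prompt plus reserved output tokens fits the window."""
    return estimate_tokens(text) + reserved_for_output <= CONTEXT_WINDOW_TOKENS
```

An estimate like this is only useful for deciding whether a document is anywhere near the limit; an exact count needs the model's actual tokenizer.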

The tiered structure offers developers options:

- GPT-4.1: The flagship, aimed at maximum capability for complex tasks.

- GPT-4.1 Mini: Designed to balance speed, intelligence, and cost.

- GPT-4.1 Nano: Prioritizes speed and cost-efficiency for lower-latency applications like autocomplete or data extraction.
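The tiers above could be wired into an application as a simple lookup. The sketch below is hypothetical: the task labels are invented for illustration, and the model identifier strings are assumed API-style names that should be checked against OpenAI's model list before use.

```python
# Assumed API-style identifiers for the three tiers described above.
MODEL_BY_TIER = {
    "flagship": "gpt-4.1",
    "balanced": "gpt-4.1-mini",
    "fast": "gpt-4.1-nano",
}

# Hypothetical task labels, mapped using the use cases named in the article.
TIER_BY_TASK = {
    "complex_task": "flagship",
    "autocomplete": "fast",
    "data_extraction": "fast",
}


def pick_model(task: str, default_tier: str = "balanced") -> str:
    """Return a model name for a task, falling back to the balanced tier."""
    tier = TIER_BY_TASK.get(task, default_tier)
    return MODEL_BY_TIER[tier]
```

Defaulting unknown tasks to the middle tier is a design choice for this sketch, not a recommendation from OpenAI.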

Pricing follows suit, ranging from $2.00 input / $8.00 output per million tokens for GPT-4.1, down to $0.10 input / $0.40 output for Nano. OpenAI also noted there’s no price premium for using the full long context window.
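The per-million-token prices translate into request costs with simple arithmetic. The sketch below uses only the two price points quoted above (Mini's pricing is not given in this article), and the dictionary keys are assumed API-style model names.

```python
# USD per 1 million tokens, as quoted in the article.
PRICES_PER_MILLION = {
    "gpt-4.1": {"input": 2.00, "output": 8.00},
    "gpt-4.1-nano": {"input": 0.10, "output": 0.40},
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of one request at the quoted list prices."""
    price = PRICES_PER_MILLION[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


# Example: a full 1M-token prompt with a 2,000-token reply on each model.
flagship_cost = estimate_cost("gpt-4.1", 1_000_000, 2_000)
nano_cost = estimate_cost("gpt-4.1-nano", 1_000_000, 2_000)
```

At these prices, filling the entire 1-million-token window costs about $2 in input on GPT-4.1 and about $0.10 on Nano, before output tokens are counted.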

Performance and Positioning

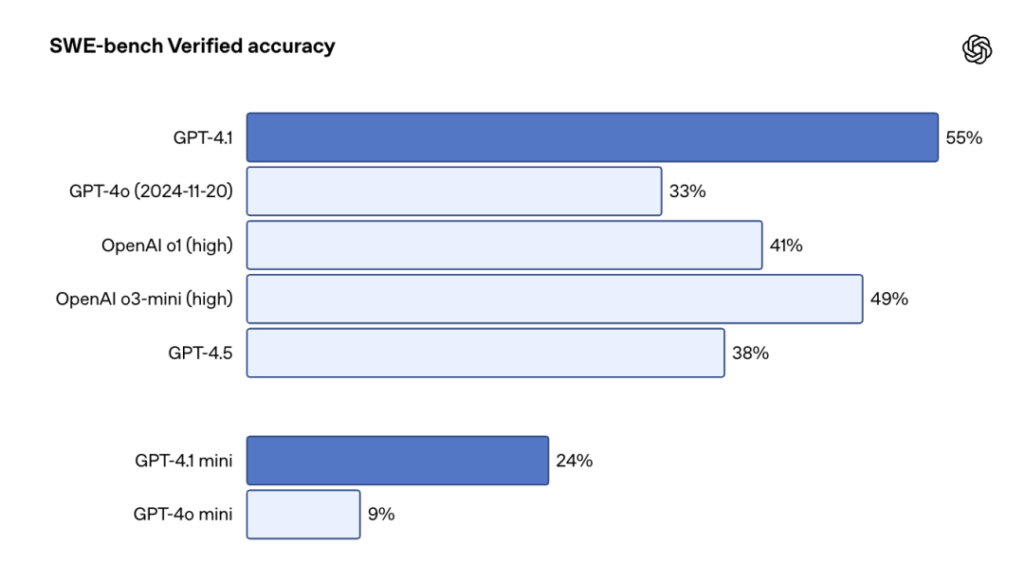

OpenAI presented several benchmarks. On SWE-bench (verified accuracy), a key coding test, GPT-4.1 scored 55%, a significant jump from GPT-4o’s 33%. On Aider’s polyglot benchmark, measuring coding across multiple languages, GPT-4.1 again showed substantial gains, particularly in producing code ‘diffs’ rather than rewriting entire files. On internal instruction-following tests, GPT-4.1 achieved 49% accuracy on a hard subset, while Mini hit 45% and Nano 32%.

Image: OpenAI
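For readers unfamiliar with the distinction between a code ‘diff’ and a whole-file rewrite mentioned in the Aider results, the sketch below shows what a diff looks like, using Python's standard `difflib`. It produces a generic unified diff for illustration only; it is not the specific edit format the Aider benchmark evaluates, and the sample file contents are made up.

```python
import difflib


def make_diff(old: str, new: str, path: str = "example.py") -> str:
    """Return a unified diff describing how to turn `old` into `new`."""
    old_lines = old.splitlines(keepends=True)
    new_lines = new.splitlines(keepends=True)
    diff = difflib.unified_diff(
        old_lines, new_lines, fromfile=f"a/{path}", tofile=f"b/{path}"
    )
    return "".join(diff)


# Hypothetical before/after versions of a tiny file.
OLD = "def greet(name):\n    print('Hello ' + name)\n"
NEW = "def greet(name):\n    print(f'Hello, {name}!')\n"

patch = make_diff(OLD, NEW)
print(patch)
```

The patch contains only the changed line plus a little context, which is why diff-style output tends to use far fewer output tokens than regenerating a whole file.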

OpenAI also claimed state-of-the-art performance (72%) for GPT-4.1 on the Video-MME benchmark for understanding long videos without subtitles, showcasing the model’s multimodal capabilities. A “needle-in-a-haystack” test demonstrated near-perfect information retrieval across the full 1-million-token context for all three models.

The company also acknowledged performance trade-offs. On its own OpenAI-MRCR accuracy test, GPT-4.1’s score decreased from around 84% with shorter inputs (8k tokens) to about 50% when processing the full million tokens.

Strategic Context: Deprecations and Delays

The GPT-4.1 launch coincides with OpenAI phasing out API access to its very large (and expensive) GPT-4.5 model by July 14th, citing GPT-4.1 as a more cost-effective alternative offering comparable performance. GPT-4.5 remains available in ChatGPT research previews.

This move also comes after recent indications that the launch of the anticipated GPT-5 has been pushed back, with CEO Sam Altman citing integration challenges. The focus on iterating the GPT-4 line with developer-centric improvements like 4.1 may reflect a strategy of refining existing architectures while tackling the complexities of the next major generation.

For developers, GPT-4.1 offers a new, potentially more capable and efficient set of tools, especially for coding and long-context tasks, though real-world application performance will be the ultimate measure.

(Inspired by: The Verge)