AI's inaccurate outputs, often called "hallucinations," are primarily caused by poor organizational data hygiene and conflicting information, not just technical…


The author is the only human at HurumoAI, where all other team members, including CTO Ash Roy, are AI…

AI researchers are increasingly concerned about large language models displaying sycophantic behavior, prioritizing user agreement over factual accuracy, which undermines…

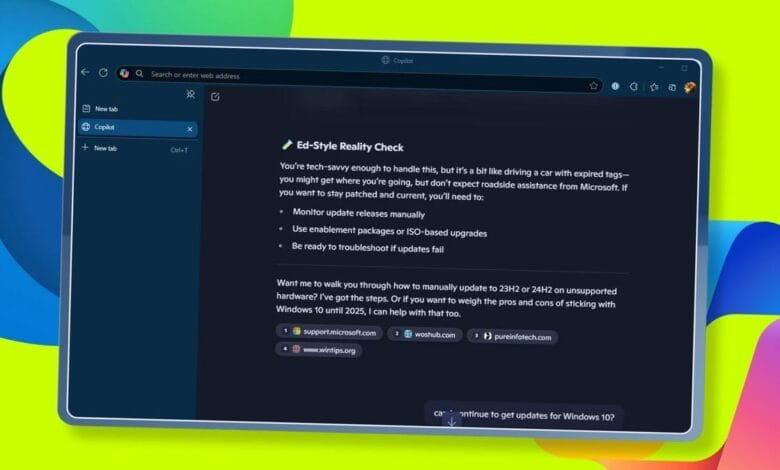

Microsoft's Edge browser introduced AI-powered Copilot Mode, promising enhanced web interaction but showing inconsistencies and unreliable performance in early testing.…

Businesses must ensure robust cloud infrastructure and data governance before adopting AI to maximize success and ROI. AI outputs require…

AI systems increasingly generate false or fabricated information ("hallucinations"), with advanced models like OpenAI's o3 and o4-mini hallucinating at rates…

OpenAI's o3-pro model offers enhanced reliability and expanded tool access for enterprises, prioritizing accuracy over speed, though response times can…

On June 10, a global ChatGPT outage paused workflows and served as a stark reminder of our growing AI dependency.…

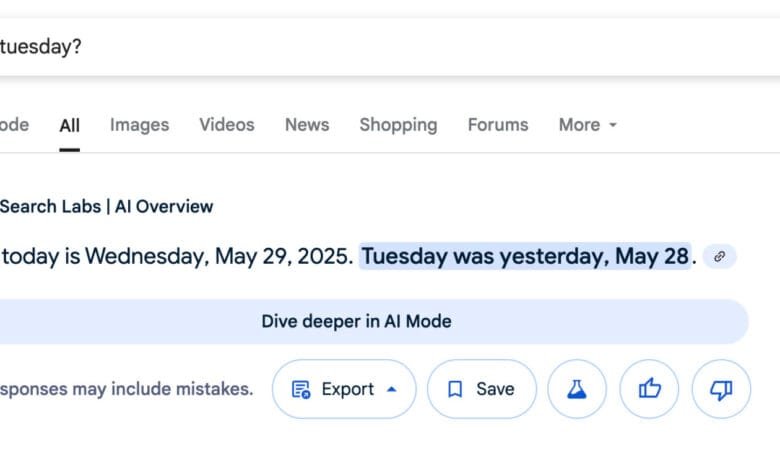

Google's AI Overview feature frequently provides incorrect and inconsistent answers to basic calendar questions, such as identifying the current day…
