From March 18-21, 2024, the tech world turned its eyes to the GPU Technology Conference (GTC) hosted by Nvidia. This premier event, held at the San Jose Convention Center, was a gathering of the industry’s most innovative minds, all focused on the future of artificial intelligence. The conference was a showcase of over 900 sessions, 300+ exhibits, and numerous technical workshops, but the centerpiece was the keynote by Nvidia’s CEO, Jensen Huang.

Jensen Huang’s Keynote: Shaping the AI Landscape

On March 18th, Jensen Huang delivered a keynote that was not just a presentation but a vision for the transformative power of AI. Here are the essential points from his address:

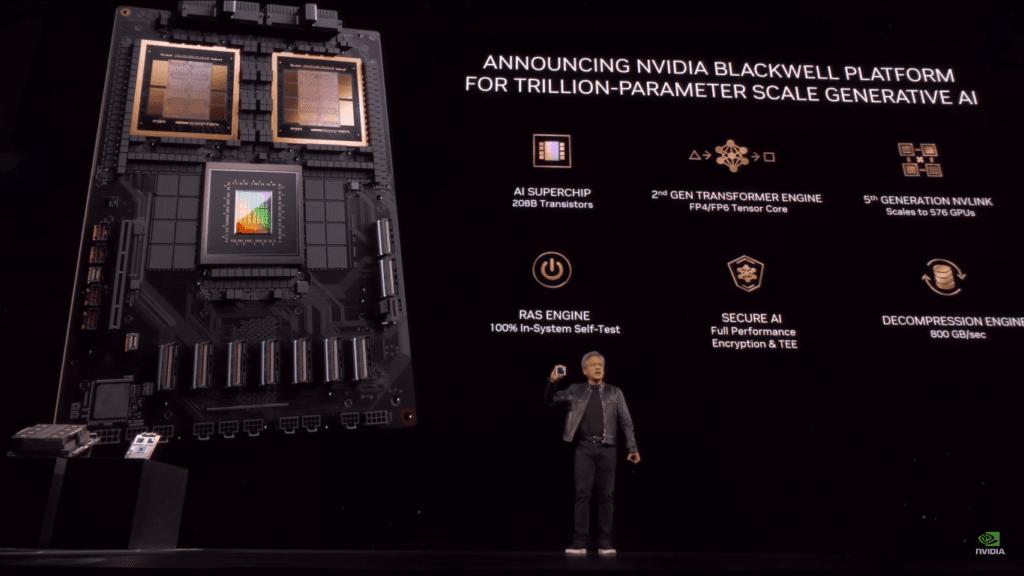

The Blackwell Architecture

Huang unveiled the Blackwell Architecture, a new computing platform engineered for the generative AI era, set to revolutionize AI applications across industries. It features:

- A new class of AI superchip with 208 billion transistors, using a custom-built TSMC 4NP process.

- Second-Generation Transformer Engine to accelerate inference and training for large language models (LLMs) and Mixture-of-Experts (MoE) models.

- Secure AI capabilities with Nvidia Confidential Computing to protect sensitive data and AI models.

- NVLink and NVLink Switch technology to enable exascale computing and trillion-parameter AI models.
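To make the Mixture-of-Experts (MoE) idea mentioned above more concrete, here is a minimal sketch of top-k expert routing in plain Python. It is a toy illustration of the general technique, not Nvidia's implementation: the experts, the gate scores, and the function names are all made up for this example.

```python
import math

def softmax(scores):
    """Turn raw gate scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(gate_scores, k=2):
    """Pick the k highest-scoring experts and renormalize their weights."""
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]

def moe_forward(x, experts, gate_scores, k=2):
    """Run only the selected experts and blend their outputs."""
    return sum(w * experts[i](x) for i, w in route_top_k(gate_scores, k))

# Hypothetical experts: in a real model these would be neural network layers.
experts = [lambda x: x + 1.0, lambda x: 2.0 * x, lambda x: x * x, lambda x: -x]
gate_scores = [0.1, 2.0, 1.5, -1.0]  # assumed output of a gating network

routing = route_top_k(gate_scores, k=2)
output = moe_forward(3.0, experts, gate_scores, k=2)
print(routing, output)
```

Only two of the four experts run for this input, which is the property that lets MoE models grow their parameter count without a matching growth in compute per token.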

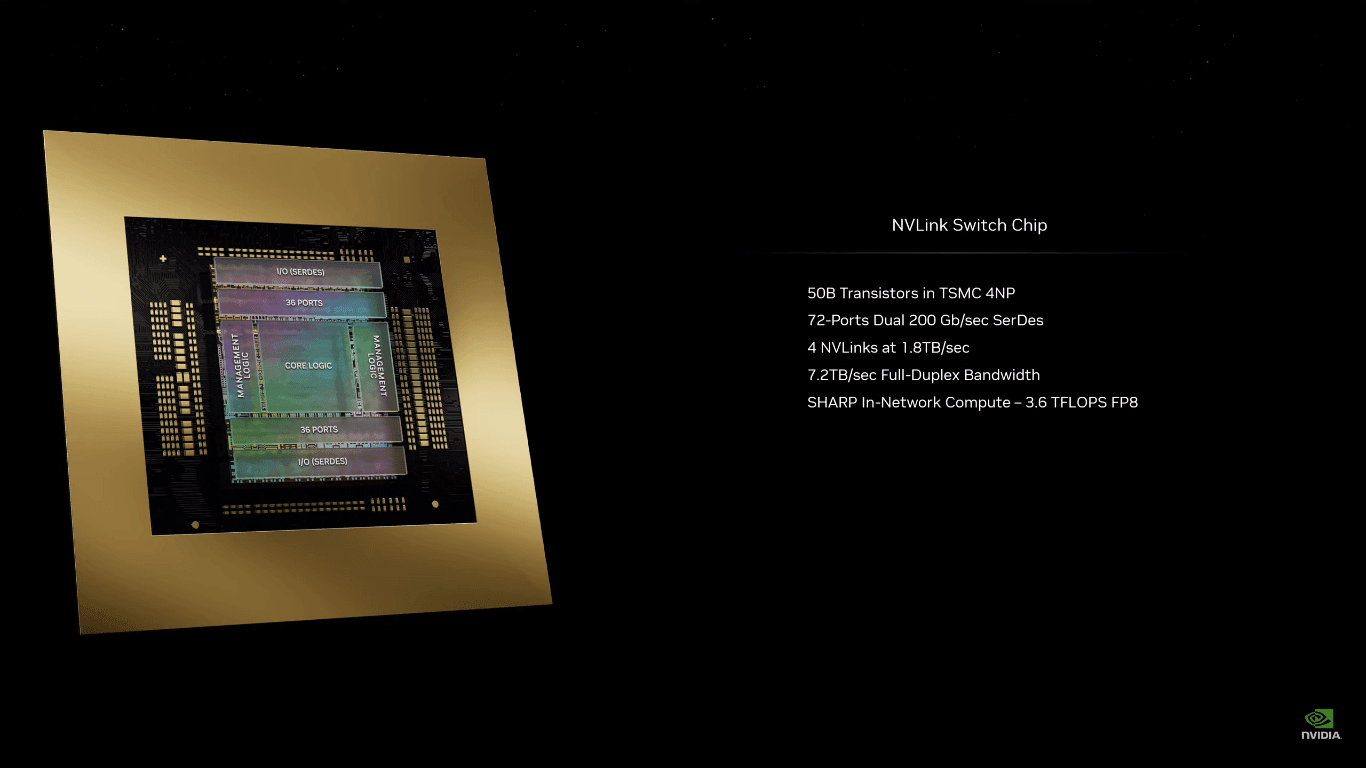

NVLink Switch Chip

The NVLink Switch Chip is a critical component in Nvidia’s high-performance computing (HPC) ecosystem, designed to facilitate seamless, high-speed communication between GPUs within and across servers. Here are the key features and specifications of the NVLink Switch Chip:

- High Bandwidth: Fifth-generation NVLink, introduced alongside Blackwell, provides 1.8 TB/s of bidirectional bandwidth per GPU, double the 900 GB/s of the previous generation. Transfer rates of this order are essential for complex AI and HPC workloads.

- Scalable Interconnects: It supports a non-blocking compute fabric that can interconnect up to 576 GPUs, allowing for full all-to-all communication at incredible speeds. This scalability is crucial for building powerful, end-to-end computing platforms capable of handling the most demanding tasks.

- Advanced Communication Capability: The chip is equipped with engines for NVIDIA Scalable Hierarchical Aggregation Reduction Protocol (SHARP)™, which facilitate in-network reductions and multicast acceleration. This feature enhances the performance of collective operations within multi-GPU systems.

- Integration with NVLink: The NVLink Switch Chip works in tandem with NVLink, a direct GPU-to-GPU interconnect that scales multi-GPU I/O within the server. NVSwitch connects multiple NVLinks to provide full-bandwidth GPU communication within a single node and between nodes.

- Exascale Computing: NVLink Switch Systems add a second tier of NVLink Switches outside the servers to extend the fabric across nodes. The figures often quoted for this design, up to 256 GPUs and 57.6 terabytes per second (TB/s) of all-to-all bandwidth, describe the previous, Hopper-generation system; the Blackwell-generation switch raises the ceiling to 576 GPUs. This kind of fabric enables rapid solving of large AI jobs and supports the development of trillion-parameter AI models.
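A quick back-of-the-envelope calculation shows why these bandwidth figures matter for trillion-parameter models. The sketch below uses the 900 GB/s and 576-GPU figures mentioned above; the 2 bytes per parameter (16-bit weights) is an assumption for illustration, and the result ignores latency and protocol overhead.

```python
def transfer_seconds(num_params, bytes_per_param, bandwidth_gb_per_s):
    """Idealized time to move a full set of model weights over one link."""
    total_bytes = num_params * bytes_per_param
    return total_bytes / (bandwidth_gb_per_s * 1e9)

PARAMS = 1_000_000_000_000  # one trillion parameters
BYTES_PER_PARAM = 2         # assumption: 16-bit weights
NUM_GPUS = 576              # fabric size quoted in the list above

# Time to push every weight through a single 900 GB/s link.
seconds_at_900 = transfer_seconds(PARAMS, BYTES_PER_PARAM, 900)

# Size of each GPU's shard if the weights are split evenly across the fabric.
shard_gb = PARAMS * BYTES_PER_PARAM / NUM_GPUS / 1e9

print(f"{seconds_at_900:.2f} s at 900 GB/s, {shard_gb:.2f} GB per GPU shard")
```

Even under these ideal assumptions, moving the whole model takes seconds rather than milliseconds, which is why such models are sharded across many GPUs and why interconnect bandwidth becomes a limiting factor.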

The NVLink Switch Chip is a testament to Nvidia’s innovation in creating a data center-sized GPU that can meet the speed of business and the increasing compute demands in AI and HPC, including the emerging class of trillion-parameter models.
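The in-network reduction idea behind SHARP can be illustrated with a toy model. The sketch below is not the SHARP protocol itself; it only contrasts a reduction performed "inside the switch" with a naive exchange where every GPU sends its data to every peer. The function names and the message counts are simplifications made for this example.

```python
def switch_allreduce(gpu_vectors):
    """Toy in-network reduction: the switch sums once and multicasts the result."""
    reduced = [sum(values) for values in zip(*gpu_vectors)]
    results = [list(reduced) for _ in gpu_vectors]
    sends_per_gpu = 1  # each GPU sends its vector to the switch exactly once
    return results, sends_per_gpu

def naive_allreduce(gpu_vectors):
    """Toy baseline: every GPU sends its vector to every other GPU."""
    n = len(gpu_vectors)
    results = []
    for receiver in range(n):
        acc = list(gpu_vectors[receiver])
        for sender in range(n):
            if sender != receiver:
                acc = [a + b for a, b in zip(acc, gpu_vectors[sender])]
        results.append(acc)
    sends_per_gpu = n - 1
    return results, sends_per_gpu

# Four hypothetical GPUs, each holding a small gradient vector.
gpu_vectors = [[1, 2], [3, 4], [5, 6], [7, 8]]

switch_results, switch_sends = switch_allreduce(gpu_vectors)
naive_results, naive_sends = naive_allreduce(gpu_vectors)
print(switch_results[0], switch_sends, naive_sends)
```

Both approaches leave every GPU with the same summed vector, but the per-GPU send count stays constant when the switch does the arithmetic, while it grows with the number of GPUs in the naive exchange.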

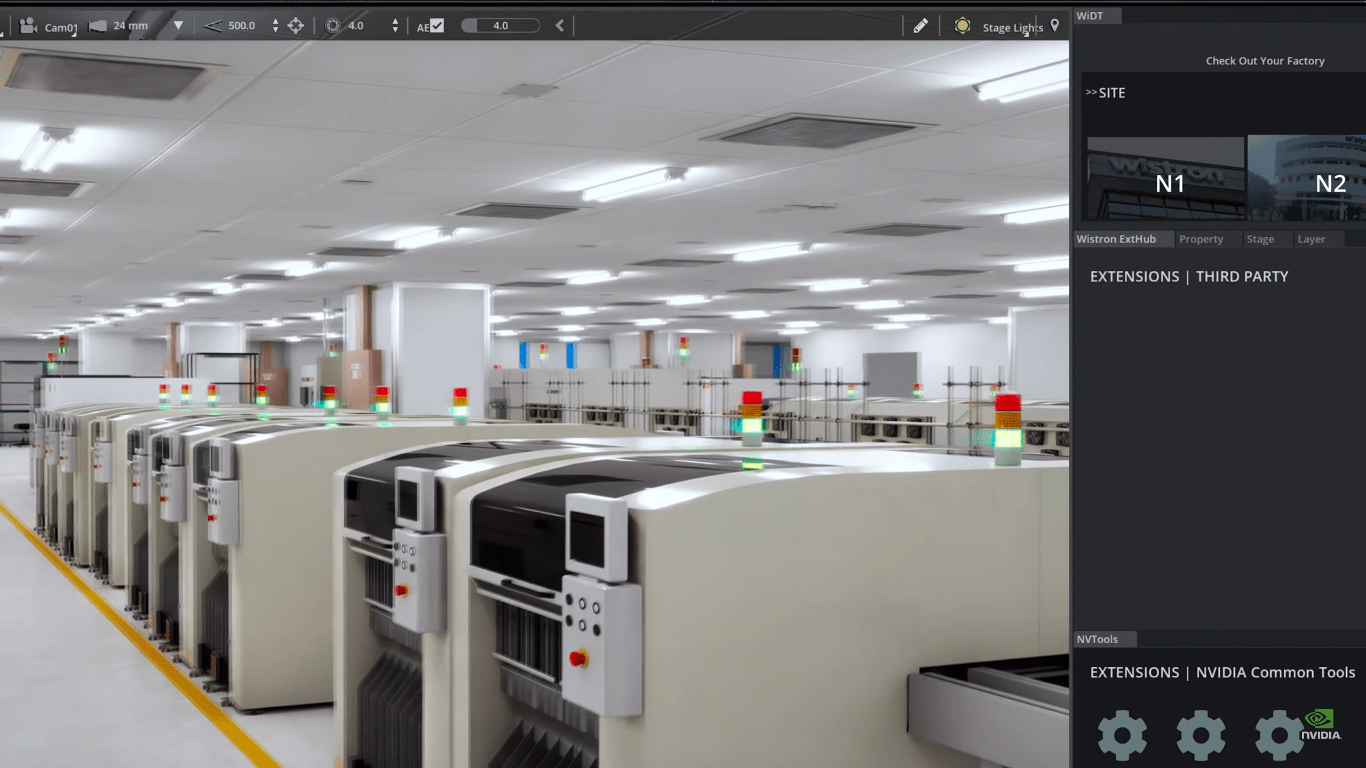

Omniverse Cloud APIs

The Omniverse Cloud APIs are a suite of tools provided by Nvidia to enhance the development and deployment of advanced 3D applications and experiences. Here’s a detailed look at the key components of the Omniverse Cloud APIs:

- Application API: This API is used to query which applications are available in an Omniverse Cloud instance. It allows developers to get information about applications installed to an Omniverse Cloud instance.

- Application Streaming API: It is used to initiate and manage application streams for an Omniverse Cloud instance. This API enables the streaming of applications remotely on Nvidia OVX hardware, accessed through a browser, which means end-users no longer need a high-performance local machine. They can leverage the full potential of USD within a scalable, high-performance clustered computing environment.

- USD (Universal Scene Description) APIs: These include:

  - USD Render: Generates fully ray-traced RTX renders of OpenUSD data.

  - USD Query: Enables scene queries and interactive scenarios.

  - USD Write: Modifies and interacts with USD data.

  - USD Notify: Tracks changes and provides updates.

- Omniverse Channel: Connects users, tools, and Omniverse worlds for collaboration across different scenes.

- Avatar Cloud Engine (ACE): A suite of technologies that developers can use to build and deploy interactive avatars and digital humans within 3D applications and experiences.

- ChatUSD, DeepSearch, Picasso, and RunUSD: These APIs are part of the broader access to Omniverse Cloud APIs that are coming soon, which will further enhance the capabilities for developers.

The Omniverse Cloud APIs connect a rich developer ecosystem of simulation tools and applications, enabling full-stack training and testing with high-fidelity, physically based sensor simulation. They are designed to facilitate the creation of digital twins and immersive simulations that can be used across various industries.
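For readers unfamiliar with the data these APIs operate on, the sketch below builds a tiny OpenUSD layer in its human-readable text form (`.usda`) using only the Python standard library. It does not call any Omniverse Cloud API; the prim names and the helper function are made up for this example, and real projects would normally use the OpenUSD Python bindings instead of string formatting.

```python
def make_usda_layer(root_name, sphere_name, radius):
    """Return the text of a minimal USD layer with one sphere under a root prim."""
    return (
        "#usda 1.0\n"
        "(\n"
        f'    defaultPrim = "{root_name}"\n'
        ")\n"
        "\n"
        f'def Xform "{root_name}"\n'
        "{\n"
        f'    def Sphere "{sphere_name}"\n'
        "    {\n"
        f"        double radius = {radius}\n"
        "    }\n"
        "}\n"
    )

# Hypothetical scene: a root transform named "World" holding a sphere named "Ball".
layer_text = make_usda_layer("World", "Ball", 2.0)
print(layer_text)
```

A service that renders, queries, or modifies a scene is ultimately reading or changing a hierarchy of prims and attributes like this one.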

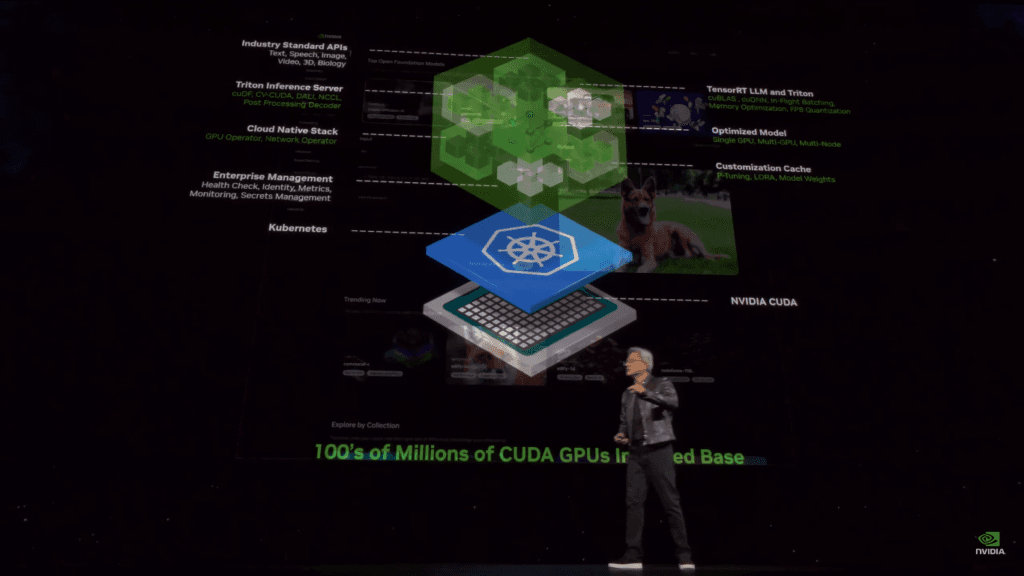

NVIDIA Inference Microservices (NIM)

NVIDIA Inference Microservices (NIM) is a comprehensive suite of tools designed to facilitate the deployment of AI models into production environments. Here’s a detailed breakdown of NIM’s features and capabilities:

- Microservices for Generative AI: NIM includes a wide range of microservices that support the deployment of generative AI applications and accelerated computing. It’s part of NVIDIA AI Enterprise 5.0 and is available from leading cloud service providers, system builders, and software vendors.

- Optimized Inference: NIM microservices optimize inference for a variety of popular AI models from NVIDIA and its partner ecosystem. They are powered by NVIDIA inference software, including Triton Inference Server, TensorRT, and TensorRT-LLM, which significantly reduce deployment times from weeks to minutes.

- Security and Manageability: NIM provides security and manageability based on industry standards, ensuring compatibility with enterprise-grade management tools. This makes it a secure choice for businesses looking to deploy AI solutions.

- NVIDIA cuOpt: This is a GPU-accelerated AI microservice within the NVIDIA CUDA-X collection that has set world records for route optimization. It enables dynamic decision-making that can reduce cost, time, and carbon footprint.

- Ease of Use: Developers can work from a browser using cloud APIs to compose apps that can run on systems and serve users worldwide. This ease of use is a key factor in NIM’s adoption by developers.

- Broad Adoption: NIM microservices are being adopted by leading application and cybersecurity platform providers, including companies like Uber, CrowdStrike, SAP, and ServiceNow.

- Future Capabilities: NVIDIA is working on more capabilities, such as the NVIDIA RAG LLM operator, which will move co-pilots and other generative AI applications from pilot to production without rewriting any code.

NIM represents NVIDIA’s effort to make AI more accessible and easier to deploy for businesses of all sizes. Its integration into NVIDIA AI Enterprise 5.0 signifies a major step forward in the democratization of AI technologies.
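As a rough idea of what calling a deployed language-model microservice might look like, the sketch below builds (but does not send) an HTTP request using only the Python standard library. It assumes the service exposes an OpenAI-style chat completions route, which is how NIM language-model endpoints are generally described; the host, port, and model name are placeholders, not real values.

```python
import json
import urllib.request

BASE_URL = "http://localhost:8000"  # placeholder: wherever the microservice runs
MODEL_NAME = "example-llm"          # placeholder: not a real model identifier

def build_chat_request(prompt, max_tokens=64):
    """Build a POST request for an assumed OpenAI-style chat completions route."""
    payload = {
        "model": MODEL_NAME,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

request = build_chat_request("Summarize the GTC 2024 keynote in one sentence.")
body = json.loads(request.data)
print(request.get_method(), request.full_url)
print(body["messages"][0]["content"])
```

Sending the request would be a single call to `urllib.request.urlopen(request)` against a running service; it is left out here so the sketch runs without a deployment.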

Nvidia’s Earth-2

Nvidia’s Earth-2 is an ambitious initiative aimed at accelerating climate and weather predictions through high-resolution simulations augmented by AI. Here are the key aspects of the Earth-2 platform:

- High-Performance Computing: Earth-2 leverages Nvidia’s advanced computing capabilities to run accelerated, AI-augmented simulations for climate and weather modeling.

- Interactive Visualization: The platform includes interactive visualization services, allowing for high-fidelity depictions of weather conditions globally.

- Data Federation: Omniverse Nucleus is part of Earth-2, providing a data federation engine that offers transparent data access across external databases and live feeds.

- AI Services: Earth-2’s AI services enable climate scientists to access generative AI-based models, such as CorrDiff diffusion models, which can generate large ensembles of high-resolution predictions.

- Simulation Services: These services will allow insights from weather and climate simulations to be gathered, aiding in planning for extreme weather scenarios.

The Earth-2 platform is designed to provide a path to simulate and visualize the global atmosphere at unprecedented speed and scale, supporting the development of kilometer-scale climate simulations and large-scale AI training and inference. This project represents a significant step forward in the use of AI for environmental research and the fight against climate change.
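The value of generating large ensembles is that the spread between members indicates how uncertain a forecast is. The sketch below shows that idea with a toy three-member ensemble; the temperatures are invented for illustration and have nothing to do with actual Earth-2 or CorrDiff output.

```python
from statistics import mean, pstdev

def ensemble_summary(members):
    """Return per-cell mean and spread (population std dev) across members."""
    cells = list(zip(*members))  # regroup values so each tuple is one grid cell
    means = [mean(cell) for cell in cells]
    spreads = [pstdev(cell) for cell in cells]
    return means, spreads

# Invented temperatures (degrees C) for three grid cells from three ensemble members.
members = [
    [20.0, 22.0, 25.0],
    [21.0, 22.0, 27.0],
    [19.0, 22.0, 29.0],
]

means, spreads = ensemble_summary(members)
print(means, spreads)
```

Here the members agree exactly on the second cell and disagree most on the third, so a forecaster would treat the third cell's prediction as the least certain.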

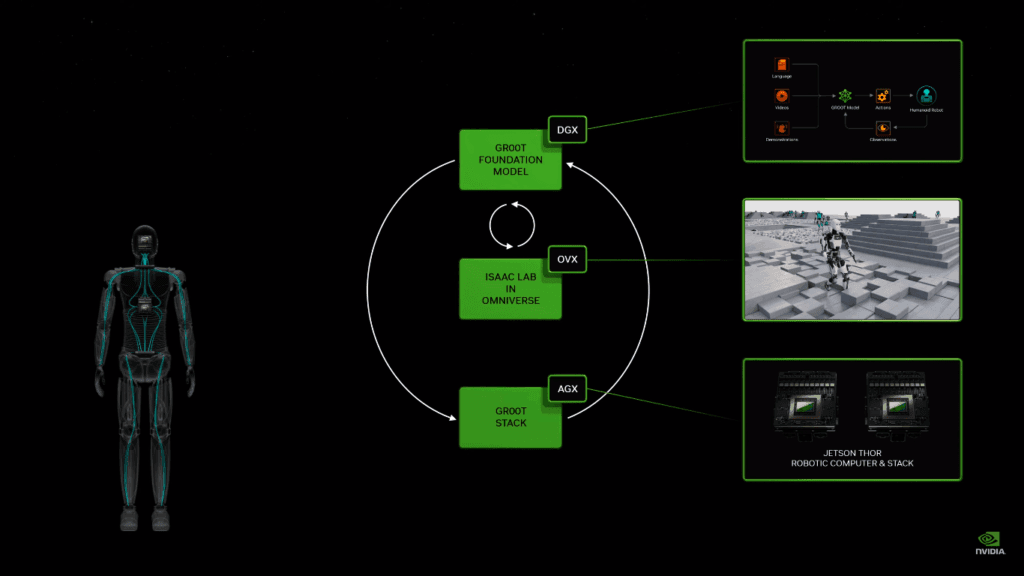

Project GR00T

Project GR00T is an NVIDIA initiative aimed at advancing robotics and artificial intelligence. Below are some key details:

- Generalist Robot 00 Technology (GR00T): NVIDIA introduced Project GR00T as a foundation model for humanoid robots. Robots powered by GR00T are designed to understand natural language and emulate human movements by observing them. The goal is to create a versatile foundation that enables significant advancements in artificial general robotics.

- Jetson Thor: NVIDIA has developed a new computer system called Jetson Thor, specifically tailored for humanoid robots within Project GR00T. Jetson Thor features a GPU based on the newly announced Blackwell GPU architecture. With a transformer engine capable of delivering 800 teraflops of 8-bit floating-point AI performance, Jetson Thor can run multimodal generative AI models like GR00T.

- Upgrades to NVIDIA Isaac Robotics Platform: NVIDIA also showcased enhancements to its NVIDIA Isaac robotics platform. These upgrades make robotic arms smarter, more flexible, and more efficient, making them ideal for use in AI factories and industrial settings globally.

Project GR00T represents a significant step forward in the development of humanoid robots, and Jetson Thor plays a crucial role in powering these advancements.

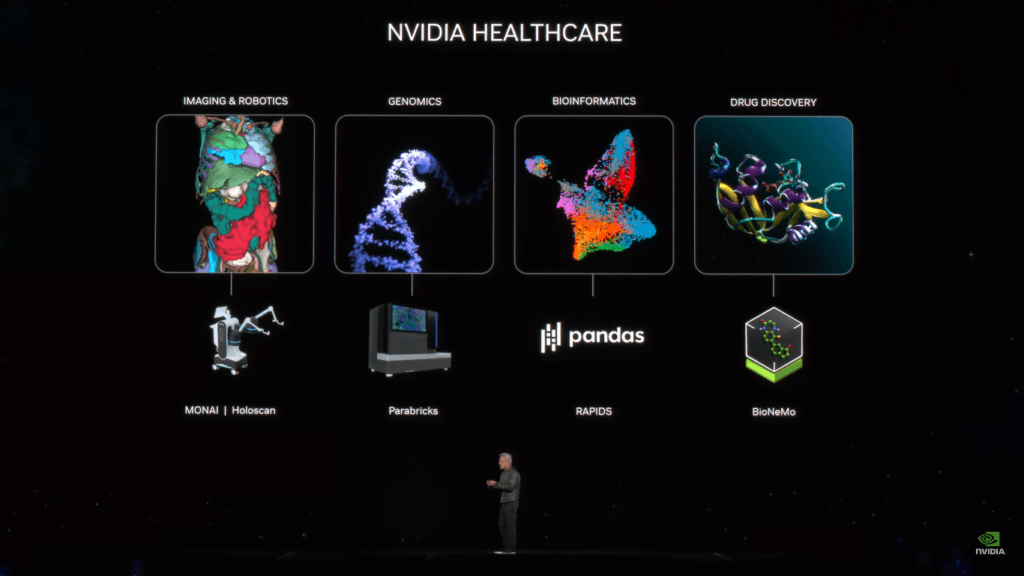

Nvidia BioNeMo

Nvidia BioNeMo is a generative AI platform specifically designed for drug discovery. It simplifies and accelerates the process of training models using proprietary data and scales the deployment of models for drug discovery applications. Here are some detailed features and benefits of Nvidia BioNeMo:

- State-of-the-Art Biomolecular Models: BioNeMo provides AI models for 3D protein structure prediction, de novo protein and small molecule generation, property predictions, and molecular docking.

- Turnkey Solution: The platform offers a web interface or cloud APIs for easy access to pretrained models for interactive inference, visualization, and experimentation.

- Scalable AI Services: BioNeMo allows for the building of enterprise-grade generative AI workflows with GUI and API endpoints on scalable, managed infrastructure.

- Training Framework: BioNeMo Training is an end-to-end optimized training service with state-of-the-art models, data loaders, training workflows, and validation in the loop, supported by enterprise-level assistance.

- Microservices: BioNeMo microservices, including cloud APIs, provide rapid access to optimized biomolecular AI models, enabling users to harness generative AI for drug discovery applications efficiently.

- AI Supercomputer in the Cloud: NVIDIA DGX Cloud, powered by NVIDIA Base Command Platform, offers a multi-node AI training-as-a-service solution to optimize and train models.

- Ecosystem Support: BioNeMo is supported by documentation, tutorials, and community support to help users get started and explore the platform’s capabilities.

BioNeMo represents NVIDIA’s commitment to advancing AI-powered drug discovery by providing a comprehensive platform that offers both model development and deployment, aiming to accelerate the journey to AI-powered drug discovery.

The Ripple Effect of the Keynote

Jensen Huang’s keynote at GTC 2024 had notable effects beyond the conference itself, particularly in the stock market. Here are some of the effects observed:

- Stock Market Reaction: Nvidia’s stock experienced fluctuations around the time of the keynote. While there was an initial drop in after-hours trading, the overall sentiment was positive, with options traders showing significant interest in the stock.

- Industry Analysts’ Response: Wall Street analysts reacted favorably to the announcements made during the keynote, with predictions of sustained revenue growth for Nvidia into 2025. The new Blackwell chip, in particular, was highlighted as a step ahead of competitors, cementing Nvidia’s lead in AI technologies.

- Technology Sector Influence: The keynote also influenced the broader technology sector, especially companies involved in AI, high-performance computing, gaming, and autonomous vehicles. The announcements were seen as indicative of Nvidia’s innovative capabilities and its strong position in the market.

Huang’s keynote was a testament to Nvidia’s commitment to advancing AI technology. It was a message to the world about the untapped potential of AI and Nvidia’s pivotal role in the AI revolution.

GTC 2024 and Huang’s keynote have set a new benchmark for AI innovation, promising a future where technology amplifies human capability and creativity. As we digest the wealth of insights and breakthroughs presented, it’s evident that GTC 2024 was a defining moment in AI history.