Can We Trust AI-Written Content? A Look at the Rise of Large Language Models

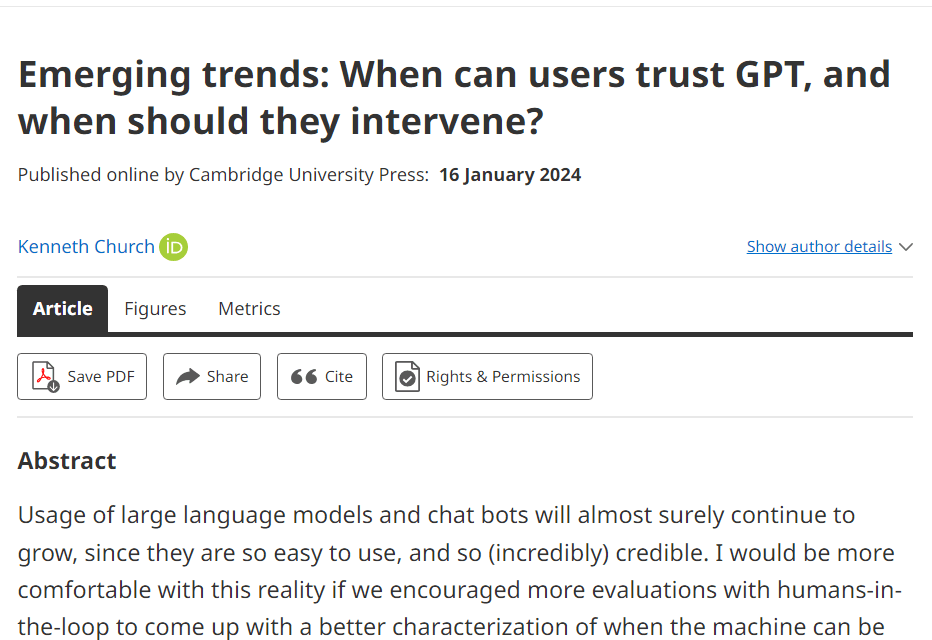

The introduction of ChatGPT by OpenAI has sparked tremendous excitement about the potential of large language models (LLMs). ChatGPT demonstrates an uncanny ability to generate human-like conversational text in response to virtually any prompt, while remaining versatile, eloquent and easy to use. However, as Professor Kenneth (Ken) Church of Northeastern University explores in a recent study published by Cambridge University Press, ChatGPT also has significant limitations and risks that users should be aware of. Without proper diligence and skepticism, overreliance on ChatGPT could propagate misinformation and erode confidence in AI.

Professor Ken Church is a senior research scientist at the Institute for Experiential AI at Northeastern University. The institute develops cutting-edge, human-centric AI solutions and applies them to real-world problems, fusing what algorithms do well with what human intelligence does best. Its research areas include life sciences, cybersecurity, environment, infrastructure, telecom, healthcare, finance, and responsible AI.

The institute’s faculty members come from multiple disciplines and departments, and they collaborate with industry, academia, and the public sector to create real value by solving AI problems. The institute also runs an AI Solutions Hub that partners with AI experts to build working solutions for industry, academia, and society.

The institute’s goal is to advance the state of the art in AI through applied solutions with a human in the loop.

The Study: Testing ChatGPT’s Capabilities and Limitations

To probe ChatGPT’s strengths and weaknesses, Church assigned students in his natural language processing class to write essays using the AI assistant along with other tools like Google and Stack Overflow. The results revealed ChatGPT’s exceptional fluency and conversational ability. It could craft high-quality metaphors, documentation, outlines and thesis statements, often better than what search engines returned. For example, ChatGPT ably explained sports metaphors that many international students were unfamiliar with. It also provided useful programming documentation and accurately summarized article contents.

However, ChatGPT performed remarkably poorly when asked to provide supporting quotes and references. It readily fabricated nonexistent papers, authors and citations, complete with convincing but false details. Despite having discussed the risks of AI hallucinations in class, students still propagated this misinformation in their essays, valuing ChatGPT’s convenience over truth and accuracy. This illustrates the inherent danger of overtrusting ChatGPT’s captivating outputs.

The Need for Healthy Skepticism and Human Oversight

Church argues that, while impressive, ChatGPT lacks grounded reasoning and cannot fact-check itself. Its fluency promotes unwarranted trust in its authoritative-sounding but often inaccurate information. More caution and evaluation are needed to determine when ChatGPT can be trusted and when human oversight is essential.

Users have a responsibility to verify information rather than blindly reproducing ChatGPT’s text. The court of public opinion should penalize trafficking in AI-generated misinformation. Fact-checking and web searches should counterbalance ChatGPT’s weaknesses, because misinformation is no more excusable when it comes from an AI. Otherwise, overreliance could erode confidence in all digital information.

Co-Creating a Symbiotic Future

Going forward, Church advocates co-creation between humans and AI, improving both in tandem. Models can be enhanced through better training techniques, while humans cultivate skills for critical evaluation. Constructive symbiosis will come from exploiting the complementary strengths of each. ChatGPT should assist human creativity and reasoning, not replace them through unchecked automation.

Responsible innovation requires maintaining meaningful human agency and oversight over increasingly capable systems like ChatGPT. We must learn when to trust them and when to intervene. With wisdom, we can harness the power of AI while navigating the subtle risks such systems pose if given free rein. The path forward involves thoughtful partnership between people and machines.

ChatGPT’s Remarkable Strengths

To appreciate the excitement around ChatGPT while also understanding its limitations, it is instructive to examine some of its most impressive capabilities:

- Creative writing: ChatGPT can generate essays, stories, poetry, song lyrics and more with strong coherence and relevance to prompts. Its outputs display sophistication lacking in earlier AI.

- Conversational ability: The system handles multi-turn dialogue admirably, a huge leap over predecessors constrained to single exchanges. Its responses take context into account and build on earlier turns.

- Versatility: ChatGPT tackles a mind-boggling array of topics with aplomb, from explaining scientific concepts to rendering movie scripts, recipes and programming solutions.

- Ease of use: Requiring only plain text prompts, ChatGPT greatly lowers the barrier to leveraging AI. It avoids the need for specialized knowledge or coding.

- Fluency: Its writing flows smoothly and naturally, with far fewer of the telltale repetitive or nonsensical outputs that betray an artificial origin.

- Metaphors and analogies: ChatGPT capably handles figurative language, which has historically challenged AI because of its abstraction.

- Summarization: The system can recap lengthy text coherently, hitting key points and staying within specified word limits.

- Creativity: ChatGPT can generate original poems, songs, stories and more based on minimal direction, with outputs that display a creative spark.

- Humor: The system exhibits a capacity for humor, including processing and responding to jokes as well as generating its own amusing content.

- Documentation: As Church’s students discovered, ChatGPT creates useful programming documentation, often better than what search engines return.

Together, these capabilities account for much of the hype and high user engagement ChatGPT has garnered. For many everyday applications, it represents a marvelous step forward in democratized AI.

ChatGPT’s Concerning Limitations

However, Church’s assignment also highlighted some major current limitations of ChatGPT that require caution on the user’s part:

- Fabrication: The system readily fabricates bogus facts, quotes and references and presents them as true. This dangerous tendency propagates misinformation.

- Lack of fact-checking: ChatGPT cannot reliably vet or validate the information it provides. It has no inherent sense of accuracy.

- Lack of uncertainty quantification: The system offers no reliable warning about possible mistakes or uncertainty in its outputs. It presents its text as equally true and authoritative.

- Limited reasoning: Despite advances, the system lacks robust logical reasoning and semantic understanding, reducing reliability.

- Bias amplification: ChatGPT can amplify biases present in its training data, requiring mitigation efforts.

- Ethical gaps: Without human-aligned values and ethics, ChatGPT’s advice can potentially cause harm if applied without oversight.

- Unpredictability: Subtle prompt variations produce erratic results, from brilliance to nonsense. Mastering its capabilities requires experience.

Impressive as ChatGPT is, these deficiencies warrant a healthy skepticism. Users should judiciously verify its information, interpret its advice carefully and use the system thoughtfully. Integrating human oversight addresses the system’s limitations while harnessing its strengths.

Responsible Paths Forward with ChatGPT

Rather than unbridled enthusiasm or fear over AI like ChatGPT, Professor Church advocates a measured, responsible approach balancing promise and risk:

- Greater evaluation: More research should determine when ChatGPT outputs are trustworthy and when they require intervention.

- User responsibility: Users have an ethical obligation to fact-check AI instead of spreading misinformation.

- Penalizing falsehoods: Public attitudes and policies should strongly discourage trafficking in AI-generated misinformation.

- “Co-creation” model: Humans and AI systems can constructively advance together through collaboration.

- Critical thinking: Educational efforts should sharpen users’ ability to distinguish credible from dubious AI-produced text.

- Improved training: Continued progress in areas like common sense knowledge and ethics will enhance future systems.

- Values alignment: Aligning AI systems with human values and morality remains an urgent priority.

- Transparency: Being open about AI limitations builds appropriate trust and expectations among users.

- Judicious usage: Tasks requiring semantic understanding, reasoning and factual accuracy may be best left to humans for now.

- Ongoing oversight: Maintaining meaningful human agency reduces risks as AI capabilities grow.

With wisdom, ethics and responsible innovation, society can maximize the benefits of AI like ChatGPT while minimizing its dangers. The prudent path involves neither unchecked enthusiasm nor excessive dread, but instead cultivating a thoughtful, balanced relationship between people and technology focused on our shared well-being.