GPT-5 Fails Over 50% of Real-World Orchestration Tasks in MCP-Universe Benchmark

Summary

– Salesforce AI Research developed MCP-Universe, an open-source benchmark that evaluates how LLMs interact with real-world Model Context Protocol (MCP) servers in enterprise scenarios.

– The benchmark tests models on actual MCP servers across six core domains: location navigation, repository management, financial analysis, 3D design, browser automation, and web search.

– Testing revealed that even top models like GPT-5 struggle with long contexts and unfamiliar tools; GPT-5, the best performer, still failed more than half of the enterprise-grade tasks.

– MCP-Universe uses execution-based evaluation rather than an LLM-as-a-judge approach, to better assess performance on tasks involving real-time, dynamic data.

– The benchmark aims to help enterprises identify where models fail and improve their AI implementations through more realistic testing.

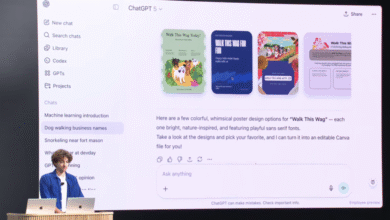

Enterprise AI leaders seeking to understand how large language models perform in real-world scenarios now have a powerful new evaluation tool. Salesforce AI Research has introduced MCP-Universe, an open-source benchmark designed to test how effectively models interact with Model Context Protocol (MCP) servers across diverse, practical business environments. The framework moves beyond traditional, isolated performance metrics to assess genuine tool integration and multi-step reasoning.

Unlike conventional benchmarks that examine narrow capabilities like math or coding in isolation, MCP-Universe evaluates six core enterprise domains: location navigation, repository management, financial analysis, 3D design, browser automation, and web search. The system connects to 11 real MCP servers and includes 231 tasks that reflect actual business operations, from route planning using Google Maps to financial market analysis via Yahoo Finance.

A key finding from initial testing reveals that even top-tier models struggle significantly when faced with complex, real-time tasks. OpenAI’s recently released GPT-5 achieved the highest success rate among tested models, particularly excelling in financial analysis, yet it still failed to complete more than half of the assigned enterprise-grade tasks. Other leading models like xAI’s Grok-4 and Anthropic’s Claude-4 Sonnet also showed notable limitations, especially in handling long-context scenarios and unfamiliar tools.

Junnan Li, director of AI research at Salesforce, emphasized that models frequently lose coherence with extended inputs and cannot adapt to unknown tools as fluidly as humans. This gap underscores the importance of using integrated platforms rather than relying on a single model for critical agent-based workflows.

The research team opted for execution-based evaluation instead of the common LLM-as-a-judge approach, arguing that static knowledge benchmarks cannot adequately assess dynamic, real-time decision-making.

MCP-Universe employs three types of evaluators: format checkers for structural compliance, static assessors for time-invariant correctness, and dynamic evaluators for answers whose correct value changes over time, such as stock prices or software issues. This multi-faceted approach aims to provide a more holistic and demanding assessment of model capabilities.
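To make the three evaluator types more concrete, here is a minimal Python sketch for a hypothetical stock-price task in which the agent must answer with JSON containing `ticker` and `price` fields. All function names, the JSON schema, the 1% tolerance, and the `fake_live_price` stand-in are illustrative assumptions, not MCP-Universe's actual code or API.

```python
import json
from typing import Callable

# Hypothetical evaluators mirroring the three categories described above.
# None of these names or schemas come from MCP-Universe itself.


def format_check(output: str) -> bool:
    """Format checker: is the answer a JSON object with a string ticker and a numeric price?"""
    try:
        data = json.loads(output)
    except json.JSONDecodeError:
        return False
    return (
        isinstance(data, dict)
        and isinstance(data.get("ticker"), str)
        and isinstance(data.get("price"), (int, float))
    )


def static_check(output: str, expected_ticker: str) -> bool:
    """Static assessor: compares against a fixed answer that does not change over time."""
    return json.loads(output)["ticker"] == expected_ticker


def dynamic_check(
    output: str,
    fetch_live_price: Callable[[str], float],
    tolerance: float = 0.01,  # assumed: accept prices within 1% of the live value
) -> bool:
    """Dynamic evaluator: obtains the ground truth at evaluation time, not from a stored label."""
    data = json.loads(output)
    live = fetch_live_price(data["ticker"])
    return abs(data["price"] - live) <= tolerance * live


def evaluate(
    output: str, expected_ticker: str, fetch_live_price: Callable[[str], float]
) -> dict:
    """Run the format check first; content checks only make sense on well-formed output."""
    results = {"format": format_check(output)}
    if not results["format"]:
        results["static"] = False
        results["dynamic"] = False
        return results
    results["static"] = static_check(output, expected_ticker)
    results["dynamic"] = dynamic_check(output, fetch_live_price)
    return results


def fake_live_price(ticker: str) -> float:
    """Stand-in for a real-time market-data lookup (hypothetical)."""
    return 100.0


print(evaluate('{"ticker": "CRM", "price": 100.5}', "CRM", fake_live_price))
# → {'format': True, 'static': True, 'dynamic': True}
```

Ordering the checks this way means a malformed answer fails fast, while a well-formed answer can still pass the static check and fail the dynamic one if its price is stale.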

Among open-source models, Z.ai’s GLM-4.5 delivered the strongest performance, though all models exhibited clear weaknesses in browser automation, geographic reasoning, and financial tasks. The results suggest that current frontier models still lack the robustness needed for dependable enterprise deployment without additional scaffolding, reasoning support, or guardrails.

Salesforce hopes that MCP-Universe will help organizations identify specific failure points in their AI systems, enabling better design of tools and workflows. By offering an extensible framework for building and evaluating agents, the benchmark encourages continued innovation in making LLMs more reliable and context-aware in business settings.

(Source: VentureBeat)