GitHub Copilot: Why It’s Not Your Ideal Coding Partner

Summary

– AI programming assistants like GitHub Copilot are becoming common in software development, aiming to boost efficiency but sparking debate.

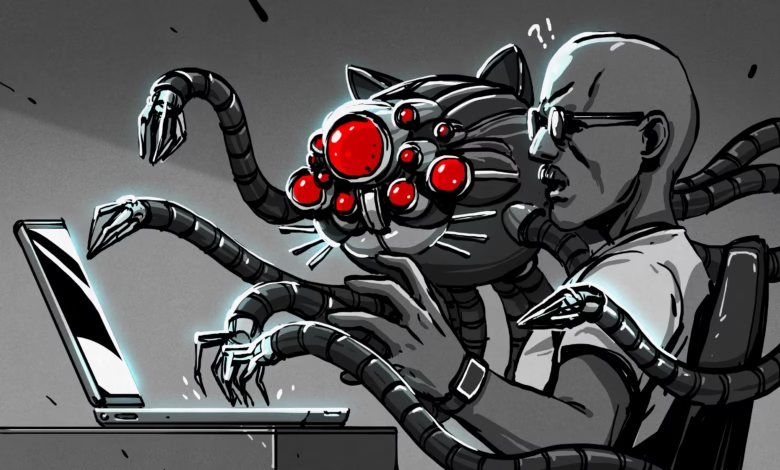

– [Jj] strongly dislikes AI programming assistants, comparing the frustration they cause to an intensely negative experience.

– Many developers share this frustration, feeling that AI tools act like advanced autocomplete without real understanding.

– [Jj] argues that tools like Copilot diminish programmers by eroding their critical-thinking and problem-solving skills.

– Research suggests that relying on LLMs during development may harm mental faculties, while developers remain accountable for code quality and any issues that arise.

AI-powered coding assistants like GitHub Copilot have sparked intense discussion among developers, promising efficiency but raising concerns about their long-term impact on programming skills. While these tools are promoted as accelerating development by suggesting code snippets, many argue that they undermine the very essence of problem-solving in software engineering.

The frustration isn’t unfounded. For some developers, tools like Copilot feel like little more than glorified autocomplete, pulling from vast repositories of existing code without fostering deeper understanding. One vocal critic, [Jj], argues that relying on such assistants erodes critical thinking, a skill fundamental to writing clean, maintainable, and innovative software. The convenience of instant suggestions comes at a cost: the gradual atrophy of a programmer’s ability to reason through complex logic independently.

Research lends weight to these concerns. Studies of large language models (LLMs) in development workflows report mixed outcomes. While LLMs may speed up routine tasks, they often fail to enhance a developer's problem-solving capabilities. Worse, they can introduce subtle errors or outdated practices, leaving the human programmer to clean up the mess. When production issues arise, it's the developer, not the AI, who must defend the code and troubleshoot under pressure.

The debate isn’t just about efficiency; it’s about what kind of programmers we become when we offload too much thinking to machines. Tools like Copilot might save time in the short term, but they risk creating a generation of developers who lean on automation rather than mastering their craft. For those who value deep technical expertise, that’s a trade-off worth questioning.

At the end of the day, code isn't just about getting things to work; it's about understanding why they work. And no AI, no matter how advanced, can replace the human insight required to build truly robust systems.

(Source: Hackaday)