Brain-to-Voice Breakthrough: Real-Time Speech from Thought Becomes Reality

A Voice Restored: Inside the World's First Expressive Speech Neuroprosthesis

Summary

– Scientists developed the world’s first expressive speech neuroprosthesis, enabling a paralyzed man to speak naturally using brain signals.

– The system decodes speech intonation, rhythm, and emotion directly from neural activity, producing fluent, spontaneous conversation.

– A 45-year-old ALS patient received a brain implant with 256 electrodes, and AI translated his neural signals into a synthetic version of his original voice.

– The breakthrough allows real-time speech synthesis with emotional tone, emphasis, and even singing, far surpassing previous slow, robotic BCIs.

– While still experimental, this technology marks a shift toward more human-centered, expressive communication for people with severe disabilities.

In a world first, scientists have helped a man with severe paralysis speak again, not through predictive text or robotic phrases, but through a natural, expressive voice generated directly from his brain signals. The study, published last week in Nature, marks a critical advance in brain–computer interface (BCI) technology. For the first time, a system has successfully decoded the intonation, rhythm, and emotional tone of human speech directly from neural activity, producing fluent, spontaneous conversation.

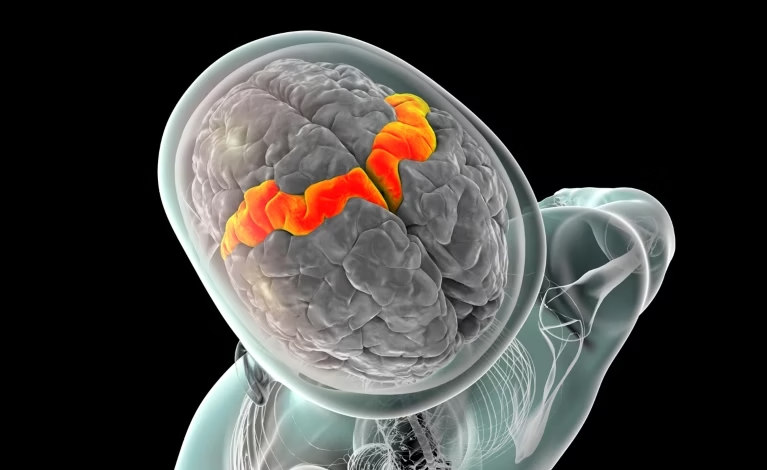

The participant, a 45-year-old man with advanced ALS (amyotrophic lateral sclerosis), had lost nearly all ability to speak. Researchers at the University of California, Davis implanted a paper-thin device with 256 micro-electrodes into the part of his brain responsible for controlling speech articulation. Using deep learning, the system translated brain signals into a synthetic voice trained on recordings of the man’s real voice from before his condition worsened.

“This is the first time we’ve been able to decode both the what and the how of speech,” said neuroscientist Marc Slutzky, a co-author of the study. “Not just the words he wants to say, but how he wants to say them, whether he’s asking a question, making a joke, or even singing a note.”

Speech in Real Time With Emotion, Emphasis, and Melody

One of the standout achievements is the system’s ability to synthesize speech in under 10 milliseconds, which is virtually instantaneous and on par with the delay humans experience during regular conversation. Earlier BCIs often required users to compose sentences letter by letter, or could only decode basic words with long pauses in between.

This new device goes far beyond that. It captures not just phonemes but prosody: the rise and fall of pitch, vocal stress, and rhythm that convey emotional intent. Listeners were able to recognize around 60% of the decoded words in real time, compared with just 4% when he spoke without the interface.

“It’s not just speech, it’s voice, in all its richness,” said lead author Francis Willett, a neuroscientist at Stanford involved in the project. “He can emphasize a word, ask a question, or hum a melody. That level of expression has never been achieved before with a brain implant.”

The participant even demonstrated the ability to sing short musical phrases, a breakthrough hinting at future use cases in music therapy, emotional expression, or even creative performance for people with locked-in conditions.

Why This Signals a New Era in BCIs

Beyond the technical feat, this development signals a shift in how BCIs are designed and used. The emphasis is no longer just on functionality but on humanity, giving users not just a way to communicate but a way to be heard as themselves.

The implant, placed in the ventral precentral gyrus, a motor speech region, captures neural firing every 10 milliseconds. A neural network then decodes the signal into waveforms representing the user’s intended voice. Unlike prior models limited by vocabularies or rigid grammar trees, this BCI outputs free-form, expressive sound, allowing for unconstrained, natural conversation.
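
The capture-and-decode loop described above can be sketched in a few lines of Python. This is a minimal illustration, not the study's actual code: the function names, the 16 kHz sample rate, and the `toy_decoder` stand-in for the trained neural network are all assumptions made for the example. Only the 10-millisecond bin width comes from the article.

```python
from typing import Iterator, List, Sequence

BIN_MS = 10  # bin width from the article: neural activity captured every 10 ms


def bin_spike_counts(spike_times_ms: Sequence[Sequence[float]],
                     duration_ms: int,
                     bin_ms: int = BIN_MS) -> List[List[int]]:
    """Count spikes per electrode in consecutive time bins.

    spike_times_ms holds one sequence of spike times per electrode.
    Returns a list of bins; each bin has one count per electrode.
    """
    n_bins = duration_ms // bin_ms
    bins = [[0] * len(spike_times_ms) for _ in range(n_bins)]
    for electrode, times in enumerate(spike_times_ms):
        for t in times:
            index = int(t // bin_ms)
            if 0 <= index < n_bins:
                bins[index][electrode] += 1
    return bins


def toy_decoder(counts: Sequence[int], samples_per_bin: int) -> List[float]:
    """Hypothetical stand-in for the trained neural network.

    Maps one bin of spike counts to one chunk of audio samples. Here the
    chunk is a constant level equal to the mean spike count, nothing more.
    """
    level = sum(counts) / len(counts)
    return [level] * samples_per_bin


def stream_decode(bins: Sequence[Sequence[int]],
                  sample_rate_hz: int = 16000,  # assumed, not from the article
                  bin_ms: int = BIN_MS) -> Iterator[List[float]]:
    """Yield one audio chunk per bin, so output trails input by about one bin."""
    samples_per_bin = sample_rate_hz * bin_ms // 1000
    for counts in bins:
        yield toy_decoder(counts, samples_per_bin)
```

Calling `bin_spike_counts([[1.0, 12.5, 13.0], [25.0]], duration_ms=30)` on two toy electrodes gives three bins, and `stream_decode` turns each bin into a 160-sample chunk at the assumed sample rate. The point of the sketch is the streaming shape: because audio is produced bin by bin rather than sentence by sentence, the output can keep pace with the speaker.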

Still, the technology remains in its early days. It has so far been tested on a single participant. The research team notes that further trials are needed, especially among stroke patients or those with traumatic injuries, to assess reliability and adaptability.

But the implications are profound. A shift from brain-to-text to brain-to-voice means communication for people with severe disabilities could become far more immediate, intuitive, and emotionally rich.

(Inspired by: Nature)