AMD Launches MI350 AI Accelerator with 35X Faster Inferencing

Summary

– AMD unveiled its open, scalable rack-scale AI infrastructure and Instinct MI350 Series accelerators, which offer 4x more AI compute and 35x faster inferencing than the previous generation.

– CEO Lisa Su emphasized the shift to inference-driven AI and highlighted the importance of open industry collaboration, indirectly critiquing Nvidia’s closed systems.

– AMD showcased its next-gen AI rack, Helios, powered by MI400 Series GPUs and Zen 6-based Epyc Venice CPUs, targeting hyperscalers and enterprise deployments.

– AMD introduced the ROCm 7 software stack, featuring improved framework support, broader hardware compatibility, and new AI development tools, alongside the AMD Developer Cloud, a managed environment for AI projects.

– Major partners like Meta, Oracle, and Microsoft are adopting AMD’s AI solutions, with Meta using MI300X for Llama inference and Oracle deploying MI355X GPUs in zettascale clusters.

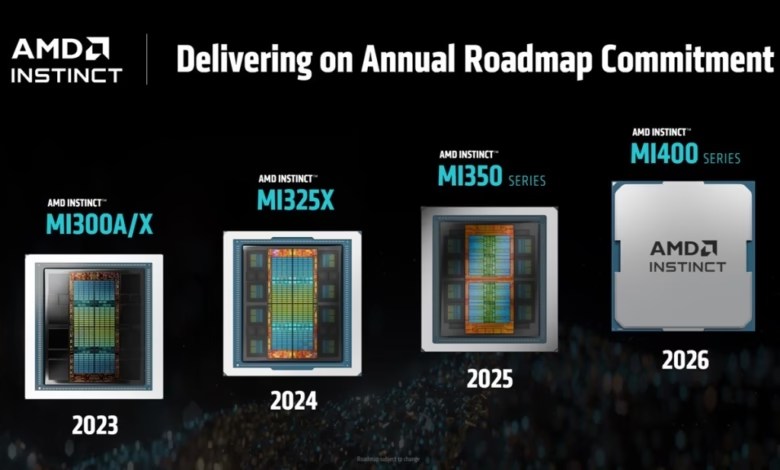

AMD says its new Instinct MI350 Series AI accelerators deliver 35 times faster inferencing than the previous generation. The company unveiled its vision for an end-to-end AI platform at its Advancing AI event, emphasizing open collaboration and scalable infrastructure built on industry standards.

The AMD Instinct MI350 Series offers four times the AI compute performance of the previous generation. Designed for generative AI and high-performance computing, the accelerators are built to combine efficiency with scalability. AMD CEO Lisa Su underscored the timing of the launch, stating that AI inference has reached a critical inflection point.

During the event, AMD showcased its open rack-scale AI infrastructure, powered by the MI350 Series, fifth-gen Epyc processors, and Pensando Pollara network interface cards. Hyperscalers like Oracle Cloud Infrastructure are already deploying these solutions, with broader availability expected in late 2025. The company also previewed its next-generation Helios AI rack, which will leverage upcoming MI400 Series GPUs, Zen 6-based Epyc Venice CPUs, and Pensando Vulcano NICs.

Industry analysts note that AMD is strategically targeting a different market segment than Nvidia, focusing on cost-efficient, open solutions for tier-two and tier-three cloud providers as well as enterprise deployments. The shift toward inference-driven workloads positions AMD favorably, particularly for customers seeking optimized total cost of ownership (TCO).

ROCm 7, AMD's latest open-source AI software stack, improves the developer experience with better framework support, expanded hardware compatibility, and new tools for AI deployment. The company also announced the AMD Developer Cloud, a fully managed environment for AI development, and pointed to partnerships with industry leaders such as OpenAI, Hugging Face, and Grok.

Major tech firms are already using AMD's AI solutions. Meta runs Llama 3 and Llama 4 inference on Instinct MI300X and plans to adopt MI350 and MI400 GPUs. Microsoft runs both proprietary and open-source models on MI300X in Azure, while Oracle Cloud Infrastructure is deploying zettascale AI clusters with up to 131,072 MI355X GPUs.

AMD's focus on energy efficiency remains a key differentiator. The MI350 Series surpassed the company's five-year goal of a 30-fold improvement in energy efficiency for AI training and HPC nodes, achieving a 38-fold improvement. Looking ahead, AMD aims to improve rack-scale energy efficiency 20-fold by 2030, which would sharply reduce the power needed for large-scale AI training.

With strong industry backing and a focus on open, scalable solutions, AMD is positioning itself as a formidable competitor in the AI hardware space. As inference workloads gain prominence, the company’s emphasis on performance, efficiency, and accessibility could reshape the competitive landscape.

(Source: VentureBeat)