Industry Report: Z.ai’s GLM-5 Launch

From Vibe Coding to Agentic Engineering

Summary

– Z.ai has launched GLM-5, a major open-source AI model designed for autonomous, multi-step agentic workflows rather than just conversational tasks.

– It excels at long-horizon planning and directly outputs ready-to-use files like documents and spreadsheets, performing competitively with top proprietary models.

– The model is a significant technical upgrade, scaling to 744 billion parameters and using efficient attention mechanisms to manage long contexts cost-effectively.

– GLM-5 is fully open-source under the MIT License, freely available for commercial use and optimized to run on a wide range of hardware beyond just Nvidia GPUs.

– This release marks a key step in commoditizing frontier AI, providing enterprise-level agentic capabilities without vendor lock-in.

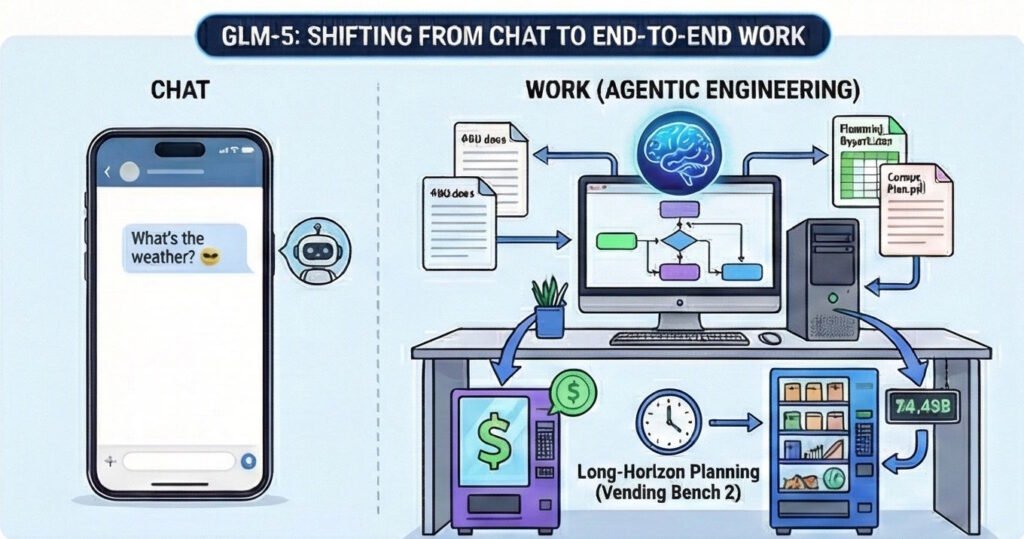

Z.ai has officially launched GLM-5, an open-source model that the company positions as a shift from traditional “chat” interfaces to actual “work” and complex systems engineering. GLM-5 is designed to execute long-horizon, multi-step tasks independently (agentic workflows).

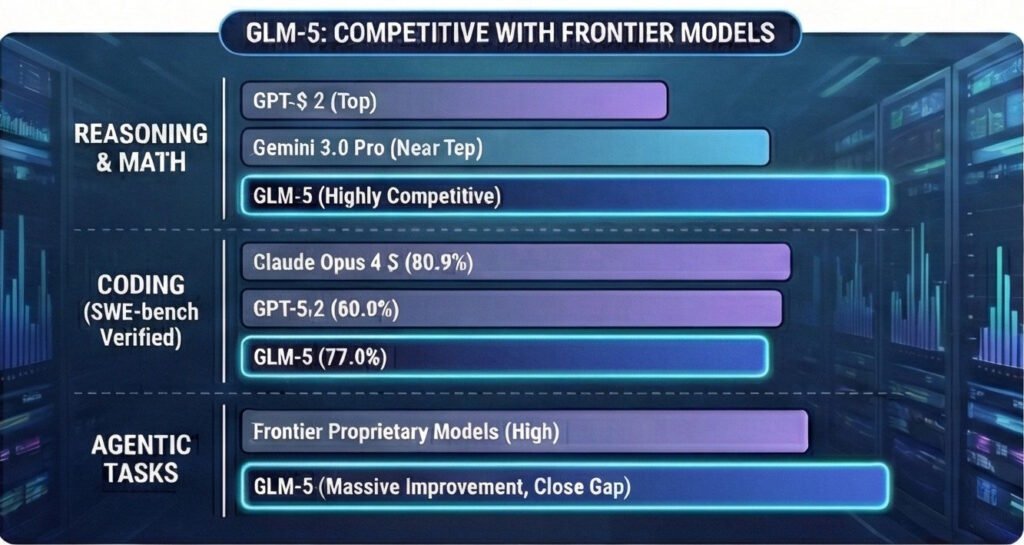

GLM-5 is released under the highly permissive MIT License. Z.ai claims it is the world’s most capable open-source model for reasoning, coding, and agentic tasks, and that it narrows the performance gap with proprietary frontier models like OpenAI’s GPT-5.2, Anthropic’s Claude Opus 4.5, and Google’s Gemini 3.0 Pro.

1. The Core Shift: From Chatting to “Working”

The most significant industry takeaway is GLM-5’s focus on Agentic Engineering. Instead of just answering questions, GLM-5 is built to operate as a digital worker.

- End-to-End Deliverables: GLM-5 can ingest source materials and directly output fully formatted, ready-to-use files (like .docx, .pdf, and .xlsx). It can autonomously create product requirements documents (PRDs), financial reports, lesson plans, and complex spreadsheets.

- Long-Term Planning: The model excels at tasks requiring extended focus. On Vending Bench 2, a benchmark where the AI must run a simulated vending machine business over a one-year horizon, GLM-5 ranked #1 among open-source models. It generated a final account balance of $4,432, about 89% of the $4,967 reached by Claude Opus 4.5, which suggests comparable resource management skills.

- Cross-App Operations: Through integration with frameworks like OpenClaw, GLM-5 can act as a personal assistant that operates directly across a user’s apps and devices, rather than being confined to a browser window.
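The “agentic” pattern described above can be illustrated with a minimal plan-act-observe loop. This is only a toy sketch: `run_agent`, `scripted_planner`, and `toy_tools` are hypothetical names invented for illustration, and the scripted planner stands in for the point where a real system would query the model. It does not reflect the actual interface of GLM-5 or OpenClaw.

```python
from typing import Callable, Dict, List, Tuple

Tool = Callable[[str], str]
Planner = Callable[[str, List[str]], Tuple[str, str]]


def run_agent(goal: str, plan: Planner, tools: Dict[str, Tool],
              max_steps: int = 10) -> List[str]:
    """Plan-act-observe loop: ask the planner for the next tool call,
    run that tool, and record what it returned."""
    observations: List[str] = []
    for _ in range(max_steps):
        tool_name, argument = plan(goal, observations)
        if tool_name == "finish":
            break
        observations.append(tools[tool_name](argument))
    return observations


def scripted_planner(goal: str, observations: List[str]) -> Tuple[str, str]:
    """Hypothetical stand-in for the model: follows a fixed three-step script."""
    steps = [("read", "sales.csv"), ("summarize", "sales.csv"), ("write", "report.txt")]
    if len(observations) < len(steps):
        return steps[len(observations)]
    return ("finish", "")


# Toy tools that only describe what they would do.
toy_tools: Dict[str, Tool] = {
    "read": lambda arg: f"read {arg}",
    "summarize": lambda arg: f"summarized {arg}",
    "write": lambda arg: f"wrote {arg}",
}

trace = run_agent("Produce a sales report", scripted_planner, toy_tools)
print(trace)
```

The `max_steps` cap is a common safeguard in such loops: it bounds how long the agent can run if the planner never decides to finish.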

2. Under the Hood: Technical & Scale Improvements

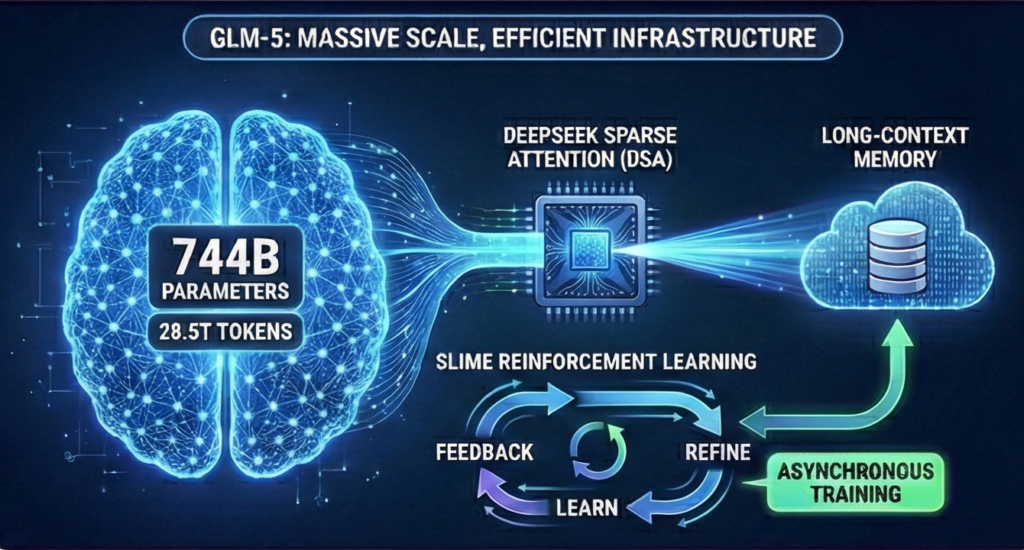

GLM-5 represents a major scaling effort over the GLM-4.5/4.7 generation, while introducing new infrastructure to keep compute costs manageable:

- Massive Scale: The model grew from 355 billion to 744 billion total parameters, roughly a 2.1x increase, with 40 billion “active” parameters (about 5% of the total) in use for any given token. Its pre-training corpus was also expanded from 23 trillion to 28.5 trillion tokens, about 24% more.

- DeepSeek Sparse Attention (DSA): To process very long contexts without driving up deployment costs, Z.ai integrated DSA. This lets the model keep long conversations or large codebases in context while significantly reducing inference costs.

- “Slime” Reinforcement Learning: To bridge the gap between being just “competent” and “excellent,” Z.ai developed a novel asynchronous training infrastructure called slime. This vastly improves how the model learns from feedback, allowing for faster, more refined updates.
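To give a rough intuition for why sparse attention lowers cost, the sketch below compares dense attention with a generic top-k variant in which each query attends only to its k highest-scoring keys. This is an illustrative assumption, not the DSA design used by DeepSeek or Z.ai, whose details this report does not cover. For simplicity the selection step reuses the exact attention scores; a practical system would need a cheaper selector for the savings to materialize.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: List[float]) -> List[float]:
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def weighted_sum(weights: List[float], values: List[Vector]) -> Vector:
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]


def dense_attention(query: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    """Standard scaled dot-product attention over every key."""
    scale = 1.0 / math.sqrt(len(query))
    scores = [dot(query, key) * scale for key in keys]
    return weighted_sum(softmax(scores), values)


def topk_sparse_attention(query: Vector, keys: List[Vector],
                          values: List[Vector], k: int) -> Vector:
    """Attend only to the k keys with the highest scores for this query."""
    scale = 1.0 / math.sqrt(len(query))
    scores = [dot(query, key) * scale for key in keys]
    keep = sorted(range(len(keys)), key=lambda i: scores[i], reverse=True)[:k]
    weights = softmax([scores[i] for i in keep])
    return weighted_sum(weights, [values[i] for i in keep])


keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
query = [2.0, 1.0]

print(dense_attention(query, keys, values))
print(topk_sparse_attention(query, keys, values, k=1))
```

With k equal to the number of keys, the sparse version reduces to dense attention; with a small fixed k, the softmax and value mixing touch only k entries per query regardless of context length.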

3. Competitive Performance

According to Z.ai’s benchmark data, GLM-5 is highly competitive with the top-tier closed-source models across the board:

- Reasoning & Math: Scores exceptionally high on elite math and reasoning benchmarks (like AIME 2026 and Humanity’s Last Exam), trailing only slightly behind GPT-5.2 and Gemini 3.0 Pro.

- Coding: Scores 77.8% on SWE-bench Verified, ahead of other open-source models and within about 3 percentage points of Claude Opus 4.5 (80.9%) and GPT-5.2 (80.0%).

- Tool & Agent Usage: Shows massive improvements in browsing the web, using tools, and managing context over long periods compared to its predecessor, GLM-4.7.
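For readers who want to quantify these comparisons, the short sketch below computes the gaps from the figures quoted in this report (SWE-bench Verified scores and Vending Bench 2 final balances). The helper names `point_gap` and `relative_score` are hypothetical, chosen only for this illustration.

```python
# Figures as quoted in this report (Z.ai's published benchmark data).
swe_bench_verified = {"GLM-5": 77.8, "Claude Opus 4.5": 80.9, "GPT-5.2": 80.0}
vending_bench_2 = {"GLM-5": 4432, "Claude Opus 4.5": 4967}


def point_gap(scores: dict, model: str, baseline: str) -> float:
    """Percentage-point gap between a baseline and a model (positive = model trails)."""
    return round(scores[baseline] - scores[model], 1)


def relative_score(scores: dict, model: str, baseline: str) -> float:
    """Model result expressed as a fraction of the baseline result."""
    return scores[model] / scores[baseline]


for rival in ("Claude Opus 4.5", "GPT-5.2"):
    gap = point_gap(swe_bench_verified, "GLM-5", rival)
    print(f"SWE-bench Verified: GLM-5 trails {rival} by {gap} points")

ratio = relative_score(vending_bench_2, "GLM-5", "Claude Opus 4.5")
print(f"Vending Bench 2: GLM-5 reaches {ratio:.1%} of Claude Opus 4.5's balance")
```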

4. Ecosystem Integration and Availability

Z.ai is making GLM-5 highly accessible to both developers and everyday users:

- Fully Open-Source: The model weights are freely available on Hugging Face and ModelScope under the MIT License, allowing for broad commercial use.

- Hardware Agnostic: While it runs on standard Nvidia GPUs (via vLLM and SGLang), Z.ai has heavily optimized GLM-5 to run on non-Nvidia hardware. It supports chips from Huawei, Moore Threads, Cambricon, and others, which is a major strategic advantage amid global GPU shortages.

- Developer Ready: It easily plugs into popular AI coding agents like Claude Code, OpenCode, and Cline via the Z.ai “GLM Coding Plan.”

- Consumer Access: Users can test the model for free directly on the Z.ai web platform, utilizing both standard “Chat Mode” and a specialized “Agent Mode” for complex document generation.
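As a rough illustration of what self-hosted access might look like, the sketch below builds a chat request for an OpenAI-compatible endpoint of the kind vLLM and SGLang can expose. The base URL, port, and model identifier are assumptions about a local deployment, not values taken from Z.ai's documentation; check the model card and your server's startup settings for the real ones.

```python
import json
import urllib.request

BASE_URL = "http://localhost:8000/v1"  # assumption: a locally running OpenAI-compatible server
MODEL_ID = "GLM-5"  # placeholder: use the identifier your server was started with


def build_chat_request(prompt: str, base_url: str = BASE_URL,
                       model: str = MODEL_ID) -> urllib.request.Request:
    """Build (but do not send) a POST request to the chat completions route."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 256,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the first completion's text.
    Requires a server to actually be listening at the request URL."""
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


request = build_chat_request("Draft a one-paragraph PRD summary.")
print(request.full_url)
```

Calling `send(request)` would perform the network call; it is left out of the example so the sketch runs without a server.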

Bottom Line for the Industry

GLM-5 represents a milestone in the commoditization of frontier-level AI. By open-sourcing a model capable of long-term planning, direct document generation, and complex coding tasks, Z.ai is putting enterprise-grade “agentic” capabilities into the hands of developers globally, free of proprietary vendor lock-in.