EnCharge AI Launches EN100 Chip with Breakthrough Analog Memory Tech

Summary

– EnCharge AI launched the EN100, an AI accelerator chip using analog in-memory computing, designed for laptops, workstations, and edge devices, delivering 200+ TOPS within tight power constraints.

– The EN100 enables advanced AI applications to run locally, reducing reliance on cloud infrastructure by offering high efficiency (20x better performance per watt) and programmability for current and future AI models.

– Available in M.2 (for laptops) and PCIe (for workstations) form factors, the EN100 provides GPU-level compute power at lower cost and energy consumption, supporting frameworks like PyTorch and TensorFlow.

– EnCharge AI’s technology originated from Princeton University research, addressing AI’s energy and memory bottlenecks with analog in-memory computing, making it 20x more efficient than digital solutions.

– The company aims to democratize AI by moving inference to edge devices, sidestepping data center limitations such as cost, latency, and environmental impact. It is backed by $144M in funding and partnerships such as DARPA’s OPTIMA program.

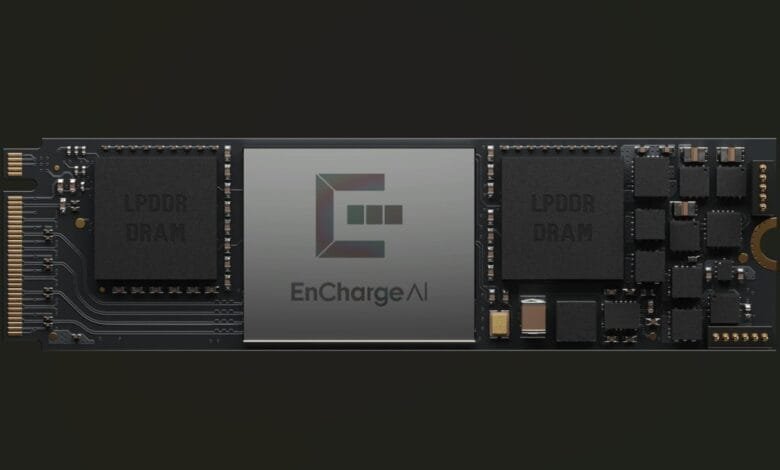

EnCharge AI has unveiled the EN100, an AI accelerator chip built on analog in-memory computing. Designed to bring high-performance AI directly to laptops, workstations, and edge devices, the EN100 delivers over 200 TOPS of compute while operating within tight power constraints. The chip could change how AI applications run locally by reducing their reliance on cloud infrastructure.

The EN100 uses analog in-memory computing, an approach the company says delivers about 20x better performance per watt than traditional digital architectures. By performing computation inside the memory array rather than shuttling data to separate processing units, the chip cuts the energy spent on data movement while accelerating AI workloads. This suits it to applications that need real-time processing, such as generative AI, computer vision, and multimodal reasoning systems.
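To make the idea of computing inside memory more concrete, here is a minimal toy sketch in Python. It is not EnCharge's design: the function names, the Gaussian noise model, and the 8-bit ADC are all assumptions chosen for illustration. The weights stay in place, the input vector is applied to every row at once, each row accumulates its products as a single analog quantity, and a converter digitizes the result.

```python
import random


def digital_matvec(weights, x):
    """Reference result: exact dot product of each weight row with x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def in_memory_matvec(weights, x, noise_std=0.5, adc_bits=8, seed=0):
    """Toy model of an analog in-memory matrix-vector multiply.

    Hypothetical illustration only. Each weight row models one line of a
    memory array that never moves; the row's products are summed as one
    analog value, perturbed by noise, then quantized by an ADC.
    """
    rng = random.Random(seed)
    # Largest magnitude any row could produce: the ADC's full-scale range.
    max_abs = max(sum(abs(w) for w in row) for row in weights) * max(abs(v) for v in x)
    levels = 2 ** (adc_bits - 1) - 1
    step = max_abs / levels if max_abs else 1.0

    outputs = []
    for row in weights:
        analog = sum(w * xi for w, xi in zip(row, x))  # accumulation inside the array
        analog += rng.gauss(0.0, noise_std)            # assumed analog non-ideality
        code = max(-levels, min(levels, round(analog / step)))  # ADC quantization
        outputs.append(code * step)
    return outputs


if __name__ == "__main__":
    W = [[1, -2, 3], [0, 4, -1]]
    x = [2, 1, -1]
    print("exact :", digital_matvec(W, x))
    print("analog:", in_memory_matvec(W, x))
```

The analog result only approximates the exact one, which is the usual trade-off of this style of computing: less data movement in exchange for noise and quantization error that the hardware and software stack must keep within what a model tolerates.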

Key features of the EN100 include:

- M.2 for Laptops: Packing 200+ TOPS in an 8.25W power envelope, the chip enables advanced AI applications without draining battery life.

- PCIe for Workstations: With four NPUs delivering nearly 1 PetaOPS, the PCIe version rivals GPU performance at a fraction of the cost and energy consumption.
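The M.2 figures above translate directly into an efficiency number. The short Python sketch below does the arithmetic using only the quoted 200 TOPS and 8.25W; the helper names are made up for this example, and because the article says "200+" the results should be read as a lower bound rather than a measured value.

```python
def tops_per_watt(tops, watts):
    """Throughput per unit power, in TOPS/W."""
    return tops / watts


def femtojoules_per_op(tops, watts):
    """Average energy per operation, in femtojoules (1 fJ = 1e-15 J)."""
    ops_per_second = tops * 1e12
    return watts / ops_per_second * 1e15


if __name__ == "__main__":
    # Figures quoted in the article for the M.2 card.
    print(f"{tops_per_watt(200, 8.25):.1f} TOPS/W")       # 24.2 TOPS/W
    print(f"{femtojoules_per_op(200, 8.25):.2f} fJ/op")   # 41.25 fJ/op
```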

EnCharge AI’s full-stack software suite ensures seamless integration with popular frameworks like PyTorch and TensorFlow, allowing developers to optimize models efficiently. Early adopters are already exploring use cases such as always-on AI assistants and real-time gaming enhancements.

What sets EN100 apart?

- 20x better performance per watt than competing solutions.

- Up to 128GB of LPDDR memory with 272 GB/s of bandwidth, for complex AI tasks typically reserved for data centers.

- Compact form factor, making it ideal for space-constrained devices like laptops and smartphones.
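One way to read the 272 GB/s bandwidth figure above is as a rough ceiling on how fast a large language model could generate text. The Python sketch below is a back-of-the-envelope estimate, not a benchmark. It assumes every generated token requires reading all model weights from LPDDR once, and it ignores caching, the KV cache, and compute time. The model sizes and bit widths are hypothetical examples that do not come from the article.

```python
def weight_bytes(params_billion, bits_per_weight):
    """Size of the model weights in bytes."""
    return params_billion * 1e9 * bits_per_weight / 8


def max_tokens_per_second(bandwidth_gb_s, params_billion, bits_per_weight):
    """Bandwidth-bound upper limit on decode speed (assumed one full weight read per token)."""
    return bandwidth_gb_s * 1e9 / weight_bytes(params_billion, bits_per_weight)


if __name__ == "__main__":
    bandwidth = 272  # GB/s, as quoted in the article
    # Hypothetical models: 7B parameters at 8 bits, 70B parameters at 4 bits.
    print(f"{max_tokens_per_second(bandwidth, 7, 8):.1f} tokens/s")   # 38.9 tokens/s
    print(f"{max_tokens_per_second(bandwidth, 70, 4):.1f} tokens/s")  # 7.8 tokens/s
```

Under these assumptions, the 70B example needs 35 GB for its weights, which fits comfortably within a 128GB memory budget, so bandwidth rather than capacity would be the tighter constraint.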

The company, which spun out of Princeton University, has secured $144 million in funding, including an $18.6 million DARPA grant to further develop its compute-in-memory technology. By addressing the energy and memory bottlenecks plaguing traditional AI chips, EnCharge aims to democratize AI, bringing powerful inference capabilities to everyday devices.

Why this matters: As AI models grow larger, reliance on cloud data centers has led to soaring costs, latency issues, and environmental concerns. The EN100’s ultra-efficient design could shift AI processing to local devices, reducing dependency on centralized infrastructure. This not only enhances privacy and security but also opens doors for new AI-driven applications in industries ranging from healthcare to defense.

With Tiger Global, Samsung Ventures, and RTX Ventures among its backers, EnCharge is well-positioned to disrupt the AI hardware market. Developers and OEMs can join the Early Access Program to explore how EN100 can power the next wave of intelligent devices.

By reimagining AI computation at the hardware level, EnCharge is paving the way for a future where advanced AI runs locally—faster, cheaper, and more sustainably.

(Source: VentureBeat)