AI Demand Sparks DRAM Shortage and Price Hikes

Summary

– Surging AI demand for High-Bandwidth Memory (HBM) is diverting DRAM supply from other electronics, causing prices to rise 80-90% this quarter.

– The DRAM shortage stems from a collision between the industry’s typical boom-bust cycle and an unprecedented, large-scale AI data center build-out.

– HBM is a complex, 3D-stacked DRAM technology critical for AI accelerators because it overcomes memory bandwidth limitations, but it can cost three times more than other memory.

– New memory fabrication plants are under construction but will not meaningfully increase supply or lower prices until at least 2027-2028.

– Even with future capacity increases, prices are expected to remain high due to insatiable AI demand and the slow rate at which prices typically decline.

The surging demand for artificial intelligence is creating a significant shortage of a critical computer memory component, sending prices soaring and squeezing supply for a wide range of other electronics. The type of DRAM used to power AI data center hardware, known as high-bandwidth memory (HBM), is commanding such intense demand that it is diverting manufacturing capacity away from other memory products. Industry analysts report DRAM prices have jumped between eighty and ninety percent in the current quarter alone. While the largest AI firms have secured their chip supplies for years ahead, manufacturers of personal computers, smartphones, and countless other devices are left to navigate scarce inventory and inflated costs.

This challenging situation stems from a perfect storm: the notoriously cyclical nature of the DRAM industry has collided with an unprecedented, AI-driven infrastructure build-out. Experts indicate that, absent a major downturn in AI investment, it will likely take several years for new production capacity to catch up with demand, and elevated prices may persist even then. To grasp the full scope of the issue, it’s essential to understand the specialized memory at the heart of it.

High-bandwidth memory represents a fundamental shift in memory design. It tackles the slowing progress of traditional chip scaling by vertically stacking multiple, thinned DRAM chips into a single compact unit. This “stack” is then placed extremely close to a GPU or AI accelerator, connected by thousands of microscopic pathways. The primary goal is to smash through the “memory wall,” the bottleneck created by the immense energy and time required to shuttle data into processors running massive AI models. While HBM technology is over a decade old, its importance has exploded alongside the size of AI systems. This performance comes at a steep price: HBM can cost three times more than conventional memory and often constitutes over half the total cost of a packaged AI accelerator.

The roots of today’s shortage trace back to the volatile cycles inherent to memory manufacturing. Building a new fabrication plant requires a staggering investment, often exceeding fifteen billion dollars, leading companies to expand only during profitable boom periods. However, constructing and bringing such a facility online can take eighteen months or longer, frequently causing new supply to arrive just as demand softens, which floods the market and crashes prices. The current cycle began during the pandemic-driven chip panic, when major cloud providers stockpiled huge inventories of memory to ensure supply chain stability. When data center expansion slowed in 2022, prices plummeted, prompting manufacturers to slash production by drastic amounts to avoid losses.

This period of cutbacks made companies deeply cautious about investing in new capacity just as AI demand began its meteoric rise. The collision is now evident. Nearly two thousand new data centers are currently planned or under construction globally; if all are built, they would increase the world’s supply of data centers by roughly twenty percent. Investment analysts project trillions of dollars will flow into AI-focused data center infrastructure this decade, with a significant portion dedicated to servers and storage. This boom has propelled companies like Nvidia to record revenues. Nvidia’s latest server GPUs require eight separate HBM stacks apiece, each stack containing twelve DRAM dies. As a result, HBM and related cloud memory now account for nearly half of leading DRAM makers’ revenue, a dramatic increase from just a few years ago. Industry leaders forecast the HBM market will grow to a value larger than the entire DRAM market was in 2024, with demand substantially outstripping supply for the foreseeable future.

Addressing the supply crunch involves two paths: innovating in packaging technology or building new factories. The three dominant memory producers, Samsung, SK Hynix, and Micron, all have new facilities in various stages of development. However, these projects are long-term endeavors. New HBM and DRAM fabs in Singapore, South Korea, Indiana, and New York are not expected to reach full production until between 2027 and 2030. An executive from Intel recently summarized the outlook, stating he sees “no relief until 2028.”

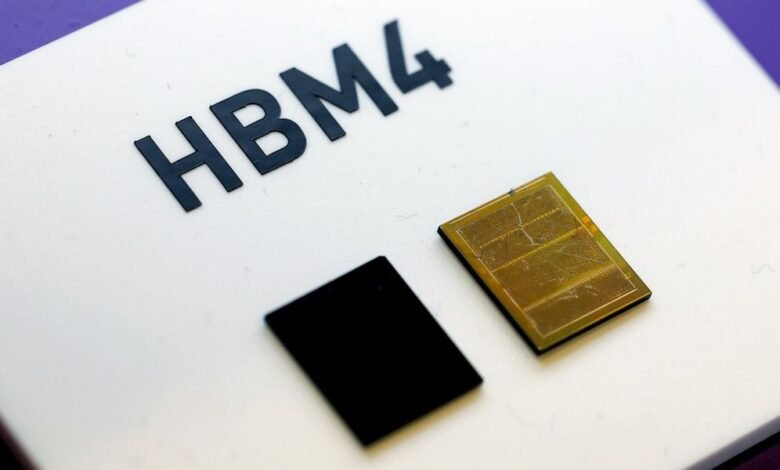

In the interim, supply increases will depend on incremental expansions, improvements in manufacturing yields, and closer collaboration between memory suppliers and AI chip designers. Even when new plants come online, economists caution that prices tend to fall much more slowly than they rise, especially when driven by seemingly insatiable demand for computing power. Concurrently, the technology itself continues to evolve. The forthcoming HBM4 standard supports stacks of sixteen DRAM dies, pushing the limits of thermal management within the tightly packed silicon. Manufacturing improvements, such as advanced molded underfill today and hybrid bonding in the future, aim to improve heat dissipation and could eventually enable stacks of twenty dies, further increasing both the silicon consumed per unit and the performance delivered to AI systems.
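To make the "silicon consumed per unit" point concrete, here is a rough back-of-the-envelope sketch in Python. It uses only the stack heights mentioned in this article (twelve, sixteen, and twenty dies) and assumes, purely for illustration, that an accelerator keeps the eight HBM stacks cited above for Nvidia's latest server GPUs. The function and variable names are hypothetical, and the count covers DRAM dies only.

```python
# Illustrative arithmetic only. Holding the stack count at eight for every
# row is an assumption made for comparison, not a figure from any roadmap.
STACKS_PER_ACCELERATOR = 8

# DRAM dies per HBM stack, as mentioned in the article.
DIES_PER_STACK = {
    "current (12-high)": 12,
    "HBM4 (16-high)": 16,
    "possible future (20-high)": 20,
}


def dram_dies_per_accelerator(dies_per_stack: int,
                              stacks: int = STACKS_PER_ACCELERATOR) -> int:
    """Return the number of DRAM dies in one packaged accelerator."""
    return dies_per_stack * stacks


baseline = dram_dies_per_accelerator(DIES_PER_STACK["current (12-high)"])

for label, dies in DIES_PER_STACK.items():
    total = dram_dies_per_accelerator(dies)
    # Ratio shows how much more DRAM silicon each accelerator would consume.
    print(f"{label}: {total} DRAM dies ({total / baseline:.2f}x baseline)")
```

Under these assumptions, a single accelerator goes from 96 DRAM dies today to 128 with sixteen-high stacks and 160 with twenty-high stacks, which suggests why taller stacks could add to demand for DRAM wafers rather than ease it.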

(Source: Spectrum)